Definition

- Machine learning is the field of study that gives computers the ability to learn without being explicitly programmed.

Machine Learning Algorithms

- Supervised learning

- Unsupervised learning

- Recommender system

- Reinforcement learning

Supervised Learning

Basic Concept

- Train the model on inputs paired with their correct answers (labels), then test it on brand-new inputs it has not seen

- Example:

- Types

- Regression: a particular type of supervised learning that predicts a number from infinitely many possible outputs

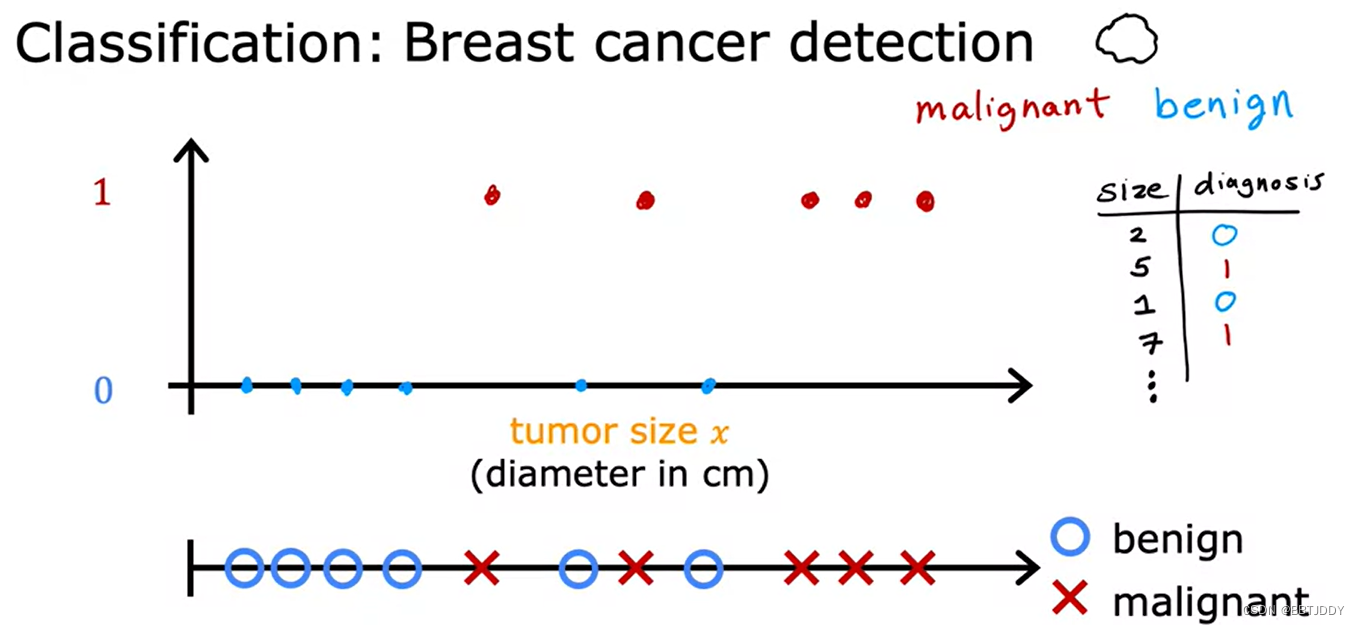

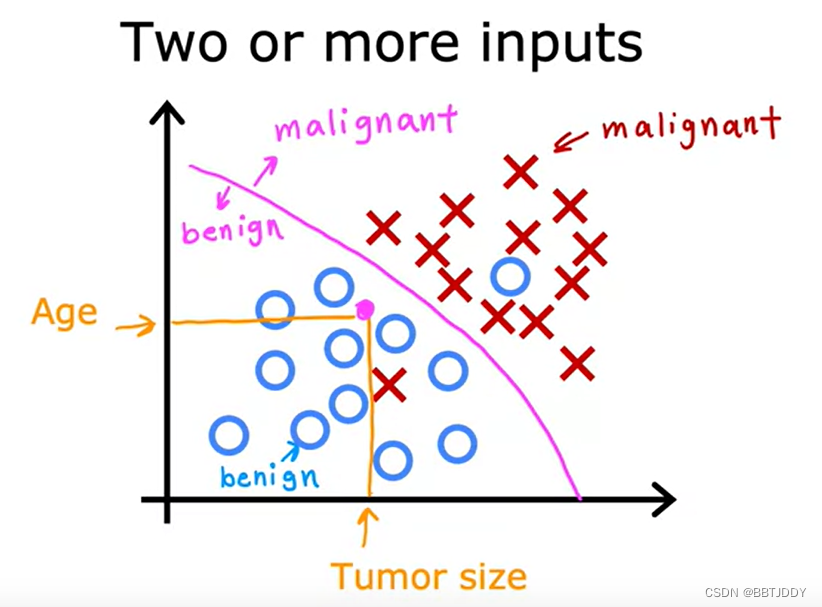

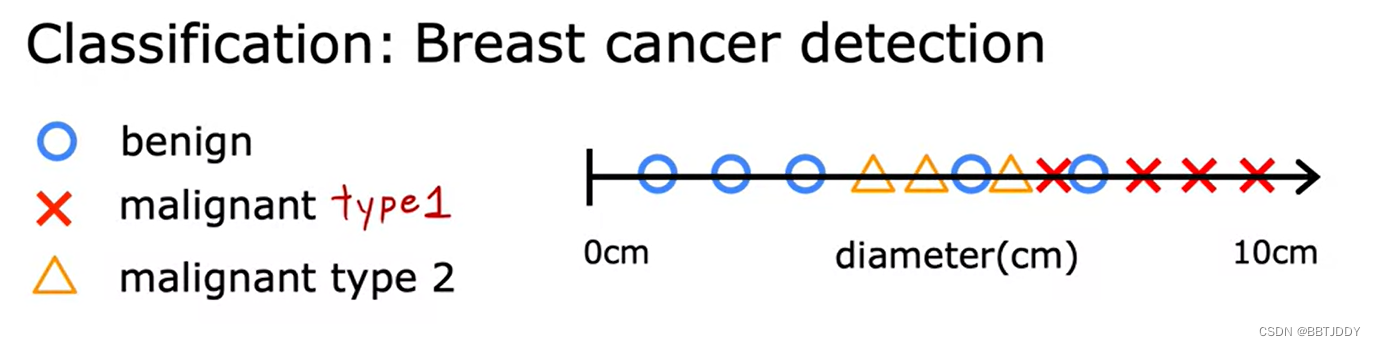

- Classification: predicts categories from a finite set of possible outputs (there may be many classes/categories, and many inputs)

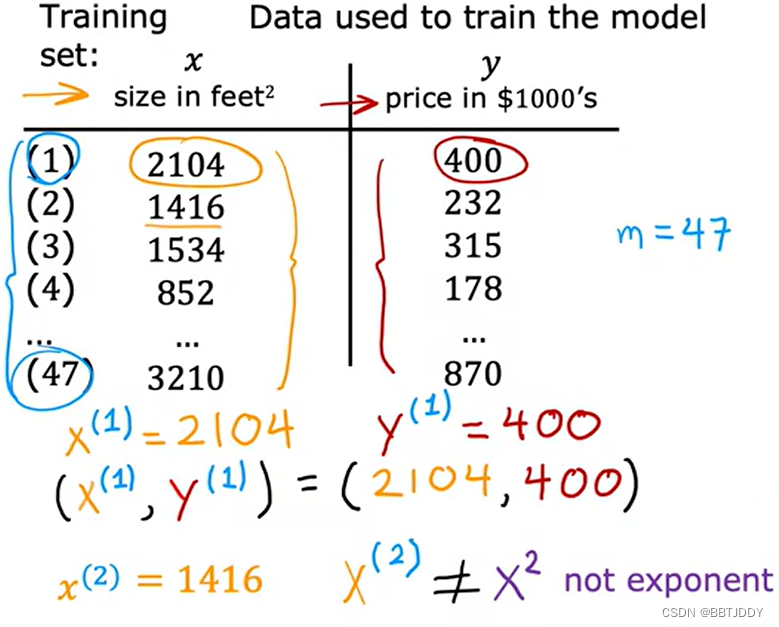

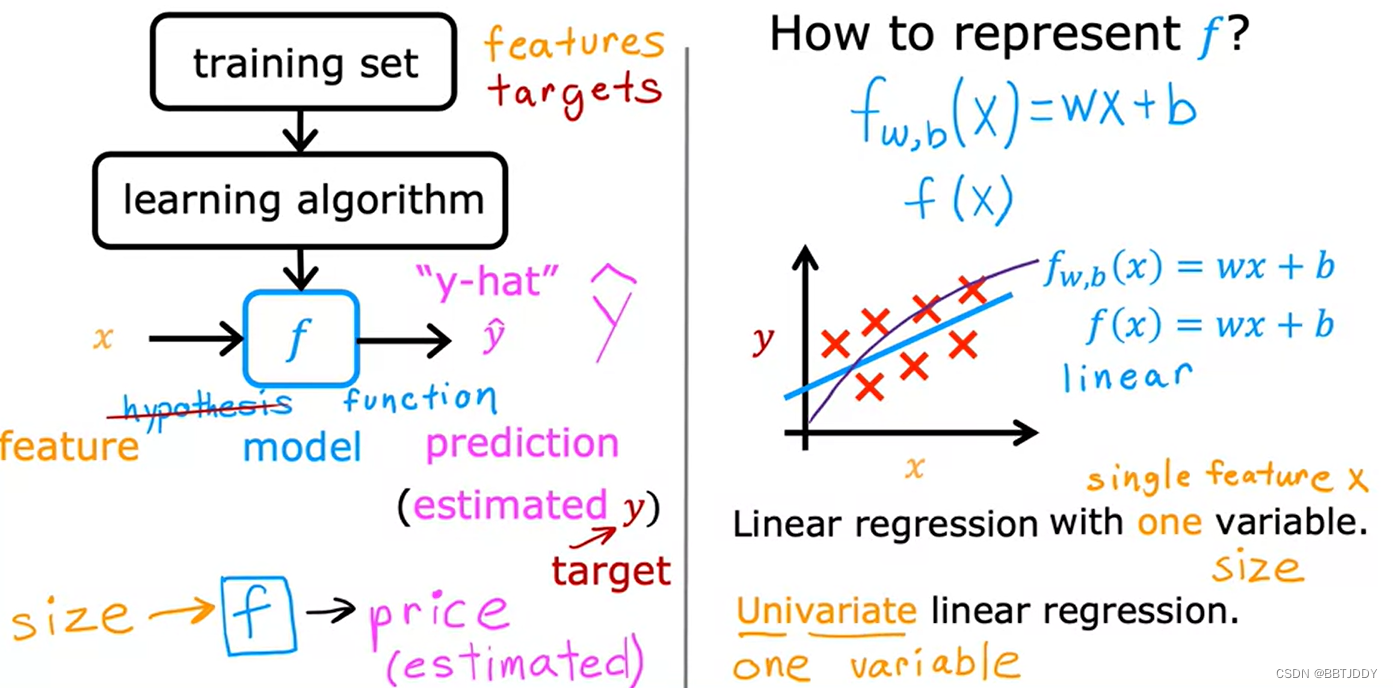

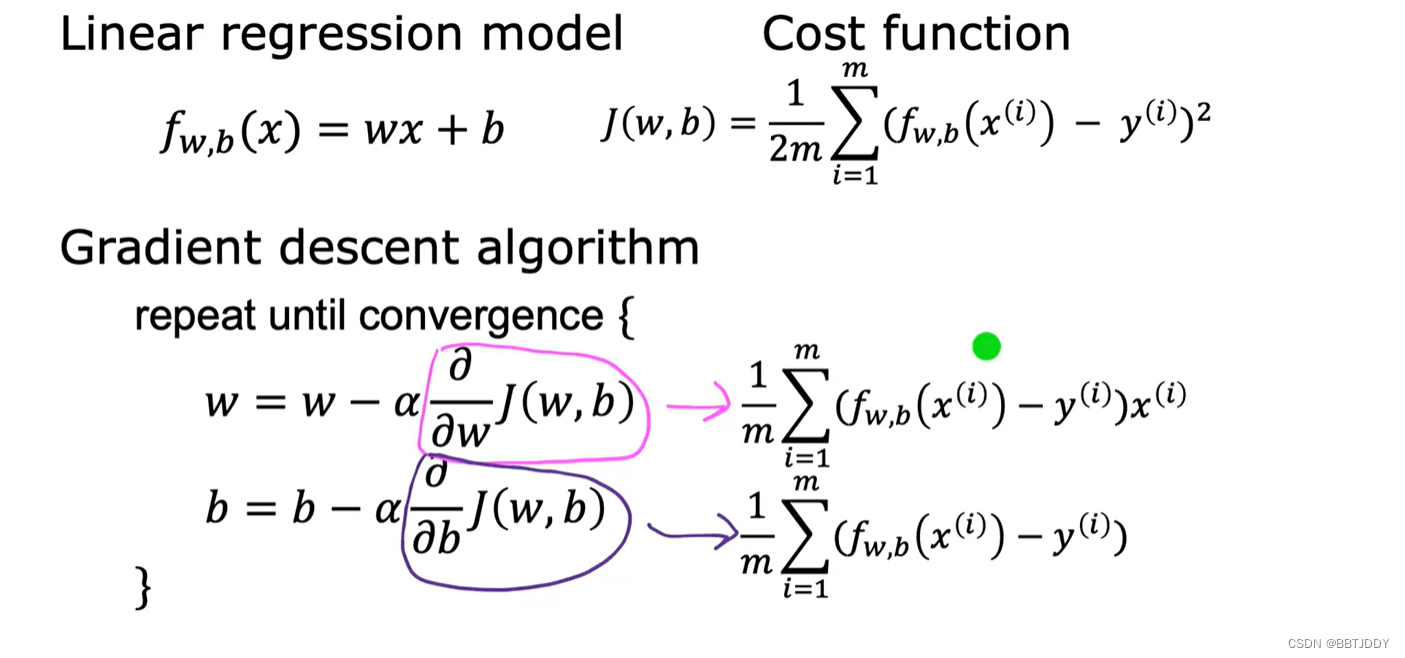

Linear Regression Model

- Terminology

- x = "input" variable = feature

- y = "output" variable = "target" variable

- m = number of training examples

- (x,y) = single training example

- w,b = parameters = coefficients = weights

- w is the slope, while b is the y-intercept
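The terminology above can be made concrete with a minimal sketch. The training data and the values chosen for w and b are made up for illustration and do not come from the figures.

```python
def predict(x: float, w: float, b: float) -> float:
    """Univariate linear model f_{w,b}(x) = w * x + b."""
    return w * x + b

# Hypothetical training set: each pair (x, y) is a single training example.
x_train = [1.0, 2.0, 3.0]        # x = input variable = feature
y_train = [300.0, 500.0, 700.0]  # y = output variable = target
m = len(x_train)                 # m = number of training examples

# With w = 200 (slope) and b = 100 (y-intercept) the line passes through every example.
predictions = [predict(x, w=200.0, b=100.0) for x in x_train]
print(m, predictions)
```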

The process of supervised learning

Univariate linear regression = linear regression with one variable

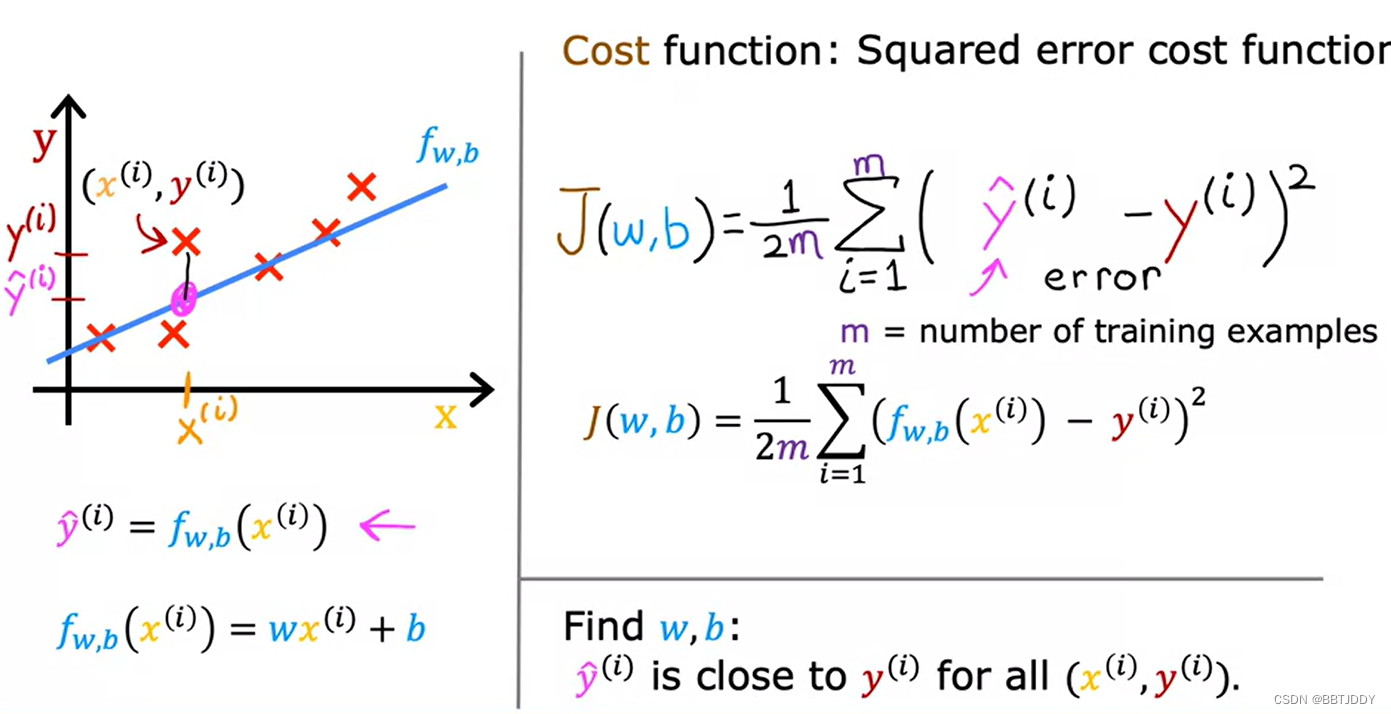

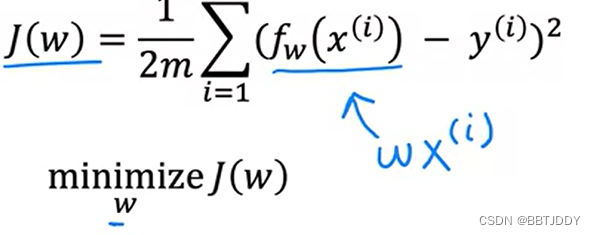

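- Cost function: used to find w and b (the extra division by 2 is there so that the 2 cancels when taking the derivative for gradient descent later, which makes the formula look cleaner)

- Squared error cost function (measures how well a given choice of w and b fits the training data, so that different choices can be compared)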
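Written out for the model f(x) = wx + b with m training examples, the squared error cost and its derivative with respect to w look as follows. This is the standard form, reconstructed here rather than copied from the figures. The superscript (i) for the i-th training example is a notational assumption.

$$
J(w,b) = \frac{1}{2m}\sum_{i=1}^{m}\left(w x^{(i)} + b - y^{(i)}\right)^2
$$

$$
\frac{\partial J}{\partial w} = \frac{1}{2m}\sum_{i=1}^{m} 2\left(w x^{(i)} + b - y^{(i)}\right)x^{(i)} = \frac{1}{m}\sum_{i=1}^{m}\left(w x^{(i)} + b - y^{(i)}\right)x^{(i)}
$$

The factor 2 produced by differentiating the square cancels against the 2 in the denominator, which is the only reason for dividing by 2m instead of m.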

For linear regression with the squared error cost function, the cost surface always ends up with a bowl shape or a hammock shape.
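A minimal sketch of the squared error cost in code, assuming the model f(x) = wx + b and a small made-up data set. The function name `compute_cost` is hypothetical.

```python
def compute_cost(x, y, w, b):
    """Squared error cost J(w, b) = (1 / (2m)) * sum((w * x_i + b - y_i) ** 2)."""
    m = len(x)
    total = sum((w * xi + b - yi) ** 2 for xi, yi in zip(x, y))
    return total / (2 * m)

# Hypothetical training data generated by y = x.
x_train = [1.0, 2.0, 3.0]
y_train = [1.0, 2.0, 3.0]

perfect = compute_cost(x_train, y_train, w=1.0, b=0.0)  # the line fits every point
worse = compute_cost(x_train, y_train, w=0.0, b=0.0)    # a flat line at y = 0
print(perfect, worse)
```

A lower cost means the line fits the training data better, so finding good values for w and b amounts to minimizing J.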

- The difference between fw(x) and J(w)

- fw(x) is a function of the input x for a fixed w, while J(w) is a function of the parameter w: each choice of w gives a different line fw(x) and a different value of the cost J(w)
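The distinction can be illustrated with a short sketch, assuming b = 0 and made-up data generated by y = x. Fixing w gives one line over x, while sweeping w gives one cost value per line.

```python
# Hypothetical training data generated by y = x.
x_train = [1.0, 2.0, 3.0]
y_train = [1.0, 2.0, 3.0]

def f(x, w):
    """Model with b fixed to 0: for a given w this is a function of x."""
    return w * x

def J(w):
    """Cost of the whole line f(., w): a function of the parameter w."""
    m = len(x_train)
    return sum((f(x, w) - y) ** 2 for x, y in zip(x_train, y_train)) / (2 * m)

# One fixed w gives one line, evaluated at each input x.
line_for_w_half = [f(x, 0.5) for x in x_train]

# Each candidate w gives one point on the curve J(w).
costs = {w: J(w) for w in [0.0, 0.5, 1.0, 1.5, 2.0]}
best_w = min(costs, key=costs.get)
print(line_for_w_half, costs, best_w)
```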

Gradient descent

- A method for finding the minimum of J(w,b)

- At each step, look around a full 360 degrees, take a small step in the direction of steepest descent, and repeat from the new point until you cannot go down any further

- Process (so-called "batch" gradient descent: each step uses all the training examples)

- Start with some w,b (e.g. set w=b=0)

- Keep changing w,b to reduce J(w,b)

- Until we settle at or near a minimum

- If different starting points lead to different minima, each of those results is called a local minimum

- Gradient descent algorithm

α = learning rate (usually a small positive number between 0 and 1): decides how large a step to take when going down the hill

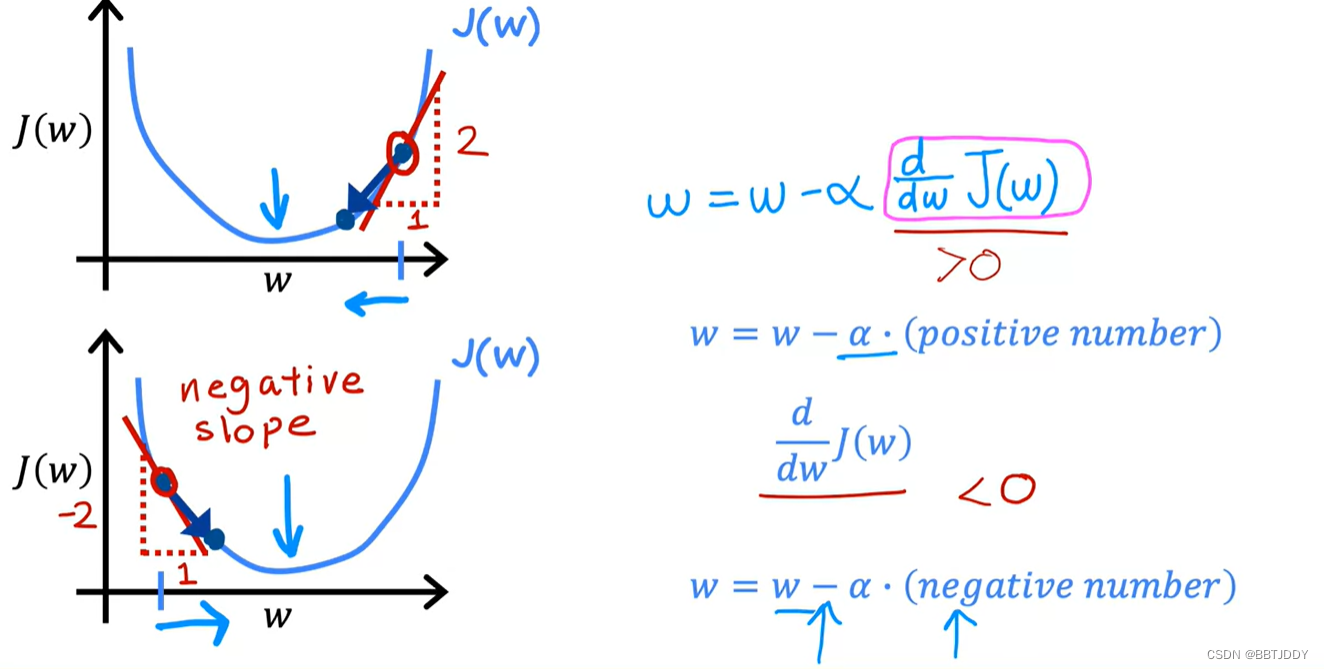

The derivative dJ(w,b)/dw determines the direction in which you take your step

- The end condition: w and b don't change much with each additional step that you take

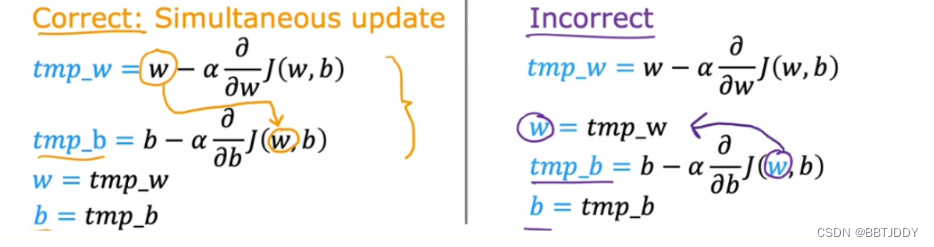

- Tip: b and w must be updated simultaneously
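The update rule, the end condition, and the simultaneous-update tip can be sketched as follows. This is a minimal illustration for the squared error cost, not the exact code behind the figures. The function names, the `tolerance` and `max_steps` parameters, and the training data are assumptions.

```python
def gradients(x, y, w, b):
    """Partial derivatives of the squared error cost J(w, b) with respect to w and b."""
    m = len(x)
    errors = [w * xi + b - yi for xi, yi in zip(x, y)]
    dj_dw = sum(e * xi for e, xi in zip(errors, x)) / m
    dj_db = sum(errors) / m
    return dj_dw, dj_db

def gradient_descent(x, y, alpha=0.1, tolerance=1e-9, max_steps=100_000):
    w, b = 0.0, 0.0  # starting point: w = b = 0
    for _ in range(max_steps):
        # Both derivatives are computed from the old (w, b) ...
        dj_dw, dj_db = gradients(x, y, w, b)
        # ... and only then are w and b updated together.
        new_w = w - alpha * dj_dw
        new_b = b - alpha * dj_db
        # End condition: w and b barely change with an additional step.
        converged = abs(new_w - w) < tolerance and abs(new_b - b) < tolerance
        w, b = new_w, new_b
        if converged:
            break
    return w, b

# Hypothetical training data generated by y = 2x + 1.
x_train = [1.0, 2.0, 3.0]
y_train = [3.0, 5.0, 7.0]
w, b = gradient_descent(x_train, y_train)
print(w, b)
```

Updating w first and then computing the derivative for b from the already-updated w would mix old and new parameter values, which is what the simultaneous update avoids.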

- Why does this make sense?

- Learning rate α

Problem 1: When α is too small, gradient descent still works but is very slow

Problem 2: When α is too big, it may overshoot and never reach the minimum of J(w); it may even diverge

Problem 3: When the starting point is already a local minimum, the derivative is zero, so w stays at that local minimum (gradient descent can also reach a local minimum with a fixed learning rate)

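So the size of each step changes with the slope: as the slope flattens near a minimum, the steps get smaller automatically, even though α itself stays fixed.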
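These effects can be reproduced with a small experiment. It is a sketch that assumes b = 0, made-up data generated by y = x (so the minimum of J(w) is at w = 1), and arbitrarily chosen values of α.

```python
# Hypothetical training data generated by y = x; the minimum of J(w) is at w = 1.
x_train = [1.0, 2.0, 3.0]
y_train = [1.0, 2.0, 3.0]

def dj_dw(w):
    """Derivative of the squared error cost J(w) for the model f(x) = w * x."""
    m = len(x_train)
    return sum((w * x - y) * x for x, y in zip(x_train, y_train)) / m

def run(alpha, w=0.0, steps=20):
    """Return the list of w values visited by `steps` gradient descent updates."""
    path = [w]
    for _ in range(steps):
        w = w - alpha * dj_dw(w)
        path.append(w)
    return path

too_small = run(alpha=0.001)         # moves toward w = 1, but very slowly
reasonable = run(alpha=0.2)          # settles at w = 1 within a few steps
too_large = run(alpha=0.5)           # overshoots further on every step
at_minimum = run(alpha=0.2, w=1.0)   # derivative is zero, so w never moves

# With a fixed alpha the steps still shrink as the slope flattens.
step_sizes = [abs(b - a) for a, b in zip(reasonable, reasonable[1:])]
print(too_small[-1], reasonable[-1], too_large[-1], step_sizes[:3])
```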

Linear Regression Algorithm

- For the squared error cost function, there is only one minimum, the global minimum

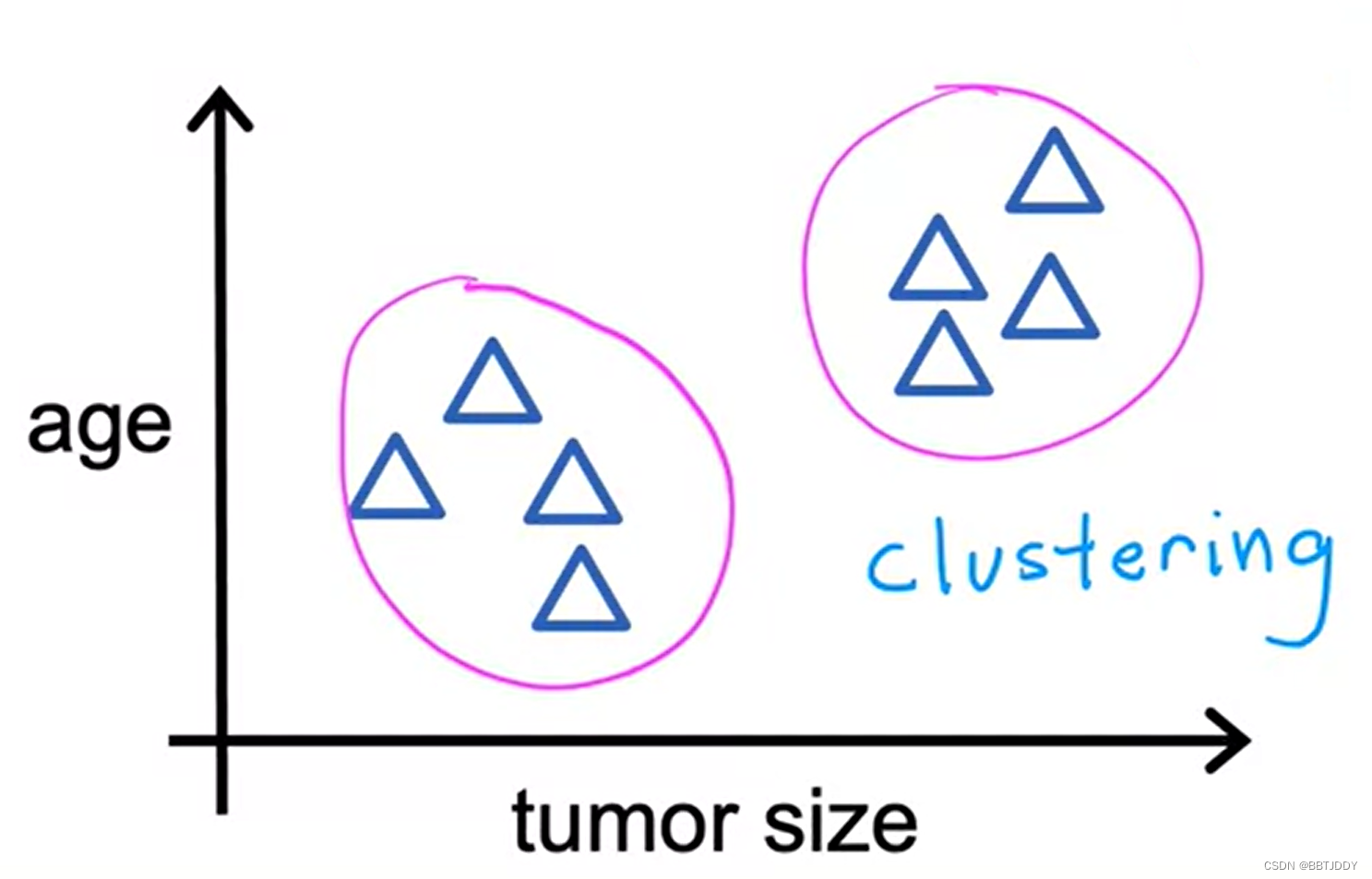
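Because the squared error cost for linear regression has a single minimum, gradient descent should arrive at the same w and b from any starting point, provided α is small enough. A minimal sketch of that check, with made-up data and arbitrarily chosen starting points:

```python
def fit(x, y, w, b, alpha=0.1, steps=5000):
    """Run a fixed number of batch gradient descent steps from the start (w, b)."""
    m = len(x)
    for _ in range(steps):
        errors = [w * xi + b - yi for xi, yi in zip(x, y)]
        dj_dw = sum(e * xi for e, xi in zip(errors, x)) / m
        dj_db = sum(errors) / m
        w, b = w - alpha * dj_dw, b - alpha * dj_db  # simultaneous update
    return w, b

# Hypothetical training data generated by y = 2x + 1.
x_train = [1.0, 2.0, 3.0]
y_train = [3.0, 5.0, 7.0]

# Three arbitrary starting points for (w, b).
starts = [(0.0, 0.0), (10.0, -10.0), (-5.0, 20.0)]
results = [fit(x_train, y_train, w0, b0) for w0, b0 in starts]
print(results)
```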

Unsupervised Learning

- Finding something interesting in unlabeled data: the data comes only with inputs x, not output labels y, so the algorithm has to find structure in the data

- Types

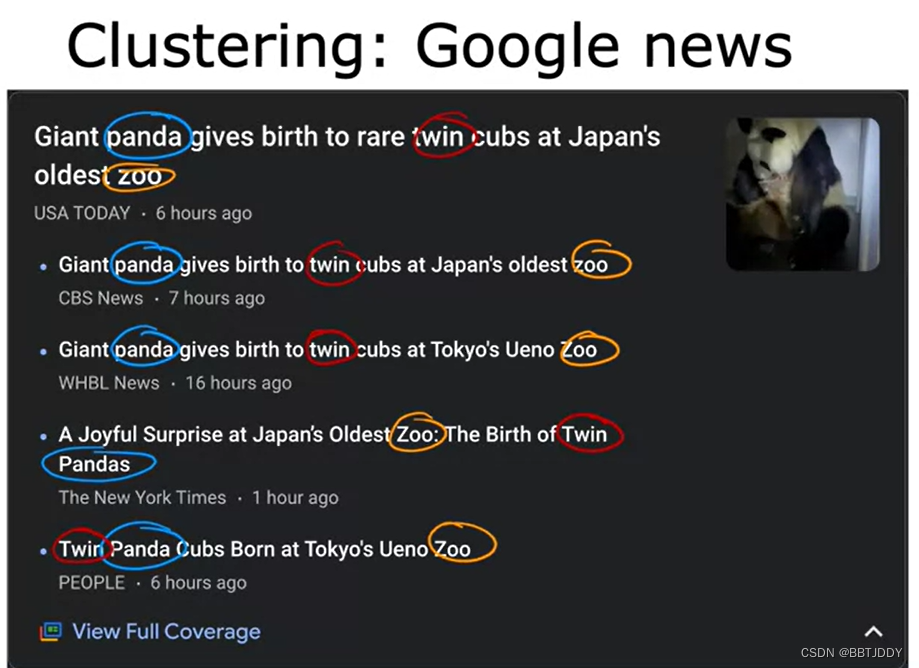

- Clustering: Group similar data points together

- Anomaly detection: Find unusual data points

- Dimensionality reduction: Compress data using fewer numbers
