ABSTRACT

This paper addresses the problem of model compression via knowledge distillation. We advocate for a method that optimizes the output feature of the penultimate layer of the student network and is hence directly related to representation learning. To this end, we first propose a direct feature matching approach that focuses on optimizing the student's penultimate layer only. Second, and more importantly, because feature matching does not take into account the classification problem at hand, we propose a second approach that decouples representation learning and classification and uses the teacher's pre-trained classifier to train the student's penultimate-layer feature. In particular, for the same input image, we want the teacher's and student's features to produce the same output when passed through the teacher's classifier, which is achieved with a simple L2 loss. Our method is extremely simple to implement and straightforward to train, and it is shown to consistently outperform previous state-of-the-art methods over a large set of experimental settings, including different (a) network architectures, (b) teacher-student capacities, (c) datasets, and (d) domains. The code is available at https://github.com/jingyang2017/KD_SRRL.

INTRODUCTION

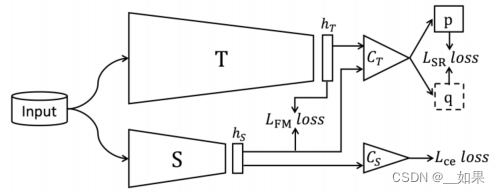

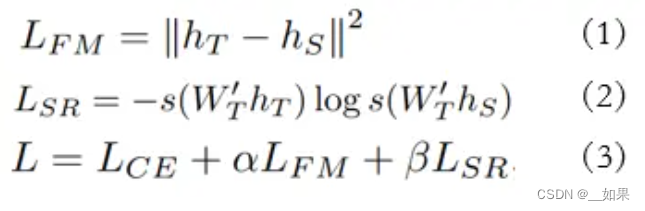

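Our method performs knowledge distillation by minimizing the discrepancy between the teacher's and student's penultimate feature representations h_T and h_S. To this end, we propose to use two losses: (a) the Feature Matching loss L_FM, and (b) the so-called Softmax Regression loss L_SR. In contrast to L_FM, our main contribution, L_SR, is designed to take the classification task at hand into account: it requires that, for the same input image, the teacher's and student's features produce the same output when passed through the teacher's pre-trained and frozen classifier. Note that, for simplicity, the function used to make the feature dimensions of h_T and h_S the same is not shown.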
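To make the two losses concrete, here is a minimal pure-Python sketch. It is not the authors' implementation (that is in the repository linked in the abstract). Several details are assumptions made for illustration: the linear connector `C` standing in for the unshown dimension-matching function, the bias-free linear classifiers, the weights `alpha` and `beta`, and computing the L2 loss on the teacher classifier's logits rather than on softmax probabilities.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # one row per output unit


def matvec(W: Matrix, h: Vector) -> Vector:
    """Apply a bias-free linear layer W to a feature vector h."""
    return [sum(w * x for w, x in zip(row, h)) for row in W]


def squared_l2(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance ||a - b||^2."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def feature_matching_loss(h_t: Vector, h_s_proj: Vector) -> float:
    """L_FM: match the student's (projected) penultimate feature to the teacher's."""
    return squared_l2(h_t, h_s_proj)


def softmax_regression_loss(h_t: Vector, h_s_proj: Vector, W_t: Matrix) -> float:
    """L_SR: pass both features through the SAME frozen teacher classifier W_t
    and compare the outputs with an L2 loss (assumption: on logits)."""
    return squared_l2(matvec(W_t, h_t), matvec(W_t, h_s_proj))


def cross_entropy(logits: Vector, label: int) -> float:
    """Numerically stable cross-entropy of softmax(logits) against a class index."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[label]


def total_loss(h_t, h_s, C, W_t, W_s, label, alpha=1.0, beta=1.0):
    """L_CE on the student's own classifier W_s plus weighted L_FM and L_SR.
    C is a hypothetical linear connector mapping h_s to the teacher's feature size;
    alpha and beta are hypothetical loss weights."""
    h_s_proj = matvec(C, h_s)
    l_ce = cross_entropy(matvec(W_s, h_s), label)
    return (l_ce
            + alpha * feature_matching_loss(h_t, h_s_proj)
            + beta * softmax_regression_loss(h_t, h_s_proj, W_t))


if __name__ == "__main__":
    h_t, h_s_proj = [1.0, 0.0], [0.0, 1.0]
    print(feature_matching_loss(h_t, h_s_proj))  # 2.0
    # This W_t maps the difference h_s_proj - h_t = [-1, 1] to zero, so L_SR ignores it.
    print(softmax_regression_loss(h_t, h_s_proj, [[1.0, 1.0], [2.0, 2.0]]))  # 0.0
```

The usage lines illustrate the intended difference between the two losses: L_FM penalizes every discrepancy between h_T and h_S, whereas L_SR only penalizes discrepancies that change the output of the frozen teacher classifier.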

METHOD

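In Eq. 1, h_T and h_S denote the penultimate-layer output features of the teacher and the student, respectively; in Eq. 2, W_T' denotes the parameters of the teacher's classifier; and in Eq. 3, L_CE is the standard cross-entropy loss computed with the ground-truth labels.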
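Equations 1 to 3 are not reproduced in these notes. The block below is a hedged reconstruction that is only consistent with the description given here (an L2 feature-matching loss, an L2 loss between the outputs of the teacher's classifier, and a cross-entropy term); it is not a verbatim copy of the paper. The connector r(·), the weights α and β, and applying the L2 loss to the classifier's logits are assumptions.

```latex
\begin{aligned}
\mathcal{L}_{FM} &= \lVert h_T - r(h_S) \rVert_2^2 && (1)\\
\mathcal{L}_{SR} &= \lVert W_T'\, h_T - W_T'\, r(h_S) \rVert_2^2 && (2)\\
\mathcal{L} &= \mathcal{L}_{CE} + \alpha\, \mathcal{L}_{FM} + \beta\, \mathcal{L}_{SR} && (3)
\end{aligned}
```

Because W_T' is frozen, the gradient of Eq. 2 flows only into the student (through r(h_S)), which is what lets the teacher's classifier supervise the student's representation.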

ABLATION STUDIES

L_SR is much more effective than L_FM.

Applying the L_SR loss at multiple points in the network actually hurts accuracy.

CONCLUSION

We presented a method for knowledge distillation that optimizes the output feature of the penultimate layer of the student network and is hence directly related to representation learning. A key to our method is the newly proposed Softmax Regression Loss, which we found to be necessary for effective representation learning. We showed that our method consistently outperforms other state-of-the-art distillation methods across a wide range of experimental settings, including multiple network architectures (ResNet, Wide ResNet, MobileNet) with different teacher-student capacities, datasets (CIFAR10/100, ImageNet), and domains (real-valued and binary networks).