I. Required Tools

Required tools:

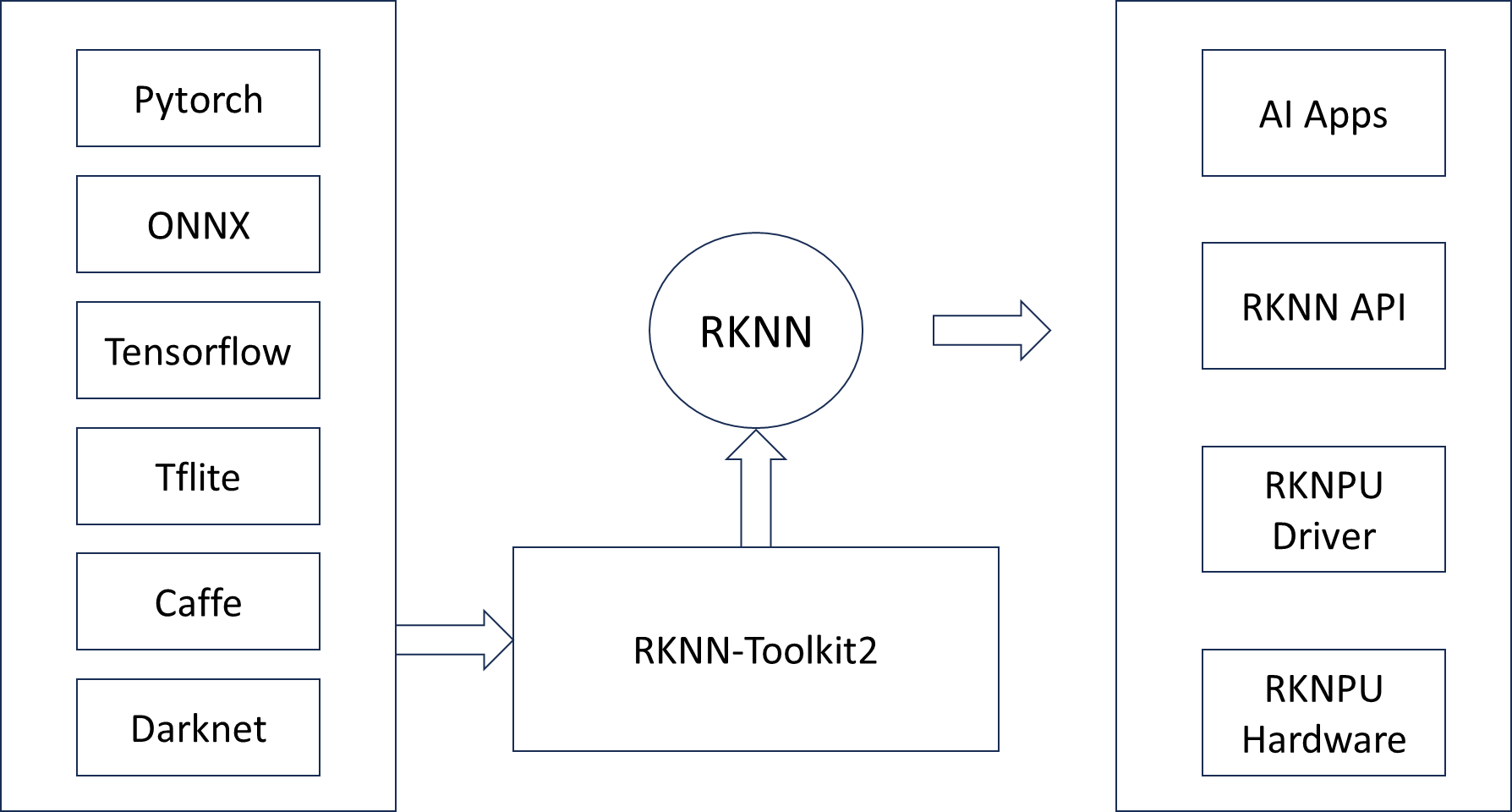

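RKNN-Toolkit2 is a software development kit for performing model conversion, inference, and performance evaluation on a PC and on Rockchip NPU platforms.

RKNN-Toolkit-Lite2 provides a Python programming interface for Rockchip NPU platforms, helping users deploy RKNN models and speed up the implementation of AI applications.

RKNN Runtime provides a C/C++ programming interface for Rockchip NPU platforms, helping users deploy RKNN models and speed up the implementation of AI applications.

The RKNPU kernel driver is responsible for interacting with the NPU hardware.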

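Deploying YOLOv8 with RKNN therefore takes two steps:

1. On the PC, use rknn-toolkit2 to convert a model from its original framework into the RKNN format.

2. On the board, use the Python API of rknn-toolkit-lite2 to run inference with the converted model.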

II. Environment Dependencies

    conda create -n rknn python=3.8.20
    pip install -r requirements.txt

actionlib==1.14.0

angles==1.9.13

bondpy==1.8.6

camera-calibration==1.17.0

camera-calibration-parsers==1.12.0

catkin==0.8.10

controller-manager==0.20.0

controller-manager-msgs==0.20.0

cv-bridge==1.16.2

diagnostic-analysis==1.11.0

diagnostic-common-diagnostics==1.11.0

diagnostic-updater==1.11.0

dynamic-reconfigure==1.7.3

gazebo_plugins==2.9.2

gazebo_ros==2.9.2

gencpp==0.7.0

geneus==3.0.0

genlisp==0.4.18

genmsg==0.6.0

gennodejs==2.0.2

genpy==0.6.15

image-geometry==1.16.2

interactive-markers==1.12.0

joint-state-publisher==1.15.1

joint-state-publisher-gui==1.15.1

laser_geometry==1.6.7

message-filters==1.16.0

numpy==1.26.4

opencv-python==4.8.0.76

psutil==5.9.8

python-qt-binding==0.4.4

qt-dotgraph==0.4.2

qt-gui==0.4.2

qt-gui-cpp==0.4.2

qt-gui-py-common==0.4.2

resource_retriever==1.12.7

rosbag==1.16.0

rosboost-cfg==1.15.8

rosclean==1.15.8

roscreate==1.15.8

rosgraph==1.16.0

roslaunch==1.16.0

roslib==1.15.8

roslint==0.12.0

roslz4==1.16.0

rosmake==1.15.8

rosmaster==1.16.0

rosmsg==1.16.0

rosnode==1.16.0

rosparam==1.16.0

rospy==1.16.0

rosservice==1.16.0

rostest==1.16.0

rostopic==1.16.0

rosunit==1.15.8

roswtf==1.16.0

rqt-console==0.4.12

rqt-image-view==0.4.17

rqt-logger-level==0.4.12

rqt-moveit==0.5.11

rqt-reconfigure==0.5.5

rqt-robot-dashboard==0.5.8

rqt-robot-monitor==0.5.15

rqt-runtime-monitor==0.5.10

rqt-rviz==0.7.0

rqt-tf-tree==0.6.4

rqt_action==0.4.9

rqt_bag==0.5.1

rqt_bag_plugins==0.5.1

rqt_dep==0.4.12

rqt_graph==0.4.14

rqt_gui==0.5.3

rqt_gui_py==0.5.3

rqt_launch==0.4.9

rqt_msg==0.4.10

rqt_nav_view==0.5.7

rqt_plot==0.4.13

rqt_pose_view==0.5.11

rqt_publisher==0.4.10

rqt_py_common==0.5.3

rqt_py_console==0.4.10

rqt_robot_steering==0.5.12

rqt_service_caller==0.4.10

rqt_shell==0.4.11

rqt_srv==0.4.9

rqt_top==0.4.10

rqt_topic==0.4.13

rqt_web==0.4.10

ruamel.yaml==0.18.6

ruamel.yaml.clib==0.2.8

rviz==1.14.25

sensor-msgs==1.13.1

smach==2.5.2

smach-ros==2.5.2

smclib==1.8.6

tf==1.13.2

tf-conversions==1.13.2

tf2-geometry-msgs==0.7.7

tf2-kdl==0.7.7

tf2-py==0.7.7

tf2-ros==0.7.7

topic-tools==1.16.0

xacro==1.14.18
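
With this many pins, it can help to check which ones the active environment actually satisfies. The following is a minimal sketch using only the standard library; `parse_pins` and `find_mismatches` are hypothetical helper names, and the sample list is just a short excerpt of the pins above.

```python
from importlib import metadata


def parse_pins(lines):
    """Parse 'name==version' lines into a dict, skipping blanks and comments."""
    pins = {}
    for raw in lines:
        line = raw.strip()
        if not line or line.startswith("#") or "==" not in line:
            continue
        name, version = line.split("==", 1)
        pins[name.strip()] = version.strip()
    return pins


def find_mismatches(pins):
    """Return {name: (pinned, installed_or_None)} for every pin that is not met."""
    mismatches = {}
    for name, pinned in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None  # package is not installed at all
        if installed != pinned:
            mismatches[name] = (pinned, installed)
    return mismatches


if __name__ == "__main__":
    # Short excerpt of the pinned list; read requirements.txt instead in practice.
    sample = ["numpy==1.26.4", "", "opencv-python==4.8.0.76", "psutil==5.9.8"]
    for name, (pinned, installed) in find_mismatches(parse_pins(sample)).items():
        print(f"{name}: pinned {pinned}, installed {installed}")
```

To check the full list, pass `open("requirements.txt").read().splitlines()` to `parse_pins`.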

III. Converting and Deploying the YOLO COCO-Pretrained Model

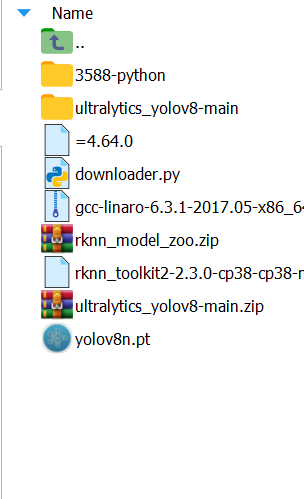

Install rknn_toolkit2 in the virtual machine (this only needs to be done once):

| Hardware platform | RKNN-Toolkit2 version | Python version | Wheel file suffix |
|---|---|---|---|
| RK3588 | 1.6.0 | 3.8/3.10 | linux_aarch64.whl |
| RK3566 | 1.5.0 | 3.6/3.8 | linux_aarch64.whl |
| x86 PC | 2.3.0 | 3.8 | manylinux2014_x86_64.whl |
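
The table maps directly onto the wheel file name used in the install command. As a rough sketch, the name can be assembled from the toolkit version, the Python version, and the suffix column; `wheel_filename` is a hypothetical helper, and real release files may carry extra build tags, so treat the result as a pattern to compare against rather than a guaranteed file name.

```python
def wheel_filename(toolkit_version, python_version, wheel_suffix):
    """Build a name such as rknn_toolkit2-1.6.0-cp38-cp38-linux_aarch64.whl.

    wheel_suffix is the last column of the table and already ends in '.whl'.
    """
    major, minor = python_version.split(".")[:2]
    tag = f"cp{major}{minor}"  # CPython tag, e.g. 3.8 -> cp38
    return f"rknn_toolkit2-{toolkit_version}-{tag}-{tag}-{wheel_suffix}"


if __name__ == "__main__":
    print(wheel_filename("1.6.0", "3.8", "linux_aarch64.whl"))
```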

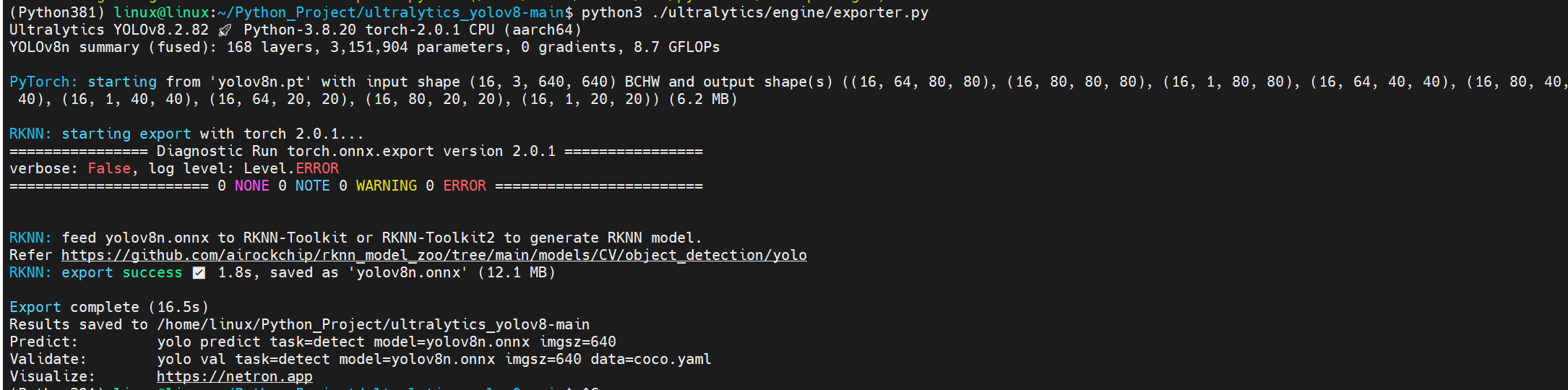

    pip install rknn_toolkit2-1.6.0-cp38-cp38-linux_aarch64.whl

Install ultralytics

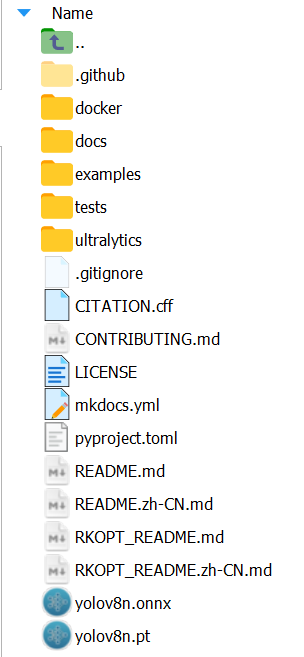

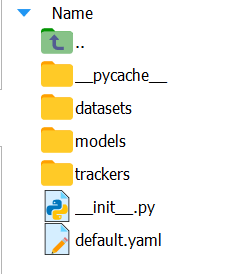

    git clone https://github.com/airockchip/ultralytics_yolov8.git
    cd ultralytics_yolov8
    git checkout 5b7ddd8f821c8f6edb389aa30cfbc88bd903867b

Place the yolov8n.pt model in the ultralytics_yolov8 directory.

In ultralytics/cfg/default.yaml, replace "yolov8m-seg.pt" with "yolov8n.pt".
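
If you prefer to script this edit rather than make it by hand, a plain text replacement is enough. This is only a sketch: `swap_model_name` and `patch_config` are hypothetical helpers, and the path assumes you run the script from the root of the ultralytics_yolov8 checkout.

```python
from pathlib import Path


def swap_model_name(text, old="yolov8m-seg.pt", new="yolov8n.pt"):
    """Return (new_text, count) with every occurrence of old replaced by new."""
    count = text.count(old)
    return text.replace(old, new), count


def patch_config(path="ultralytics/cfg/default.yaml"):
    """Rewrite the config file in place and return how many replacements were made."""
    cfg = Path(path)
    patched, count = swap_model_name(cfg.read_text(encoding="utf-8"))
    if count == 0:
        # Fail loudly instead of silently leaving the old model name in place.
        raise ValueError(f"'yolov8m-seg.pt' not found in {cfg}")
    cfg.write_text(patched, encoding="utf-8")
    return count
```

Calling `patch_config()` once after the checkout is sufficient; a second call raises `ValueError` because the old name is already gone.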

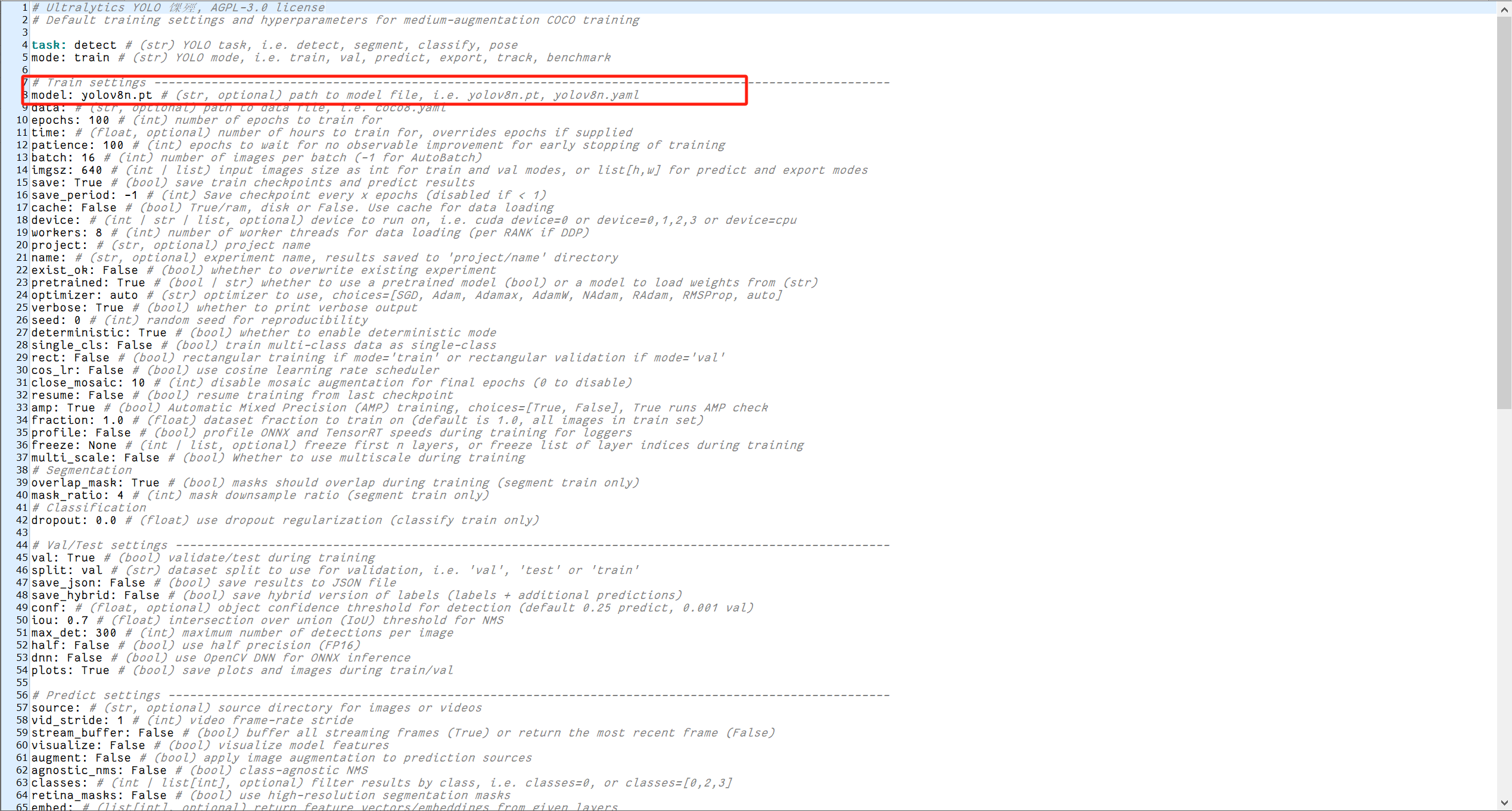

Convert the .pt model to ONNX

    export PYTHONPATH=./
    python3 ./ultralytics/engine/exporter.py

Convert the ONNX model to RKNN

    # First install the base dependencies (mind the versions!)
    pip install numpy==1.23.5 protobuf==3.20.3 onnx==1.13.1 pillow==9.5.0

    # Install RKNN-Toolkit2 (choose the version that matches your hardware)
    # For RK3588:
    pip install rknn-toolkit2==1.6.0 --no-deps
    pip install psutil==5.9.4 requests==2.28.1  # supplementary dependencies

    rm ../rknn_model_zoo/examples/yolov8/model/yolov8n.*
    cp yolov8n.onnx ../rknn_model_zoo/examples/yolov8/model/
    cd ../rknn_model_zoo/examples/yolov8/python/

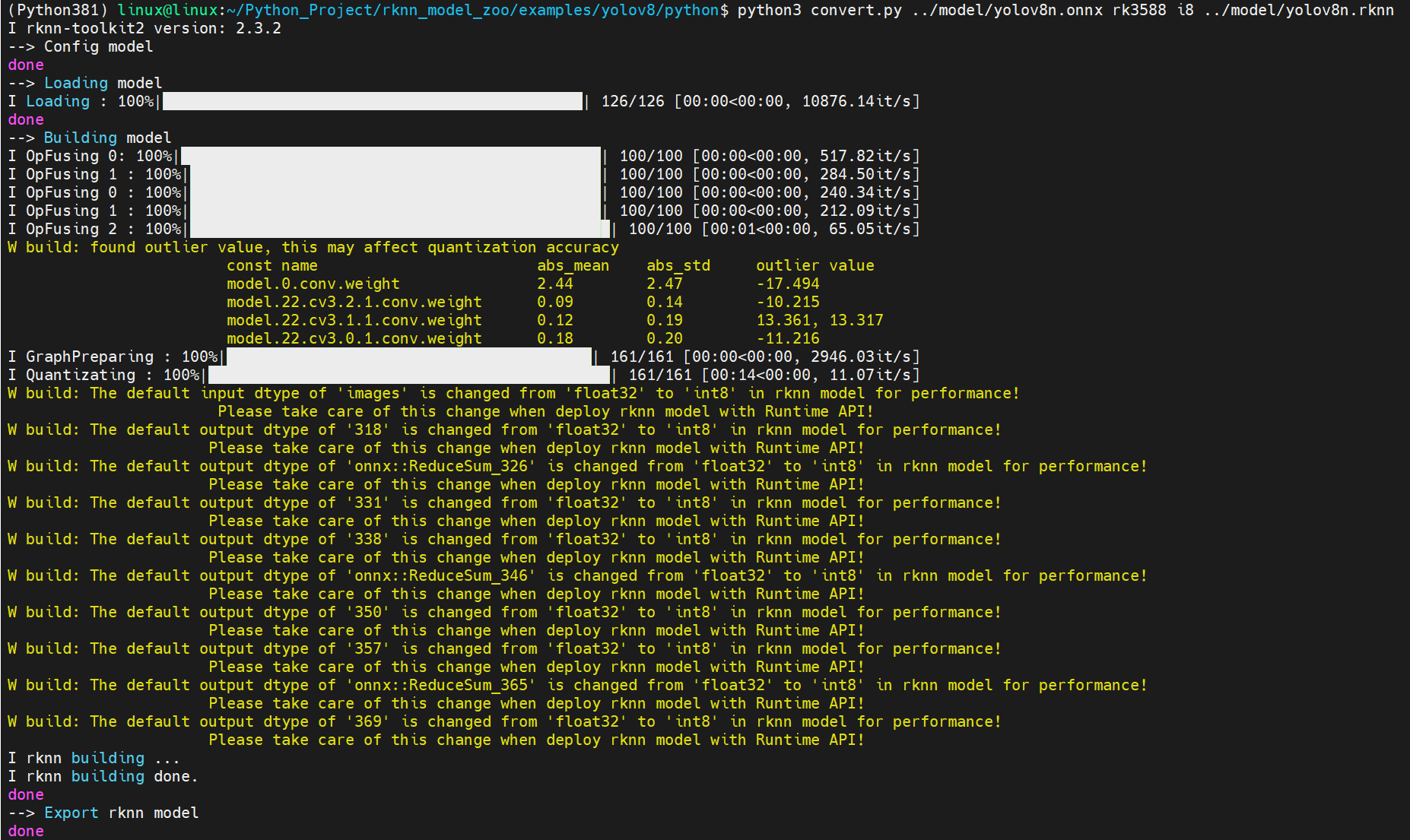

    python3 convert.py ../model/yolov8n.onnx rk3588 i8 ../model/yolov8n.rknn
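
For orientation, the conversion can also be driven directly through the RKNN-Toolkit2 Python API. The sketch below is not the contents of convert.py; it is a minimal illustration assuming the usual `config` / `load_onnx` / `build` / `export_rknn` sequence. The `wants_quantization` helper, the accepted dtype strings other than `i8`, the normalization values, and the `dataset_txt` calibration list are all assumptions to adapt to your setup.

```python
def wants_quantization(dtype):
    """Map a dtype argument to a quantization flag (assumed values: i8, u8, fp)."""
    if dtype in ("i8", "u8"):
        return True
    if dtype == "fp":
        return False
    raise ValueError(f"unsupported dtype: {dtype!r}")


def convert(onnx_path, platform, dtype, out_path, dataset_txt):
    # Imported lazily so the helper above works without the toolkit installed.
    from rknn.api import RKNN

    rknn = RKNN(verbose=False)
    try:
        # Assumed preprocessing: scale 0..255 pixel values to 0..1.
        rknn.config(mean_values=[[0, 0, 0]], std_values=[[255, 255, 255]],
                    target_platform=platform)
        if rknn.load_onnx(model=onnx_path) != 0:
            raise RuntimeError("load_onnx failed")
        # dataset_txt lists calibration images, one path per line (used for i8).
        if rknn.build(do_quantization=wants_quantization(dtype),
                      dataset=dataset_txt) != 0:
            raise RuntimeError("build failed")
        if rknn.export_rknn(out_path) != 0:
            raise RuntimeError("export_rknn failed")
    finally:
        rknn.release()
```

A call such as `convert("../model/yolov8n.onnx", "rk3588", "i8", "../model/yolov8n.rknn", "calib.txt")` would mirror the command above, where `calib.txt` is a hypothetical calibration list.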

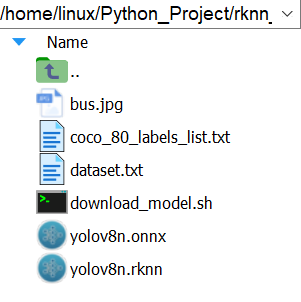

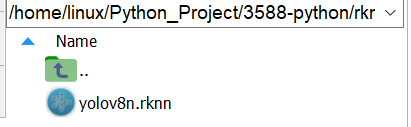

Copy the yolov8n.rknn model generated in the virtual machine to the RK3588 board:

main.py

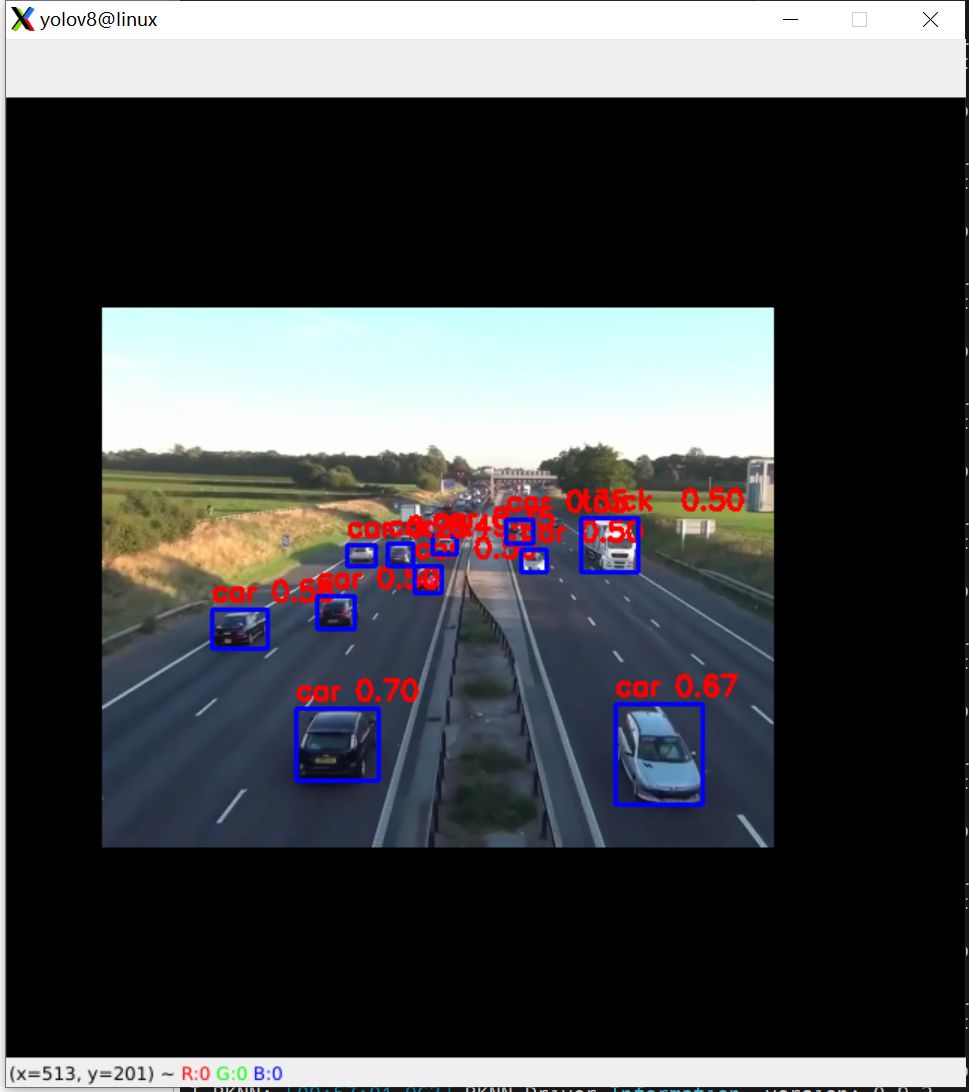

    import cv2
    import time
    from rknnpool import rknnPoolExecutor
    # Image-processing function; adapt it to your own application
    from func import myFunc

    cap = cv2.VideoCapture('./720p60hz.mp4')
    # cap = cv2.VideoCapture(20)
    # cap.set(cv2.CAP_PROP_FRAME_WIDTH, 640)
    # cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 480)
    modelPath = "./rknnModel/yolov8n.rknn"
    # Number of threads; increasing it can raise the frame rate
    TPEs = 10
    # Initialize the RKNN pool
    pool = rknnPoolExecutor(
        rknnModel=modelPath,
        TPEs=TPEs,
        func=myFunc)

    # Submit the initial frames needed for asynchronous processing
    if cap.isOpened():
        for i in range(TPEs + 1):
            ret, frame = cap.read()
            if not ret:
                cap.release()
                del pool
                exit(-1)
            pool.put(frame)

    frames, loopTime, initTime = 0, time.time(), time.time()
    while cap.isOpened():
        frames += 1
        ret, frame = cap.read()
        if not ret:
            break
        # print(frame.shape)
        pool.put(frame)
        frame, flag = pool.get()
        if not flag:
            break
        # print(frame.shape)
        cv2.imshow('yolov8', frame)
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break
        if frames % 30 == 0:
            print("Average frame rate over 30 frames:\t", 30 / (time.time() - loopTime), "fps")
            loopTime = time.time()

    print("Overall average frame rate\t", frames / (time.time() - initTime))
    # Release cap and the RKNN thread pool
    cap.release()
    cv2.destroyAllWindows()
    pool.release()

func.py

    # The following code is adapted from https://github.com/rockchip-linux/rknn-toolkit2/tree/master/examples/onnx/yolov5
    import cv2
    import numpy as np

    OBJ_THRESH, NMS_THRESH, IMG_SIZE = 0.25, 0.45, 640

    CLASSES = ("person", "bicycle", "car", "motorbike ", "aeroplane ", "bus ", "train", "truck ", "boat", "traffic light",
               "fire hydrant", "stop sign ", "parking meter", "bench", "bird", "cat", "dog ", "horse ", "sheep", "cow", "elephant",
               "bear", "zebra ", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite",
               "baseball bat", "baseball glove", "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup", "fork", "knife ",
               "spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza ", "donut", "cake", "chair", "sofa",
               "pottedplant", "bed", "diningtable", "toilet ", "tvmonitor", "laptop ", "mouse ", "remote ", "keyboard ", "cell phone", "microwave ",
               "oven ", "toaster", "sink", "refrigerator ", "book", "clock", "vase", "scissors ", "teddy bear ", "hair drier", "toothbrush ")


    def filter_boxes(boxes, box_confidences, box_class_probs):
        """Filter boxes with object threshold.
        """
        box_confidences = box_confidences.reshape(-1)
        candidate, class_num = box_class_probs.shape

        class_max_score = np.max(box_class_probs, axis=-1)
        classes = np.argmax(box_class_probs, axis=-1)

        _class_pos = np.where(class_max_score * box_confidences >= OBJ_THRESH)
        scores = (class_max_score * box_confidences)[_class_pos]

        boxes = boxes[_class_pos]
        classes = classes[_class_pos]

        return boxes, classes, scores


    def nms_boxes(boxes, scores):
        """Suppress non-maximal boxes.
        # Returns
            keep: ndarray, index of effective boxes.
        """
        x = boxes[:, 0]
        y = boxes[:, 1]
        w = boxes[:, 2] - boxes[:, 0]
        h = boxes[:, 3] - boxes[:, 1]

        areas = w * h
        order = scores.argsort()[::-1]

        keep = []
        while order.size > 0:
            i = order[0]
            keep.append(i)

            xx1 = np.maximum(x[i], x[order[1:]])
            yy1 = np.maximum(y[i], y[order[1:]])
            xx2 = np.minimum(x[i] + w[i], x[order[1:]] + w[order[1:]])
            yy2 = np.minimum(y[i] + h[i], y[order[1:]] + h[order[1:]])

            w1 = np.maximum(0.0, xx2 - xx1 + 0.00001)
            h1 = np.maximum(0.0, yy2 - yy1 + 0.00001)
            inter = w1 * h1

            ovr = inter / (areas[i] + areas[order[1:]] - inter)
            inds = np.where(ovr <= NMS_THRESH)[0]
            order = order[inds + 1]
        keep = np.array(keep)
        return keep


    # def dfl(position):
    #     # Distribution Focal Loss (DFL)
    #     import torch
    #     x = torch.tensor(position)
    #     n,c,h,w = x.shape
    #     p_num = 4
    #     mc = c//p_num
    #     y = x.reshape(n,p_num,mc,h,w)
    #     y = y.softmax(2)
    #     acc_metrix = torch.tensor(range(mc)).float().reshape(1,1,mc,1,1)
    #     y = (y*acc_metrix).sum(2)
    #     return y.numpy()

    # def dfl(position):
    #     # Distribution Focal Loss (DFL)
    #     n, c, h, w = position.shape
    #     p_num = 4
    #     mc = c // p_num
    #     y = position.reshape(n, p_num, mc, h, w)
    #     exp_y = np.exp(y)
    #     y = exp_y / np.sum(exp_y, axis=2, keepdims=True)
    #     acc_metrix = np.arange(mc).reshape(1, 1, mc, 1, 1).astype(float)
    #     y = (y * acc_metrix).sum(2)
    #     return y

    def dfl(position):
        # Distribution Focal Loss (DFL)
        # x = np.array(position)
        n, c, h, w = position.shape
        p_num = 4
        mc = c // p_num
        y = position.reshape(n, p_num, mc, h, w)

        # Vectorized softmax
        e_y = np.exp(y - np.max(y, axis=2, keepdims=True))  # subtract max for numerical stability
        y = e_y / np.sum(e_y, axis=2, keepdims=True)

        acc_metrix = np.arange(mc).reshape(1, 1, mc, 1, 1)
        y = (y * acc_metrix).sum(2)
        return y


    def box_process(position):
        grid_h, grid_w = position.shape[2:4]
        col, row = np.meshgrid(np.arange(0, grid_w), np.arange(0, grid_h))
        col = col.reshape(1, 1, grid_h, grid_w)
        row = row.reshape(1, 1, grid_h, grid_w)
        grid = np.concatenate((col, row), axis=1)
        stride = np.array([IMG_SIZE // grid_h, IMG_SIZE // grid_w]).reshape(1, 2, 1, 1)

        position = dfl(position)
        box_xy = grid + 0.5 - position[:, 0:2, :, :]
        box_xy2 = grid + 0.5 + position[:, 2:4, :, :]
        xyxy = np.concatenate((box_xy * stride, box_xy2 * stride), axis=1)

        return xyxy


    def yolov8_post_process(input_data):
        boxes, scores, classes_conf = [], [], []
        defualt_branch = 3
        pair_per_branch = len(input_data) // defualt_branch
        # The Python version ignores the score_sum output
        for i in range(defualt_branch):
            boxes.append(box_process(input_data[pair_per_branch * i]))
            classes_conf.append(input_data[pair_per_branch * i + 1])
            scores.append(np.ones_like(input_data[pair_per_branch * i + 1][:, :1, :, :], dtype=np.float32))

        def sp_flatten(_in):
            ch = _in.shape[1]
            _in = _in.transpose(0, 2, 3, 1)
            return _in.reshape(-1, ch)

        boxes = [sp_flatten(_v) for _v in boxes]
        classes_conf = [sp_flatten(_v) for _v in classes_conf]
        scores = [sp_flatten(_v) for _v in scores]

        boxes = np.concatenate(boxes)
        classes_conf = np.concatenate(classes_conf)
        scores = np.concatenate(scores)

        # filter according to threshold
        boxes, classes, scores = filter_boxes(boxes, scores, classes_conf)

        # nms
        nboxes, nclasses, nscores = [], [], []
        for c in set(classes):
            inds = np.where(classes == c)
            b = boxes[inds]
            c = classes[inds]
            s = scores[inds]
            keep = nms_boxes(b, s)

            if len(keep) != 0:
                nboxes.append(b[keep])
                nclasses.append(c[keep])
                nscores.append(s[keep])

        if not nclasses and not nscores:
            return None, None, None

        boxes = np.concatenate(nboxes)
        classes = np.concatenate(nclasses)
        scores = np.concatenate(nscores)

        return boxes, classes, scores


    def draw(image, boxes, scores, classes):
        for box, score, cl in zip(boxes, scores, classes):
            # Each box is (x1, y1, x2, y2); the variable names follow the original example
            top, left, right, bottom = box
            # print('class: {}, score: {}'.format(CLASSES[cl], score))
            # print('box coordinate left,top,right,down: [{}, {}, {}, {}]'.format(top, left, right, bottom))
            top = int(top)
            left = int(left)
            # print(cl)
            cv2.rectangle(image, (top, left), (int(right), int(bottom)), (255, 0, 0), 2)
            cv2.putText(image, '{0} {1:.2f}'.format(CLASSES[cl], score),
                        (top, left - 6),
                        cv2.FONT_HERSHEY_SIMPLEX,
                        0.6, (0, 0, 255), 2)


    def letterbox(im, new_shape=(640, 640), color=(0, 0, 0)):
        shape = im.shape[:2]  # current shape [height, width]
        if isinstance(new_shape, int):
            new_shape = (new_shape, new_shape)

        r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])

        ratio = r, r  # width, height ratios
        new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))
        dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1]  # wh padding

        dw /= 2  # divide padding into 2 sides
        dh /= 2

        if shape[::-1] != new_unpad:  # resize
            im = cv2.resize(im, new_unpad, interpolation=cv2.INTER_LINEAR)
        top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))
        left, right = int(round(dw - 0.1)), int(round(dw + 0.1))
        im = cv2.copyMakeBorder(im, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color)  # add border
        return im
        # return im, ratio, (dw, dh)


    def myFunc(rknn_lite, IMG):
        IMG = cv2.cvtColor(IMG, cv2.COLOR_BGR2RGB)
        # Resize while keeping the aspect ratio
        IMG = letterbox(IMG)
        # Forced resize (ignores the aspect ratio)
        # IMG = cv2.resize(IMG, (IMG_SIZE, IMG_SIZE))
        IMG2 = np.expand_dims(IMG, 0)

        outputs = rknn_lite.inference(inputs=[IMG2], data_format=['nhwc'])
        # print("oups1", len(outputs))
        # print("oups2", outputs[0].shape)

        boxes, classes, scores = yolov8_post_process(outputs)

        IMG = cv2.cvtColor(IMG, cv2.COLOR_RGB2BGR)
        if boxes is not None:
            draw(IMG, boxes, scores, classes)
        return IMG


    def myFunc3D(rknn_lite, IMG):
        IMG = cv2.cvtColor(IMG, cv2.COLOR_BGR2RGB)
        # Resize while keeping the aspect ratio
        IMG = letterbox(IMG)
        # Forced resize (ignores the aspect ratio)
        # IMG = cv2.resize(IMG, (IMG_SIZE, IMG_SIZE))
        IMG2 = np.expand_dims(IMG, 0)

        outputs = rknn_lite.inference(inputs=[IMG2], data_format=['nhwc'])
        # print("oups1", len(outputs))
        # print("oups2", outputs[0].shape)

        boxes, classes, scores = yolov8_post_process(outputs)
        return classes
        # IMG = cv2.cvtColor(IMG, cv2.COLOR_RGB2BGR)
        # if boxes is not None:
        #     draw(IMG, boxes, scores, classes)
        # return IMG
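
Note that `myFunc` draws its boxes on the letterboxed 640×640 image, so the coordinates are never mapped back to the source frame. If you want boxes in the original resolution, the scale and padding used by `letterbox` have to be undone. The following is a small sketch of that arithmetic in plain Python; `letterbox_params` and `to_original` are hypothetical helpers that mirror the rounding used in `letterbox` above.

```python
def letterbox_params(src_w, src_h, dst=640):
    """Return (scale, left_pad, top_pad) used to fit the source into a dst x dst canvas."""
    r = min(dst / src_h, dst / src_w)
    new_w, new_h = int(round(src_w * r)), int(round(src_h * r))
    dw, dh = (dst - new_w) / 2, (dst - new_h) / 2  # padding per side
    left, top = int(round(dw - 0.1)), int(round(dh - 0.1))
    return r, left, top


def to_original(box, r, left, top):
    """Map an (x1, y1, x2, y2) box from letterboxed pixels to source-frame pixels."""
    x1, y1, x2, y2 = box
    return ((x1 - left) / r, (y1 - top) / r, (x2 - left) / r, (y2 - top) / r)


if __name__ == "__main__":
    # A 1280x720 frame is scaled by 0.5 and padded by 140 px at the top.
    r, left, top = letterbox_params(1280, 720)
    print(r, left, top)
    print(to_original((100, 240, 200, 340), r, left, top))
```

For a 1280×720 source, the resized image is 640×360, which leaves 140 pixels of padding above and below it.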

rknnpool.py

    from queue import Queue
    # import torch
    from rknnlite.api import RKNNLite
    from concurrent.futures import ThreadPoolExecutor, as_completed


    def initRKNN(rknnModel="./rknnModel/yolov5n.rknn", id=0):
        rknn_lite = RKNNLite()
        ret = rknn_lite.load_rknn(rknnModel)
        if ret != 0:
            print("Load RKNN rknnModel failed")
            exit(ret)
        if id == 0:
            ret = rknn_lite.init_runtime(core_mask=RKNNLite.NPU_CORE_0)
        elif id == 1:
            ret = rknn_lite.init_runtime(core_mask=RKNNLite.NPU_CORE_1)
        elif id == 2:
            ret = rknn_lite.init_runtime(core_mask=RKNNLite.NPU_CORE_2)
        elif id == -1:
            ret = rknn_lite.init_runtime(core_mask=RKNNLite.NPU_CORE_0_1_2)
        else:
            ret = rknn_lite.init_runtime()
        if ret != 0:
            print("Init runtime environment failed")
            exit(ret)
        print(rknnModel, "\t\tdone")
        return rknn_lite


    def initRKNNs(rknnModel="./rknnModel/yolov5n.rknn", TPEs=1):
        rknn_list = []
        for i in range(TPEs):
            rknn_list.append(initRKNN(rknnModel, i % 3))
        return rknn_list


    class rknnPoolExecutor():
        def __init__(self, rknnModel, TPEs, func):
            self.TPEs = TPEs
            self.queue = Queue()
            self.rknnPool = initRKNNs(rknnModel, TPEs)
            self.pool = ThreadPoolExecutor(max_workers=TPEs)
            self.func = func
            self.num = 0

        def put(self, frame):
            self.queue.put(self.pool.submit(
                self.func, self.rknnPool[self.num % self.TPEs], frame))
            self.num += 1

        def get(self):
            if self.queue.empty():
                return None, False
            fut = self.queue.get()
            return fut.result(), True

        def release(self):
            self.pool.shutdown()
            for rknn_lite in self.rknnPool:
                rknn_lite.release()
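
The put/get pattern of `rknnPoolExecutor` can be tried out without an NPU. The sketch below replaces the RKNNLite instances with plain integers and the inference function with a squaring lambda; `RoundRobinPool` and `run_pipeline` are hypothetical names used only for this illustration. It shows that work is assigned round-robin and that results come back in submission order, because futures are queued in the order they were submitted.

```python
from concurrent.futures import ThreadPoolExecutor
from queue import Queue


class RoundRobinPool:
    """Same put/get pattern as rknnPoolExecutor, with plain objects as workers."""

    def __init__(self, workers, func):
        self.workers = workers
        self.func = func
        self.pool = ThreadPoolExecutor(max_workers=len(workers))
        self.pending = Queue()  # futures, in submission order
        self.num = 0

    def put(self, item):
        worker = self.workers[self.num % len(self.workers)]
        self.pending.put(self.pool.submit(self.func, worker, item))
        self.num += 1

    def get(self):
        if self.pending.empty():
            return None, False
        return self.pending.get().result(), True

    def release(self):
        self.pool.shutdown()


def run_pipeline(items, n_workers=3):
    """Keep several items in flight, then drain; returns results in input order."""
    pool = RoundRobinPool(list(range(n_workers)), lambda worker, x: (worker, x * x))
    results = []
    for item in items:
        pool.put(item)
        # Start collecting only once more than n_workers items have been submitted.
        if pool.num > n_workers:
            results.append(pool.get()[0])
    while True:  # drain whatever is still in flight
        value, ok = pool.get()
        if not ok:
            break
        results.append(value)
    pool.release()
    return results


if __name__ == "__main__":
    print(run_pipeline([1, 2, 3, 4, 5]))
```

Each result pairs the worker index with the computed value, which makes the round-robin assignment visible in the output.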

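IV. Converting and Deploying a Custom Model

The conversion and deployment steps are the same as above, except that the pretrained model is replaced with your own: export it to ONNX following the same steps, then convert the ONNX model to RKNN and deploy it. You also need to modify func.py to match your own data, making sure that CLASSES is consistent with the labels used when the model was trained.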
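
One way to keep CLASSES in sync is to build it from the label list used for training and to check its length against the number of class channels the model produces (in func.py, that is the size of dimension 1 of each class-confidence output). The sketch below assumes a text file with one label per line; `load_classes` and `check_class_count` are hypothetical helpers.

```python
def load_classes(lines):
    """Turn label lines (one class name per line) into a CLASSES tuple."""
    return tuple(line.strip() for line in lines if line.strip())


def check_class_count(classes, model_class_count):
    """Raise if the label list does not match the model's class channels."""
    if len(classes) != model_class_count:
        raise ValueError(
            f"{len(classes)} labels but the model outputs {model_class_count} classes")
    return classes


if __name__ == "__main__":
    # Hypothetical labels; in practice read them from your own labels file.
    CLASSES = check_class_count(load_classes(["helmet\n", "vest\n", "\n"]), 2)
    print(CLASSES)
```

Failing early on a count mismatch avoids an `IndexError` or mislabeled boxes later in `draw`.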