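In my previous notes I finished G1 teleoperation with Apple Vision Pro. The next step is to convert the collected dataset to the LeRobot format, then train and deploy.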

Related open-source repositories:

| Unitree Robotics repositories | Link |
|---|---|
| Unitree Datasets | unitree datasets |
| AVP Teleoperate | avp_teleoperate |


1 📦 Environment Setup

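The goal here is to use the open-source LeRobot framework to train and test on data collected with Unitree robots, so the LeRobot dependencies must be installed first. The steps are below; you can also follow the official LeRobot installation guide: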

```bash
# Clone the source code
git clone --recurse-submodules https://github.com/unitreerobotics/unitree_IL_lerobot.git

# Enter the cloned repo (if it was cloned without submodules, initialize them here)
cd unitree_IL_lerobot
git submodule update --init --recursive

# Create the conda environment
conda create -y -n unitree_lerobot python=3.10
conda activate unitree_lerobot

# Install LeRobot
cd unitree_lerobot
cd lerobot && pip install -e .

# Install unitree_lerobot
cd ..
cd .. && pip install -e .
```

2 ⚙️ Data Collection and Conversion

2.1 🖼️ Dataset Loading Test

To load an already-recorded dataset, you can pull unitreerobotics/G1_ToastedBread_Dataset from Hugging Face. By default it is downloaded to ~/.cache/huggingface/lerobot/unitreerobotics. To load local data instead, change the root parameter.
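LeRobotDataset keeps every episode in one flat sequence of frames, and the loading code that follows uses episode_data_index to pick out one episode's slice. Because that loop iterates over range(from_idx, to_idx), the "to" entry is exclusive. The bookkeeping can be sketched with plain lists; build_episode_data_index is a hypothetical helper (the real attribute holds tensors, not lists) and the episode lengths are made up:

```python
from itertools import accumulate


def build_episode_data_index(episode_lengths: list[int]) -> dict[str, list[int]]:
    """Return per-episode [from, to) ranges into one flat frame sequence.

    Hypothetical helper: it mimics the layout of LeRobotDataset.episode_data_index
    with plain lists instead of tensors.
    """
    ends = list(accumulate(episode_lengths))  # exclusive end index of each episode
    starts = [end - length for end, length in zip(ends, episode_lengths)]
    return {"from": starts, "to": ends}


index = build_episode_data_index([120, 95, 210])  # made-up episode lengths
episode_index = 1
from_idx, to_idx = index["from"][episode_index], index["to"][episode_index]
print(from_idx, to_idx, to_idx - from_idx)  # 120 215 95
```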

from lerobot.common.datasets.lerobot_dataset import LeRobotDataset

import tqdm

episode_index = 1

dataset = LeRobotDataset(repo_id="unitreerobotics/G1_ToastedBread_Dataset")

from_idx = dataset.episode_data_index["from"][episode_index].item()

to_idx = dataset.episode_data_index["to"][episode_index].item()

for step_idx in tqdm.tqdm(range(from_idx, to_idx)):

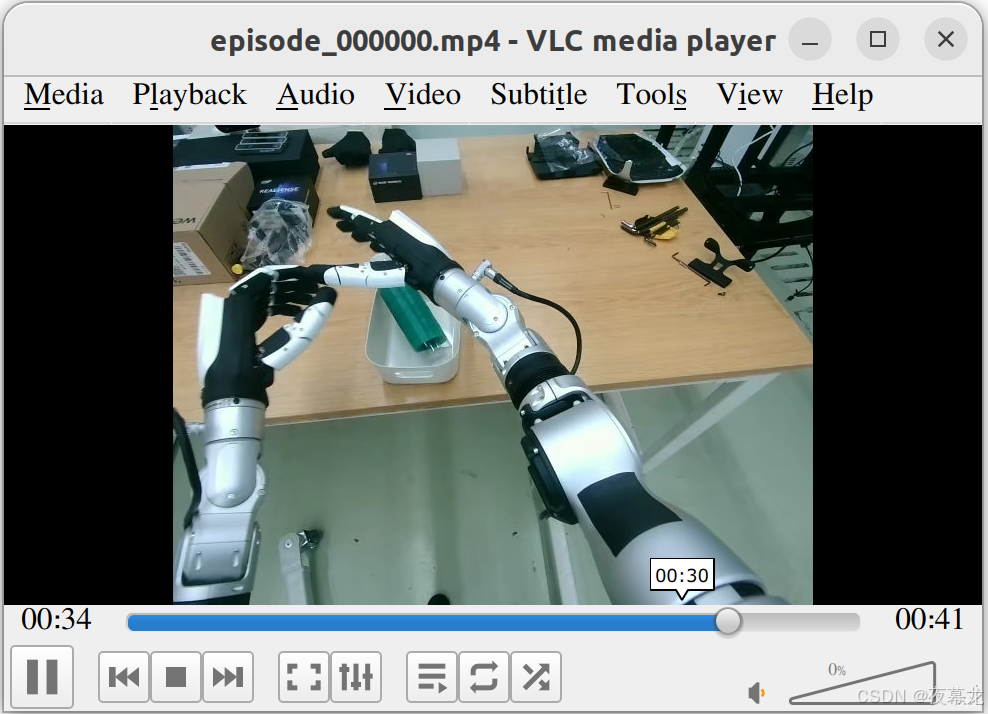

step = dataset[step_idx]可视化(使用的 lerobot 架构,可以参考相关笔记)

```bash
cd unitree_lerobot/lerobot

python lerobot/scripts/visualize_dataset.py \
    --repo-id unitreerobotics/G1_ToastedBread_Dataset \
    --episode-index 0
```

2.2 🔨 Data Collection

Use the open-source teleoperation project xr_teleoperate (v1.0 at the time of writing) to collect Unitree G1 motion data and produce the corresponding data files:

```bash
python teleop_hand_and_arm.py --xr-mode=hand --arm=G1_29 --ee=inspire1 --record --motion
```

2.3 🛠️ Data Conversion

The collected data is stored as JSON. Assuming it lives in $HOME/datasets/task_name, the layout is:

```text
datasets/                   # dataset folder
└── task_name/              # task name
    ├── episode_0001        # first trajectory
    │   ├── audios/         # audio
    │   ├── colors/         # color images
    │   ├── depths/         # depth images
    │   └── data.json       # states and actions
    ├── episode_0002
    ├── episode_...
    └── episode_xxx
```
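Under this layout, an episode is a top-level folder under task_name that directly contains data.json; subfolders such as audios/ must never be picked up as episodes. A minimal stdlib sketch of that discovery rule (the updated conversion script applies the same rule with glob in _init_paths; find_episode_dirs is a hypothetical name and the demo tree is synthetic):

```python
import tempfile
from pathlib import Path


def find_episode_dirs(raw_dir: Path, json_name: str = "data.json") -> list[Path]:
    """Return top-level folders that directly contain data.json, sorted by name.

    Only the immediate children of raw_dir are inspected, so nested folders such as
    audios/ or colors/ can never be mistaken for episodes.
    """
    return sorted(
        p for p in Path(raw_dir).iterdir()
        if p.is_dir() and (p / json_name).is_file()
    )


# Demo on a throwaway tree shaped like the layout above
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp) / "task_name"
    for name in ("episode_0002", "episode_0001"):
        (root / name / "colors").mkdir(parents=True)
        (root / name / "data.json").write_text("{}")
    (root / "notes").mkdir()  # no data.json, so it is ignored
    found = [p.name for p in find_episode_dirs(root)]

print(found)  # ['episode_0001', 'episode_0002']
```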

2.3.1 🔀 Sorting and Renaming

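When generating a LeRobot dataset, the episode folders should preferably be numbered starting from 0 with no gaps. Sort and rename them with the repo's sort_and_rename_folders.py script.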
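Conceptually, the sort-and-rename step can be sketched as follows. This is a hypothetical illustration, not the contents of sort_and_rename_folders.py. It assumes zero-padded names (so lexicographic order equals numeric order) and episode_0000-style target names, and it renames through temporary names so a new name never collides with a folder that has not been moved yet:

```python
import tempfile
from pathlib import Path


def sort_and_rename(data_dir: Path, prefix: str = "episode_") -> list[str]:
    """Rename prefix* folders to prefix0000, prefix0001, ... keeping their sorted order."""
    episodes = sorted(
        p for p in Path(data_dir).iterdir()
        if p.is_dir() and p.name.startswith(prefix)
    )
    # Phase 1: move every episode to a temporary name to avoid name clashes
    staged = []
    for i, path in enumerate(episodes):
        tmp = path.with_name(f"__tmp_{i}")
        path.rename(tmp)
        staged.append(tmp)
    # Phase 2: assign the final contiguous, zero-based names
    new_names = []
    for i, tmp in enumerate(staged):
        target = tmp.with_name(f"{prefix}{i:04d}")
        tmp.rename(target)
        new_names.append(target.name)
    return new_names


with tempfile.TemporaryDirectory() as tmp_dir:
    for name in ("episode_0003", "episode_0001", "episode_0007"):
        (Path(tmp_dir) / name).mkdir()
    renamed = sort_and_rename(Path(tmp_dir))

print(renamed)  # ['episode_0000', 'episode_0001', 'episode_0002']
```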

```bash
python unitree_lerobot/utils/sort_and_rename_folders.py \
    --data_dir $HOME/datasets/task_name
```

2.3.2 🔄 Conversion

Convert the JSON format to the LeRobot format. You can define your own robot_type in ROBOT_CONFIGS.

This step is where most of the problems came up:

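1. Unitree's constants.py does not support Inspire hands, so the configuration has to be added manually. In addition, since I use a stereo (binocular) camera rather than a D435i, the final version is: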

```python
# Added to unitree_lerobot/utils/constants.py
G1_INSPIRE_FACE_STEREO_CONFIG = RobotConfig(
    motors=[
        # Left arm: 7
        "kLeftShoulderPitch", "kLeftShoulderRoll", "kLeftShoulderYaw",
        "kLeftElbow", "kLeftWristRoll", "kLeftWristPitch", "kLeftWristYaw",
        # Right arm: 7
        "kRightShoulderPitch", "kRightShoulderRoll", "kRightShoulderYaw",
        "kRightElbow", "kRightWristRoll", "kRightWristPitch", "kRightWristYaw",
        # Inspire left end effector: 6 (the left_ee dimensions in the JSON)
        "kLeftEE0", "kLeftEE1", "kLeftEE2", "kLeftEE3", "kLeftEE4", "kLeftEE5",
        # Inspire right end effector: 6 (right_ee)
        "kRightEE0", "kRightEE1", "kRightEE2", "kRightEE3", "kRightEE4", "kRightEE5",
    ],
    cameras=[
        "cam_face_left",
        "cam_face_right",
    ],
    # color_0 -> left eye; color_1 -> right eye
    camera_to_image_key={
        "color_0": "cam_face_left",
        "color_1": "cam_face_right",
    },
    json_state_data_name=["left_arm", "right_arm", "left_ee", "right_ee"],
    json_action_data_name=["left_arm", "right_arm", "left_ee", "right_ee"],
)

ROBOT_CONFIGS.update({
    "Unitree_G1_Inspire_FaceStereo": G1_INSPIRE_FACE_STEREO_CONFIG,
})
```

2. Because the stock conversion script has a number of small bugs, the final fixed version, convert_unitree_json_to_lerobot_update.py, includes the following changes:

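- Fixed FileNotFoundError: Missing data.json: /home/yejiangchen/Desktop/Codes/unitree/unitree_IL_lerobot/datasets/bottle/episode_0001/audios/data.json (only top-level folders under raw-dir that contain data.json are now treated as episodes)
- Camera resolution is probed automatically and used for features.shape (avoiding the mismatch caused by a hard-coded 480×640)
- Explicit HWC→CHW image conversion
- Frame-count consistency checks (state/action/every camera)
- Dimension consistency checks (the concatenated vector length must equal the number of motors)
- Fixed a small bug in the action_dim computation
- Robust handling when index is None
- New --overwrite flag (off by default) so existing local data is not deleted by accident
- Support for the face stereo configuration (Unitree_G1_Inspire_FaceStereo added to ROBOT_CONFIGS)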
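The dimension and missing-frame checks in the list above can be illustrated on data.json-style frames without LeRobot. A sketch under stated assumptions: the states/actions → part → qpos nesting and the colors relative paths follow what the script below reads, the 7 + 7 + 6 + 6 = 26 layout comes from the stereo config, and the helper names, image file names, and values are made up:

```python
from typing import Dict, List, Sequence

PARTS = ("left_arm", "right_arm", "left_ee", "right_ee")  # json_*_data_name in the config
CAMERA_KEYS = ("color_0", "color_1")                       # keys of camera_to_image_key
EXPECTED_DIM = 7 + 7 + 6 + 6                               # len(motors) == 26


def concat_qpos(frame: Dict, key: str, parts: Sequence[str] = PARTS) -> List[float]:
    """Concatenate frame[key][part]["qpos"] in `parts` order, skipping missing parts."""
    vector: List[float] = []
    for part in parts:
        entry = frame.get(key, {}).get(part) or {}
        vector.extend(float(x) for x in (entry.get("qpos") or []))
    return vector


def check_episode(frames: List[Dict], expected_dim: int = EXPECTED_DIM) -> None:
    """Raise ValueError on the first frame with a wrong vector size or a missing image."""
    for t, frame in enumerate(frames):
        for key in ("states", "actions"):
            dim = len(concat_qpos(frame, key))
            if dim != expected_dim:
                raise ValueError(f"frame {t}: {key} dim {dim} != expected {expected_dim}")
        for cam in CAMERA_KEYS:
            if not frame.get("colors", {}).get(cam):
                raise ValueError(f"frame {t}: camera '{cam}' image is missing")


def make_frame(n_arm: int = 7, n_ee: int = 6) -> Dict:
    """Build one synthetic data.json-style frame (made-up values and file names)."""
    sizes = {"left_arm": n_arm, "right_arm": n_arm, "left_ee": n_ee, "right_ee": n_ee}
    block = {part: {"qpos": [0.0] * n} for part, n in sizes.items()}
    colors = {cam: f"colors/000000_{cam}.jpg" for cam in CAMERA_KEYS}
    return {"states": block, "actions": block, "colors": colors}


check_episode([make_frame(), make_frame()])  # consistent: no exception
try:
    check_episode([make_frame(n_ee=12)])
except ValueError as err:
    print(err)  # frame 0: states dim 38 != expected 26
```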

The updated script:

```python
# -*- coding: utf-8 -*-
"""
Script: Json to LeRobot (with stereo-face support, auto-shape probe, CHW, and consistency checks)

Usage:
    python unitree_lerobot/utils/convert_unitree_json_to_lerobot_update.py \
        --raw-dir $HOME/datasets/g1_grabcube_double_hand \
        --repo-id your_name/g1_grabcube_double_hand \
        --robot_type Unitree_G1_Inspire_FaceStereo \
        --push_to_hub \
        --overwrite
"""

import os
import cv2
import tqdm
import tyro
import json
import glob
import dataclasses
import shutil
import numpy as np
from pathlib import Path
from collections import defaultdict
from typing import Literal, List, Dict, Optional

from lerobot.common.constants import HF_LEROBOT_HOME
from lerobot.common.datasets.lerobot_dataset import LeRobotDataset
from unitree_lerobot.utils.constants import ROBOT_CONFIGS


@dataclasses.dataclass(frozen=True)
class DatasetConfig:
    use_videos: bool = True
    tolerance_s: float = 0.0001
    image_writer_processes: int = 10
    image_writer_threads: int = 5
    video_backend: str | None = None


DEFAULT_DATASET_CONFIG = DatasetConfig()


class JsonDataset:
    def __init__(self, data_dirs: Path, robot_type: str) -> None:
        """
        Initialize the dataset for loading and processing JSON episodes.
        """
        assert data_dirs is not None, "Data directory cannot be None"
        assert robot_type in ROBOT_CONFIGS, (
            f"Unknown robot_type='{robot_type}'. "
            f"Please add it to ROBOT_CONFIGS in unitree_lerobot/utils/constants.py. "
            f"Available: {list(ROBOT_CONFIGS.keys())}"
        )
        self.data_dirs = str(data_dirs)
        self.json_file = 'data.json'

        # Initialize paths and cache
        self._init_paths()
        self.json_state_data_name = ROBOT_CONFIGS[robot_type].json_state_data_name
        self.json_action_data_name = ROBOT_CONFIGS[robot_type].json_action_data_name
        self.camera_to_image_key = ROBOT_CONFIGS[robot_type].camera_to_image_key
        self._init_cache()

    def _init_paths(self) -> None:
        """Initialize episode paths: only top-level directories under raw-dir that contain data.json."""
        self.episode_paths = []
        for path in glob.glob(os.path.join(self.data_dirs, '*')):
            if os.path.isdir(path) and os.path.isfile(os.path.join(path, self.json_file)):
                self.episode_paths.append(path)
        self.episode_paths = sorted(self.episode_paths)
        self.episode_ids = list(range(len(self.episode_paths)))
        print(f"Found {len(self.episode_paths)} valid episodes under {self.data_dirs}.")

    def __len__(self) -> int:
        return len(self.episode_paths)

    def _init_cache(self) -> List[Dict]:
        """Load episodes with data.json; skip invalid ones and rebuild episode_paths."""
        self.episodes_data_cached = []
        episode_paths_new = []
        for episode_path in tqdm.tqdm(self.episode_paths, desc="Loading Cache Json"):
            json_path = os.path.join(episode_path, self.json_file)
            if not os.path.isfile(json_path):
                print(f"⚠️ Skip (no {self.json_file}): {episode_path}")
                continue
            try:
                with open(json_path, 'r', encoding='utf-8') as f:
                    data = json.load(f)
                self.episodes_data_cached.append(data)
                episode_paths_new.append(episode_path)
            except Exception as e:
                print(f"❌ Failed to load {json_path}: {e}")
                continue
        self.episode_paths = episode_paths_new
        print(f"==> Cached {len(self.episodes_data_cached)} episodes")
        return self.episodes_data_cached

    @staticmethod
    def _concat_qpos(sample_data: Dict, key: str, parts: List[str]) -> np.ndarray:
        """
        Concatenate qpos from multiple parts in order.
        """
        data_array = np.array([], dtype=np.float32)
        for part in parts:
            if part in sample_data.get(key, {}) and sample_data[key][part] is not None:
                qpos = sample_data[key][part].get('qpos', [])
                if qpos is None:
                    qpos = []
                qpos = np.asarray(qpos, dtype=np.float32)
                if qpos.size > 0:
                    data_array = np.concatenate([data_array, qpos])
        return data_array

    def _extract_data(self, episode_data: Dict, key: str, parts: List[str]) -> np.ndarray:
        """
        Extract stacked states or actions: shape [T, D].
        """
        result = []
        for sample_data in episode_data['data']:
            data_array = self._concat_qpos(sample_data, key, parts)
            result.append(data_array)
        if not result:
            return np.zeros((0, 0), dtype=np.float32)
        return np.stack(result, axis=0)

    def _parse_images(self, episode_path: str, episode_data) -> Dict[str, List[np.ndarray]]:
        """
        Load images for non-depth camera keys and map to standardized names.
        Returns dict: {image_key: [HWC RGB arrays]}
        """
        images: Dict[str, List[np.ndarray]] = defaultdict(list)

        # find available color keys from the first frame
        color_keys = list(episode_data["data"][0].get('colors', {}).keys())
        # exclude anything with 'depth' in key just in case
        cameras = [key for key in color_keys if "depth" not in key.lower()]

        for camera in cameras:
            image_key = self.camera_to_image_key.get(camera)
            if image_key is None:
                # camera present in JSON but no mapping -> skip silently (or raise if you prefer strict)
                continue
            for sample_data in episode_data['data']:
                relative_path = sample_data.get('colors', {}).get(camera)
                if not relative_path:
                    images[image_key].append(None)  # keep alignment; will validate later
                    continue
                image_path = os.path.join(episode_path, relative_path)
                if not os.path.exists(image_path):
                    raise FileNotFoundError(f"Image path does not exist: {image_path}")
                img_bgr = cv2.imread(image_path, cv2.IMREAD_COLOR)
                if img_bgr is None:
                    raise RuntimeError(f"Failed to read image: {image_path}")
                img_rgb = cv2.cvtColor(img_bgr, cv2.COLOR_BGR2RGB)
                images[image_key].append(img_rgb)
        return images

    def get_item(self, index: Optional[int] = None) -> Dict:
        """
        Get a single episode bundle with state/action/images/task/cfg.
        """
        if index is None:
            index = np.random.randint(0, len(self.episode_paths))
        file_path = self.episode_paths[index]
        episode_data = self.episodes_data_cached[index]

        # Extract state/action
        action = self._extract_data(episode_data, 'actions', self.json_action_data_name)
        state = self._extract_data(episode_data, 'states', self.json_state_data_name)

        def dim_of(arr: np.ndarray) -> int:
            if arr.ndim == 2:
                return arr.shape[1]
            if arr.ndim == 1:
                return arr.shape[0]
            raise ValueError(f"Unexpected array shape: {arr.shape}")

        episode_length = len(state)
        state_dim = dim_of(state) if episode_length > 0 else 0
        action_dim = dim_of(action) if episode_length > 0 else 0

        task = episode_data.get('text', {}).get('goal', "")
        cameras = self._parse_images(file_path, episode_data)

        # find any valid image to get H/W (fallback if needed)
        cam_height = cam_width = None
        for imgs in cameras.values():
            for img in imgs:
                if img is not None:
                    cam_height, cam_width = img.shape[:2]
                    break
            if cam_height is not None:
                break

        data_cfg = {
            'camera_names': list(cameras.keys()),
            'cam_height': cam_height,
            'cam_width': cam_width,
            'state_dim': state_dim,
            'action_dim': action_dim,
        }

        return {
            'episode_index': index,
            'episode_length': episode_length,
            'state': state,
            'action': action,
            'cameras': cameras,
            'task': task,
            'data_cfg': data_cfg
        }


def probe_camera_shapes(raw_dir: Path, robot_type: str) -> Dict[str, tuple[int, int]]:
    """
    Inspect the first episode to learn each camera's (H, W).
    Returns {camera_name: (H, W)}
    """
    jd = JsonDataset(raw_dir, robot_type)
    ep = jd.get_item(0)
    cam_shapes: Dict[str, tuple[int, int]] = {}
    for cam, imgs in ep["cameras"].items():
        for img in imgs:
            if img is not None:
                h, w = img.shape[:2]
                cam_shapes[cam] = (h, w)
                break
    return cam_shapes


def create_empty_dataset(
    repo_id: str,
    robot_type: str,
    mode: Literal["video", "image"] = "video",
    *,
    has_velocity: bool = False,
    has_effort: bool = False,
    dataset_config: DatasetConfig = DEFAULT_DATASET_CONFIG,
    cam_shapes: Optional[Dict[str, tuple[int, int]]] = None,
    overwrite: bool = False,
) -> LeRobotDataset:
    if not overwrite and Path(HF_LEROBOT_HOME / repo_id).exists():
        raise FileExistsError(
            f"Local dataset already exists: {HF_LEROBOT_HOME / repo_id}\n"
            f"Use --overwrite to remove it."
        )
    if overwrite and Path(HF_LEROBOT_HOME / repo_id).exists():
        shutil.rmtree(HF_LEROBOT_HOME / repo_id)

    motors = ROBOT_CONFIGS[robot_type].motors
    cameras = ROBOT_CONFIGS[robot_type].cameras

    features: Dict[str, Dict] = {
        "observation.state": {
            "dtype": "float32",
            "shape": (len(motors),),
            "names": [motors],
        },
        "action": {
            "dtype": "float32",
            "shape": (len(motors),),
            "names": [motors],
        },
    }

    if has_velocity:
        features["observation.velocity"] = {
            "dtype": "float32",
            "shape": (len(motors),),
            "names": [motors],
        }

    if has_effort:
        features["observation.effort"] = {
            "dtype": "float32",
            "shape": (len(motors),),
            "names": [motors],
        }

    # Set image features using probed (H, W). Store as CHW (3, H, W).
    for cam in cameras:
        if cam_shapes and cam in cam_shapes:
            h, w = cam_shapes[cam]
        else:
            h, w = 480, 640  # fallback
        features[f"observation.images.{cam}"] = {
            "dtype": mode,
            "shape": (3, h, w),
            "names": ["channels", "height", "width"],
        }

    return LeRobotDataset.create(
        repo_id=repo_id,
        fps=30,
        robot_type=robot_type,
        features=features,
        use_videos=dataset_config.use_videos,
        tolerance_s=dataset_config.tolerance_s,
        image_writer_processes=dataset_config.image_writer_processes,
        image_writer_threads=dataset_config.image_writer_threads,
        video_backend=dataset_config.video_backend,
    )


def _validate_episode_consistency(
    motors: List[str],
    state: np.ndarray,
    action: np.ndarray,
    cameras: Dict[str, List[np.ndarray]],
) -> None:
    """
    Ensure frame lengths match and vector dims equal len(motors).
    """
    T = len(state)
    if len(action) != T:
        raise ValueError(f"state/action length mismatch: {len(state)} vs {len(action)}")
    for cam, imgs in cameras.items():
        if len(imgs) != T:
            raise ValueError(f"camera '{cam}' length mismatch: {len(imgs)} vs {T}")
        for i, im in enumerate(imgs):
            if im is None:
                raise ValueError(f"camera '{cam}' frame {i} is missing (None)")

    D_expected = len(motors)
    if state.ndim != 2 or action.ndim != 2:
        raise ValueError(f"state/action must be 2D arrays, got {state.shape} and {action.shape}")
    if state.shape[1] != D_expected:
        raise ValueError(f"state dim {state.shape[1]} != expected {D_expected}")
    if action.shape[1] != D_expected:
        raise ValueError(f"action dim {action.shape[1]} != expected {D_expected}")


def populate_dataset(
    dataset: LeRobotDataset,
    raw_dir: Path,
    robot_type: str,
) -> LeRobotDataset:
    json_dataset = JsonDataset(raw_dir, robot_type)
    motors = ROBOT_CONFIGS[robot_type].motors

    for i in tqdm.tqdm(range(len(json_dataset)), desc="Populating LeRobotDataset"):
        episode = json_dataset.get_item(i)
        state = episode["state"]
        action = episode["action"]
        cameras = episode["cameras"]
        task = episode["task"]
        episode_length = episode["episode_length"]

        # Consistency checks
        _validate_episode_consistency(motors, state, action, cameras)

        for t in range(episode_length):
            frame = {
                "observation.state": state[t],
                "action": action[t],
                "task": task
            }
            # HWC -> CHW explicitly
            for camera, img_array in cameras.items():
                img_hwc = img_array[t]  # H, W, C (RGB)
                img_chw = np.transpose(img_hwc, (2, 0, 1))  # C, H, W
                frame[f"observation.images.{camera}"] = img_chw
            dataset.add_frame(frame)

        dataset.save_episode()

    return dataset


def json_to_lerobot(
    raw_dir: Path,
    repo_id: str,
    robot_type: str,  # e.g., Unitree_Z1_Dual, Unitree_G1_Gripper, Unitree_G1_Inspire_FaceStereo
    *,
    push_to_hub: bool = False,
    mode: Literal["video", "image"] = "video",
    dataset_config: DatasetConfig = DEFAULT_DATASET_CONFIG,
    overwrite: bool = False,
):
    """
    Convert a JSON dataset into a LeRobotDataset (local), and optionally push to HF Hub.
    """
    # Probe image sizes from the first episode
    cam_shapes = probe_camera_shapes(raw_dir, robot_type)

    dataset = create_empty_dataset(
        repo_id=repo_id,
        robot_type=robot_type,
        mode=mode,
        has_effort=False,
        has_velocity=False,
        dataset_config=dataset_config,
        cam_shapes=cam_shapes,
        overwrite=overwrite,
    )
    dataset = populate_dataset(
        dataset,
        raw_dir,
        robot_type=robot_type,
    )

    if push_to_hub:
        dataset.push_to_hub(upload_large_folder=True)


def local_push_to_hub(
    repo_id: str,
    root_path: Path,
):
    dataset = LeRobotDataset(repo_id=repo_id, root=root_path)
    dataset.push_to_hub(upload_large_folder=True)


if __name__ == "__main__":
    tyro.cli(json_to_lerobot)
```

3. Fixing the following error during conversion:

```text
subprocess.CalledProcessError: Command '['ffmpeg', '-f', 'image2', '-r', '30', '-i', '/home/yejiangchen/.cache/huggingface/lerobot/yejiangchen/g1_inspire_face_stereo/images/observation.images.cam_face_left/episode_000000/frame_%06d.png', '-vcodec', 'libsvtav1', '-pix_fmt', 'yuv420p', '-g', '2', '-crf', '30', '-loglevel', 'error', '-y', '/home/yejiangchen/.cache/huggingface/lerobot/yejiangchen/g1_inspire_face_stereo/videos/chunk-000/observation.images.cam_face_left/episode_000000.mp4']' returned non-zero exit status 1.
```

This happens because the installed ffmpeg was not built with the libsvtav1 encoder, so packing the image frames into a video fails.
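Before reinstalling anything, the diagnosis can be confirmed from Python, for example to fail fast before a long conversion run. A minimal sketch, assuming the usual ffmpeg -encoders row format (capability flags, encoder name, description). It only inspects whichever ffmpeg comes first on PATH, which is the one the failing command above invokes as plain 'ffmpeg':

```python
import shutil
import subprocess


def lists_encoder(encoders_output: str, name: str) -> bool:
    """True if `ffmpeg -encoders` output has a row whose encoder name equals `name`."""
    for line in encoders_output.splitlines():
        fields = line.split()
        # Encoder rows look like " V....D libsvtav1  SVT-AV1 ...": flags, name, description
        if len(fields) >= 2 and fields[1] == name:
            return True
    return False


def ffmpeg_has_encoder(name: str = "libsvtav1") -> bool:
    """Run the ffmpeg found on PATH and look for the encoder; False if ffmpeg is absent."""
    exe = shutil.which("ffmpeg")
    if exe is None:
        return False
    result = subprocess.run(
        [exe, "-hide_banner", "-encoders"], capture_output=True, text=True, check=False
    )
    return lists_encoder(result.stdout, name)


if __name__ == "__main__":
    print("ffmpeg on PATH:", shutil.which("ffmpeg"))
    print("libsvtav1 available:", ffmpeg_has_encoder())
```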

The fix is to install an ffmpeg build that includes libsvtav1:

```bash
# Activate the environment
conda activate unitree_lerobot

# Install ffmpeg and svt-av1 from conda-forge (the key step)
conda install -c conda-forge "ffmpeg>=6.1" "svt-av1"

# Confirm that PATH resolves to the ffmpeg inside the conda env
which ffmpeg

# Check that libsvtav1 is enabled (passes if you see a line like "V..... libsvtav1")
ffmpeg -hide_banner -encoders | grep -i svtav1

# Double-check the build configuration (the output should contain --enable-libsvtav1)
ffmpeg -hide_banner -buildconf | grep -i svtav1
```

If which ffmpeg still points to the system /usr/bin/ffmpeg, the PATH order is wrong. It should look like this:

```text
(unitree_lerobot) yejiangchen@yejiangchen:~/Desktop/Codes/unitree/unitree_IL_lerobot$ which ffmpeg
/home/yejiangchen/anaconda3/envs/unitree_lerobot/bin/ffmpeg
```

Finally, rerun the conversion command (the default video mode is fine):

```bash
# --raw-dir: directory of the JSON dataset
# --repo-id: your own repo id
# --push_to_hub / --no-push_to_hub: whether to upload to the Hugging Face Hub
# --robot_type: robot type (must be a key in ROBOT_CONFIGS)
python unitree_lerobot/utils/convert_unitree_json_to_lerobot_update.py \
    --raw-dir /home/yejiangchen/Desktop/Codes/unitree/unitree_IL_lerobot/datasets/bottle \
    --repo-id yejiangchen/g1_inspire_face_stereo \
    --robot_type Unitree_G1_Inspire_FaceStereo \
    --no-push_to_hub \
    --overwrite
```

Output of the run:

```text
(unitree_lerobot) yejiangchen@yejiangchen:~/Desktop/Codes/unitree/unitree_IL_lerobot$ python unitree_lerobot/utils/convert_unitree_json_to_lerobot_update.py \
--raw-dir /home/yejiangchen/Desktop/Codes/unitree/unitree_IL_lerobot/datasets/bottle \
--repo-id yejiangchen/g1_inspire_face_stereo \
--robot_type Unitree_G1_Inspire_FaceStereo \
--no-push_to_hub \
--overwrite
Found 1 valid episodes under /home/yejiangchen/Desktop/Codes/unitree/unitree_IL_lerobot/datasets/bottle.
Loading Cache Json: 100%|████████████████████████████████████████████████████████████████████████████████| 1/1 [00:00<00:00, 62.09it/s]
==> Cached 1 episodes
Found 1 valid episodes under /home/yejiangchen/Desktop/Codes/unitree/unitree_IL_lerobot/datasets/bottle.
Loading Cache Json: 100%|████████████████████████████████████████████████████████████████████████████████| 1/1 [00:00<00:00, 11.83it/s]
==> Cached 1 episodes
Map: 100%|████████████████████████████████████████████████████████████████████████████████| 1249/1249 [00:00<00:00, 6975.38 examples/s]
Creating parquet from Arrow format: 100%|████████████████████████████████████████████████████████████████████████████████| 2/2 [00:00<00:00, 862.94ba/s]
Svt[info]: ------------------------------------------- | 0/2 [00:00<?, ?ba/s]
Svt[info]: SVT [version]: SVT-AV1 Encoder Lib v3.0.2
Svt[info]: SVT [build] : GCC 13.3.0 64 bit
Svt[info]: LIB Build date: Mar 25 2025 12:57:31
Svt[info]: -------------------------------------------
Svt[info]: Level of Parallelism: 6
Svt[info]: Number of PPCS 305
Svt[info]: [asm level on system : up to avx2]
Svt[info]: [asm level selected : up to avx2]
Svt[info]: -------------------------------------------
Svt[info]: SVT [config]: main profile tier (auto) level (auto)
Svt[info]: SVT [config]: width / height / fps numerator / fps denominator : 640 / 480 / 30 / 1
Svt[info]: SVT [config]: bit-depth / color format : 8 / YUV420
Svt[info]: SVT [config]: preset / tune / pred struct : 8 / PSNR / random access
Svt[info]: SVT [config]: gop size / mini-gop size / key-frame type : 2 / 32 / key frame
Svt[info]: SVT [config]: BRC mode / rate factor : CRF / 30
Svt[info]: SVT [config]: AQ mode / variance boost : 2 / 0
Svt[info]: SVT [config]: sharpness / luminance-based QP bias : 0 / 0
Svt[info]: Svt[info]: -------------------------------------------
Svt[info]: -------------------------------------------
Svt[info]: SVT [version]: SVT-AV1 Encoder Lib v3.0.2
Svt[info]: SVT [build] : GCC 13.3.0 64 bit
Svt[info]: LIB Build date: Mar 25 2025 12:57:31
Svt[info]: -------------------------------------------
Svt[info]: Level of Parallelism: 6
Svt[info]: Number of PPCS 305
Svt[info]: [asm level on system : up to avx2]
Svt[info]: [asm level selected : up to avx2]
Svt[info]: -------------------------------------------
Svt[info]: SVT [config]: main profile tier (auto) level (auto)
Svt[info]: SVT [config]: width / height / fps numerator / fps denominator : 640 / 480 / 30 / 1
Svt[info]: SVT [config]: bit-depth / color format : 8 / YUV420
Svt[info]: SVT [config]: preset / tune / pred struct : 8 / PSNR / random access
Svt[info]: SVT [config]: gop size / mini-gop size / key-frame type : 2 / 32 / key frame
Svt[info]: SVT [config]: BRC mode / rate factor : CRF / 30
Svt[info]: SVT [config]: AQ mode / variance boost : 2 / 0
Svt[info]: SVT [config]: sharpness / luminance-based QP bias : 0 / 0
Svt[info]: Svt[info]: -------------------------------------------
Populating LeRobotDataset: 100%|████████████████████████████████████████████████████████████████████████████████| 1/1 [00:18<00:00, 18.62s/it]
```

Local dataset directory:

~/.cache/huggingface/lerobot/yejiangchen/g1_inspire_face_stereo
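The directory above follows from the Hugging Face cache defaults. A sketch of how it is assumed to be resolved: HF_LEROBOT_HOME if set, else HF_HOME/lerobot, else XDG_CACHE_HOME/huggingface/lerobot, else ~/.cache/huggingface/lerobot. This precedence is my reading of the huggingface_hub and LeRobot defaults and should be checked against the installed versions; local_dataset_dir is a hypothetical helper:

```python
import os
from collections.abc import Mapping
from pathlib import Path
from typing import Optional


def local_dataset_dir(repo_id: str, env: Optional[Mapping[str, str]] = None) -> Path:
    """Resolve the assumed local folder of a LeRobot dataset from environment variables."""
    env = os.environ if env is None else env
    cache_root = env.get("XDG_CACHE_HOME", os.path.join("~", ".cache"))
    hf_home = env.get("HF_HOME", os.path.join(cache_root, "huggingface"))
    base = env.get("HF_LEROBOT_HOME", os.path.join(hf_home, "lerobot"))
    return Path(base).expanduser() / repo_id


print(local_dataset_dir("yejiangchen/g1_inspire_face_stereo"))
```

Passing an explicit env mapping makes the precedence easy to check without touching the real environment.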

For the remaining checks (validation and the like), refer to LeRobot directly.
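One quick check that needs no extra tooling is reading the converted dataset's metadata. A minimal sketch, assuming the LeRobot v2.x layout in which the dataset root contains meta/info.json with fps, total_episodes, total_frames and a features mapping (verify against your installed version). summarize_info is hypothetical, and the demo writes a made-up info.json so it runs anywhere:

```python
import json
import tempfile
from pathlib import Path


def summarize_info(dataset_root: Path) -> dict:
    """Pull a few fields worth eyeballing out of <dataset_root>/meta/info.json."""
    info = json.loads((Path(dataset_root) / "meta" / "info.json").read_text())
    return {
        "fps": info.get("fps"),
        "total_episodes": info.get("total_episodes"),
        "total_frames": info.get("total_frames"),
        # JSON stores shapes as lists; tuples are easier to compare
        "shapes": {name: tuple(spec["shape"]) for name, spec in info.get("features", {}).items()},
    }


# Demo with a made-up info.json (illustrative values, not from a real run)
with tempfile.TemporaryDirectory() as tmp:
    meta = Path(tmp) / "meta"
    meta.mkdir()
    fake_info = {
        "fps": 30,
        "total_episodes": 1,
        "total_frames": 100,
        "features": {
            "observation.state": {"dtype": "float32", "shape": [26]},
            "observation.images.cam_face_left": {"dtype": "video", "shape": [3, 480, 640]},
        },
    }
    (meta / "info.json").write_text(json.dumps(fake_info))
    summary = summarize_info(Path(tmp))

print(summary["shapes"]["observation.state"])  # (26,)
```

For the dataset converted here, you would call summarize_info on ~/.cache/huggingface/lerobot/yejiangchen/g1_inspire_face_stereo and compare the image shapes with the 640 / 480 resolution reported by SVT in the log.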

3 🚀 Training

Train ACT:

```bash
cd unitree_lerobot/lerobot

python lerobot/scripts/train.py \
    --dataset.repo_id=unitreerobotics/G1_ToastedBread_Dataset \
    --policy.type=act
```

Train Diffusion Policy:

```bash
cd unitree_lerobot/lerobot

python lerobot/scripts/train.py \
    --dataset.repo_id=unitreerobotics/G1_ToastedBread_Dataset \
    --policy.type=diffusion
```

Train pi0:

```bash
cd unitree_lerobot/lerobot

python lerobot/scripts/train.py \
    --dataset.repo_id=unitreerobotics/G1_ToastedBread_Dataset \
    --policy.type=pi0
```

4 🤖 Real-Robot Testing

```bash
# --policy.path: path where the trained model was saved
# --repo_id: dataset used for training (why is it needed? The state of the dataset's first frame is loaded as the initial action)
python unitree_lerobot/eval_robot/eval_g1/eval_g1.py \
    --policy.path=unitree_lerobot/lerobot/outputs/train/2025-03-25/22-11-16_diffusion/checkpoints/100000/pretrained_model \
    --repo_id=unitreerobotics/G1_ToastedBread_Dataset

# To evaluate the model on the dataset instead, use:
python unitree_lerobot/eval_robot/eval_g1/eval_g1_dataset.py \
    --policy.path=unitree_lerobot/lerobot/outputs/train/2025-03-25/22-11-16_diffusion/checkpoints/100000/pretrained_model \
    --repo_id=unitreerobotics/G1_ToastedBread_Dataset
```

5 🤔 Troubleshooting

| Problem | Solution |
|---|---|
| Why use LeRobot v2.0? | Explanation |
| 401 Client Error: Unauthorized (huggingface_hub.errors.HfHubHTTPError) | Run huggingface-cli login to authenticate. |
| FFmpeg-related errors:<br>Q1: Unknown encoder 'libsvtav1'<br>Q2: FileNotFoundError: No such file or directory: 'ffmpeg'<br>Q3: RuntimeError: Could not load libtorchcodec. Likely causes: FFmpeg is not properly installed. | Install FFmpeg: conda install -c conda-forge ffmpeg |
| Access to model google/paligemma-3b-pt-224 is restricted. | Run huggingface-cli login and request access if needed. |