Ollama

Download

URL: https://ollama.com/download

Install Ollama

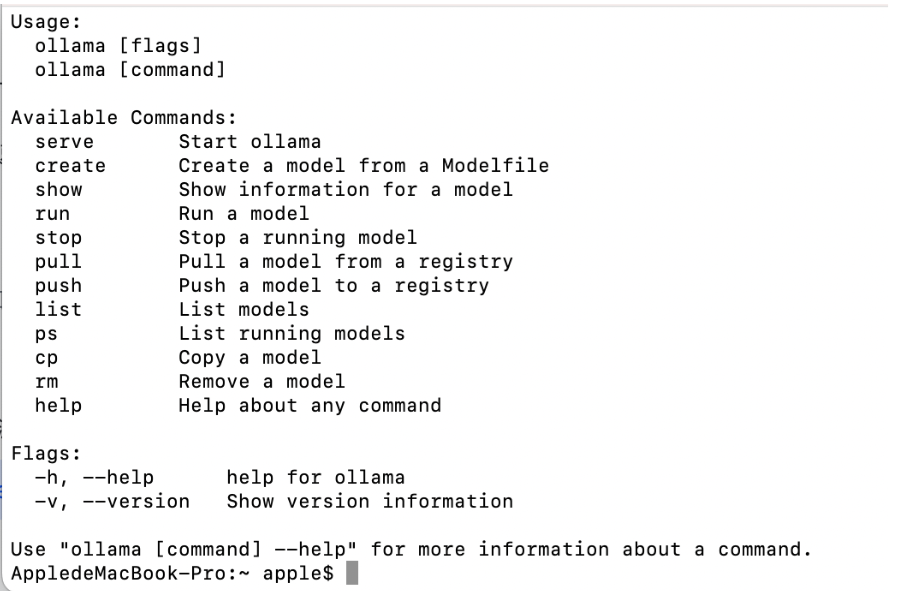

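Open the downloaded .dmg file to install Ollama. Once installation succeeds, open a terminal and type ollama; it prints the list of available ollama subcommands.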

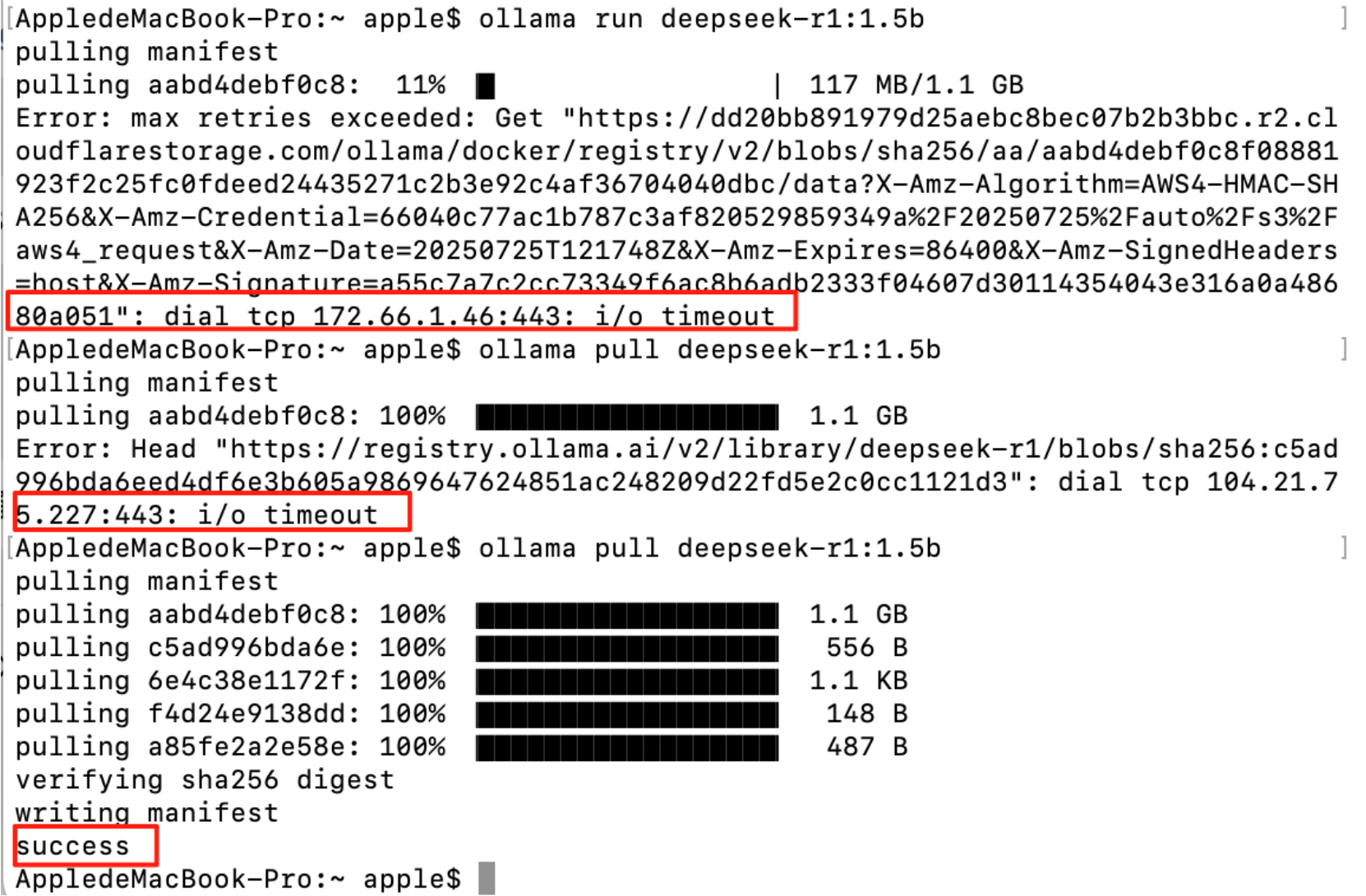

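Pull a model

Run the command: ollama pull deepseek-r1:1.5b. Ollama starts by pulling the model's manifest (its metadata) and then downloads the model itself. Errors may occur partway through, but don't worry: rerun the same command until it succeeds.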
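The "rerun until it succeeds" advice above can be automated. The following is a minimal sketch, not part of Ollama itself: the function name pull_with_retry, the attempt limit, and the delay are assumptions chosen for illustration, and the run parameter exists only so the loop can be exercised without Ollama installed.

```python
import subprocess
import time


def pull_with_retry(model, max_attempts=5, delay_seconds=2.0, run=subprocess.run):
    """Run `ollama pull <model>` until it exits with code 0.

    Hypothetical helper: `max_attempts` and `delay_seconds` are illustrative
    defaults, and `run` is injectable for testing.
    Returns the number of attempts used; raises RuntimeError if all fail.
    """
    command = ["ollama", "pull", model]
    for attempt in range(1, max_attempts + 1):
        result = run(command)
        if result.returncode == 0:
            return attempt
        if attempt < max_attempts:
            # Short pause before repeating the same command.
            time.sleep(delay_seconds)
    raise RuntimeError(f"ollama pull {model} failed after {max_attempts} attempts")
```

Calling pull_with_retry("deepseek-r1:1.5b") would then repeat the pull up to five times before giving up.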

Run the model

ollama run deepseek-r1:1.5b

Remove the model

ollama rm deepseek-r1:1.5b
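To confirm that a pull or a removal took effect, the local Ollama server can be asked which models it currently has. The sketch below assumes the server is listening on the default port 11434 (the same port used later in this article) and queries its /api/tags endpoint; the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"


def parse_model_names(payload):
    """Extract model names from the JSON body returned by /api/tags."""
    return [entry["name"] for entry in payload.get("models", [])]


def list_local_models(url=OLLAMA_TAGS_URL, timeout=5):
    """Fetch the names of the models stored locally (needs a running server)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_model_names(json.load(resp))
```

After ollama rm deepseek-r1:1.5b, the name should no longer appear in the list returned by list_local_models().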

Create a FastAPI service

from fastapi import FastAPI, HTTPException
from fastapi.middleware.cors import CORSMiddleware
from pydantic import BaseModel
import requests

app = FastAPI()


# Request body model
class ChatRequest(BaseModel):
    prompt: str
    model: str = "deepseek-r1:1.5b"


# Allow cross-origin requests (configure as needed)
app.add_middleware(
    CORSMiddleware,
    allow_origins=["*"],
    allow_methods=["*"],
    allow_headers=["*"],
)


# Plain def rather than async def: requests.post blocks, so FastAPI runs
# this handler in its thread pool instead of stalling the event loop.
@app.post("/api/chat")
def chat(request: ChatRequest):
    ollama_url = "http://localhost:11434/api/generate"
    data = {
        "model": request.model,
        "prompt": request.prompt,
        "stream": False,
    }
    response = requests.post(ollama_url, json=data)
    if response.status_code == 200:
        return {"response": response.json()["response"]}
    # Returning a (dict, 500) tuple does not set the status code in FastAPI,
    # so raise HTTPException instead.
    raise HTTPException(status_code=500, detail="Failed to get response from Ollama")


if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="0.0.0.0", port=8000)

Call the API

import requests

response = requests.post(
    "http://localhost:8000/api/chat",
    json={"prompt": "Please write a binary search"},
)
print(response.json())
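The call above assumes the service is up and answers quickly. A slightly more defensive client might look like the sketch below. It uses only the standard library so it runs without extra dependencies; the helper names build_chat_request and ask and the 120-second timeout are assumptions for illustration, not part of the service above.

```python
import json
import urllib.error
import urllib.request

# Assumption: the FastAPI service from this article runs on port 8000.
CHAT_URL = "http://localhost:8000/api/chat"


def build_chat_request(prompt, model=None, url=CHAT_URL):
    """Build the POST request; `model` is omitted so the server default applies."""
    body = {"prompt": prompt}
    if model is not None:
        body["model"] = model
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model=None, timeout=120):
    """Send the prompt and return the generated text, or raise RuntimeError."""
    request = build_chat_request(prompt, model)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as resp:
            return json.load(resp)["response"]
    except urllib.error.HTTPError as exc:
        # The service answered, but with a non-2xx status.
        raise RuntimeError(f"chat service returned HTTP {exc.code}") from exc
    except urllib.error.URLError as exc:
        # The service could not be reached at all.
        raise RuntimeError(f"could not reach chat service: {exc.reason}") from exc
```

A generous timeout is used because a local model can take a while to produce a full, non-streamed answer; the right value depends on the machine and the model size.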