点击 “AladdinEdu,同学们用得起的【H卡】算力平台”,H卡级别算力,80G大显存,按量计费,灵活弹性,顶级配置,学生更享专属优惠。

在医疗影像分析领域,数据稀缺是常态而非例外。本文将揭示如何通过迁移学习技术,在少量标注数据上实现高性能医疗影像识别模型,突破数据瓶颈的束缚。

一、医疗影像识别的特殊挑战与迁移学习的价值

医疗影像分析面临着诸多独特挑战,这些挑战使得迁移学习在该领域显得尤为重要:

1.1 医疗影像的数据困境

数据稀缺性:高质量的医疗影像数据获取困难,标注需要专业医生参与,成本极高。一家三甲医院每年产生的医疗影像数据可能仅有几千到几万例,其中具有高质量标注的更是稀少。

**类别不平衡:**疾病阳性样本往往远少于阴性样本。例如在癌症筛查中,正常样本可能占总数的90%以上,而癌变样本不足10%。

**领域特异性:**不同医疗机构、不同设备采集的影像存在分布差异。同一疾病在不同设备上的表现可能完全不同。

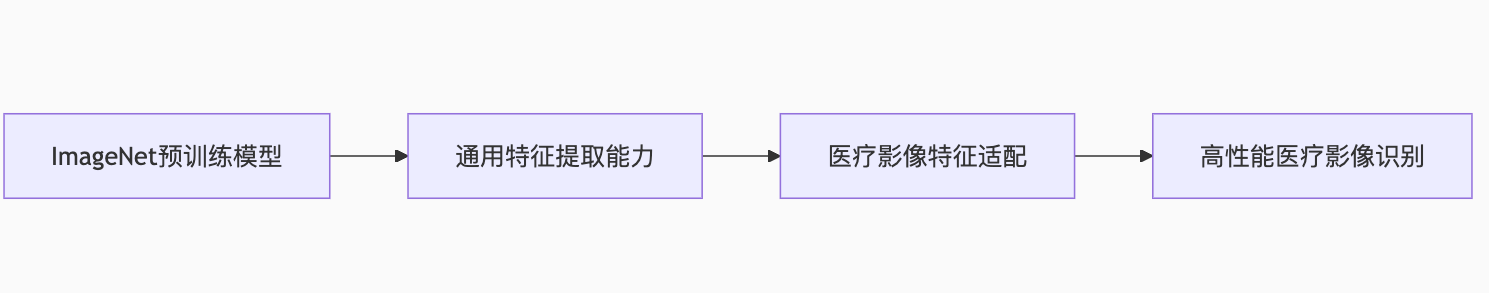

1.2 迁移学习的核心价值

迁移学习通过利用在大规模自然图像数据集(如ImageNet)上预训练的模型,将其学到的通用特征表示迁移到医疗影像任务中,有效解决了上述困境:

**特征重用:**低级特征(边缘、纹理)在自然图像和医疗影像中具有通用性

**知识迁移:**高级语义特征可通过微调适应医疗领域

**数据效率:**大幅减少对标注数据的需求量

二、 ResNet架构深度解析与医疗适配

2.1 ResNet的核心创新:残差连接

ResNet(Residual Network)通过引入残差连接解决了深度网络中的梯度消失问题,使其能够训练极深的网络结构:

import torch

import torch.nn as nn

from torchvision import models

class ResidualBlock(nn.Module):

"""残差块基础实现"""

def __init__(self, in_channels, out_channels, stride=1):

super(ResidualBlock, self).__init__()

self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=3,

stride=stride, padding=1, bias=False)

self.bn1 = nn.BatchNorm2d(out_channels)

self.relu = nn.ReLU(inplace=True)

self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3,

stride=1, padding=1, bias=False)

self.bn2 = nn.BatchNorm2d(out_channels)

# shortcut连接

self.shortcut = nn.Sequential()

if stride != 1 or in_channels != out_channels:

self.shortcut = nn.Sequential(

nn.Conv2d(in_channels, out_channels, kernel_size=1,

stride=stride, bias=False),

nn.BatchNorm2d(out_channels)

)

def forward(self, x):

residual = self.shortcut(x)

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

out += residual # 残差连接

out = self.relu(out)

return out

2.2 ResNet不同深度的选择策略

根据医疗影像任务的复杂度和数据量选择合适深度的ResNet变体:

| 模型变体 | 深度 | 参数量 | 适用场景 |

|---|---|---|---|

| ResNet-18 | 18层 | 11.7M | 小型数据集,简单分类任务 |

| ResNet-34 | 34层 | 21.8M | 中等数据集,一般分类任务 |

| ResNet-50 | 50层 | 25.6M | 较大数据集,复杂检测任务 |

| ResNet-101 | 101层 | 44.5M | 大数据集,精细分割任务 |

| ResNet-152 | 152层 | 60.2M | 超大数据集,研究性任务 |

三、 特征提取器冻结策略详解

3.1 分层冻结策略

不同层级的特征具有不同的通用性和特异性,需要采用差异化的冻结策略:

def freeze_model_layers(model, freeze_pattern):

"""

分层冻结模型参数

Args:

model: 预训练模型

freeze_pattern: 冻结模式,可选 'all', 'partial', 'none'

"""

if freeze_pattern == 'all':

# 冻结所有 backbone 参数

for param in model.parameters():

param.requires_grad = False

elif freeze_pattern == 'partial':

# 冻结前几层,微调后几层

layer_names = ['conv1', 'bn1', 'layer1', 'layer2', 'layer3', 'layer4']

# 冻结前4层(conv1, bn1, layer1, layer2)

for name, param in model.named_parameters():

if any(frozen_layer in name for frozen_layer in layer_names[:4]):

param.requires_grad = False

else:

param.requires_grad = True

else: # 'none'

# 不冻结,全部参与训练

for param in model.parameters():

param.requires_grad = True

return model

3.2 自适应冻结策略

根据训练过程中的表现动态调整冻结策略:

class AdaptiveFreezer:

"""根据训练表现自适应调整冻结策略"""

def __init__(self, model, initial_freeze_layers=4):

self.model = model

self.freeze_layers = initial_freeze_layers

self.layer_performance = {}

def evaluate_layer_importance(self, dataloader, criterion):

"""评估各层的重要性"""

original_state = self.model.state_dict()

layer_importances = {}

# 逐层评估

for name, module in self.model.named_modules():

if isinstance(module, (nn.Conv2d, nn.BatchNorm2d, nn.Linear)):

# 临时禁用该层

original_weight = module.weight.clone()

module.weight.requires_grad = False

module.weight.data.zero_()

# 评估性能下降程度

self.model.eval()

total_loss = 0

with torch.no_grad():

for inputs, targets in dataloader:

outputs = self.model(inputs)

loss = criterion(outputs, targets)

total_loss += loss.item()

layer_importances[name] = total_loss

# 恢复权重

module.weight.data.copy_(original_weight)

module.weight.requires_grad = True

# 恢复模型原始状态

self.model.load_state_dict(original_state)

return layer_importances

def update_freezing_strategy(self, dataloader, criterion, top_k=10):

"""根据重要性更新冻结策略"""

importances = self.evaluate_layer_importance(dataloader, criterion)

# 对层按重要性排序

sorted_layers = sorted(importances.items(), key=lambda x: x[1], reverse=True)

# 冻结最不重要的层

for name, module in self.model.named_modules():

layer_names = [layer[0] for layer in sorted_layers[:top_k]]

if any(frozen_name in name for frozen_name in layer_names):

for param in module.parameters():

param.requires_grad = False

else:

for param in module.parameters():

param.requires_grad = True

四、 完整实战:胸部X光肺炎分类

4.1 数据准备与预处理

import torch

from torchvision import datasets, transforms

from torch.utils.data import DataLoader, Dataset

from PIL import Image

import os

class ChestXrayDataset(Dataset):

"""胸部X光数据集加载器"""

def __init__(self, root_dir, transform=None, train=True):

self.root_dir = root_dir

self.transform = transform

self.train = train

# 设置数据路径

self.data_path = os.path.join(root_dir, 'train' if train else 'test')

self.classes = ['NORMAL', 'PNEUMONIA']

self.image_paths = []

self.labels = []

# 加载图像路径和标签

for class_idx, class_name in enumerate(self.classes):

class_path = os.path.join(self.data_path, class_name)

for img_name in os.listdir(class_path):

if img_name.endswith(('.jpeg', '.jpg', '.png')):

self.image_paths.append(os.path.join(class_path, img_name))

self.labels.append(class_idx)

def __len__(self):

return len(self.image_paths)

def __getitem__(self, idx):

img_path = self.image_paths[idx]

image = Image.open(img_path).convert('RGB') # 转换为RGB

label = self.labels[idx]

if self.transform:

image = self.transform(image)

return image, label

# 数据增强和预处理

train_transform = transforms.Compose([

transforms.RandomResizedCrop(224),

transforms.RandomHorizontalFlip(),

transforms.RandomRotation(10),

transforms.ColorJitter(brightness=0.2, contrast=0.2),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

])

test_transform = transforms.Compose([

transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

])

# 创建数据加载器

train_dataset = ChestXrayDataset('chest_xray', transform=train_transform, train=True)

test_dataset = ChestXrayDataset('chest_xray', transform=test_transform, train=False)

train_loader = DataLoader(train_dataset, batch_size=32, shuffle=True, num_workers=4)

test_loader = DataLoader(test_dataset, batch_size=32, shuffle=False, num_workers=4)

4.2 模型构建与初始化

import torch.nn as nn

from torchvision import models

import torch.optim as optim

def create_medical_resnet(model_name='resnet50', num_classes=2, freeze_strategy='partial'):

"""

创建医疗影像ResNet模型

Args:

model_name: ResNet变体名称

num_classes: 分类数量

freeze_strategy: 冻结策略

"""

# 加载预训练模型

if model_name == 'resnet18':

model = models.resnet18(pretrained=True)

elif model_name == 'resnet34':

model = models.resnet34(pretrained=True)

elif model_name == 'resnet50':

model = models.resnet50(pretrained=True)

elif model_name == 'resnet101':

model = models.resnet101(pretrained=True)

else:

raise ValueError(f"Unsupported model: {model_name}")

# 冻结特征提取层

if freeze_strategy == 'all':

for param in model.parameters():

param.requires_grad = False

elif freeze_strategy == 'partial':

# 冻结前4个layer(保留最后1-2个layer进行微调)

for name, param in model.named_parameters():

if 'layer4' not in name and 'fc' not in name:

param.requires_grad = False

# 替换最后的全连接层

in_features = model.fc.in_features

model.fc = nn.Sequential(

nn.Dropout(0.5),

nn.Linear(in_features, 512),

nn.ReLU(),

nn.BatchNorm1d(512),

nn.Dropout(0.3),

nn.Linear(512, num_classes)

)

return model

# 创建模型

model = create_medical_resnet('resnet50', num_classes=2, freeze_strategy='partial')

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model = model.to(device)

# 定义损失函数和优化器

criterion = nn.CrossEntropyLoss()

optimizer = optim.AdamW(

filter(lambda p: p.requires_grad, model.parameters()),

lr=1e-4,

weight_decay=1e-4

)

# 学习率调度器

scheduler = optim.lr_scheduler.ReduceLROnPlateau(

optimizer, mode='min', factor=0.5, patience=3, verbose=True

)

4.3 训练循环与验证

def train_model(model, train_loader, val_loader, criterion, optimizer, scheduler, num_epochs=25):

"""模型训练函数"""

best_acc = 0.0

history = {'train_loss': [], 'train_acc': [], 'val_loss': [], 'val_acc': []}

for epoch in range(num_epochs):

print(f'Epoch {epoch+1}/{num_epochs}')

print('-' * 10)

# 训练阶段

model.train()

running_loss = 0.0

running_corrects = 0

for inputs, labels in train_loader:

inputs = inputs.to(device)

labels = labels.to(device)

optimizer.zero_grad()

with torch.set_grad_enabled(True):

outputs = model(inputs)

_, preds = torch.max(outputs, 1)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

running_loss += loss.item() * inputs.size(0)

running_corrects += torch.sum(preds == labels.data)

epoch_loss = running_loss / len(train_loader.dataset)

epoch_acc = running_corrects.double() / len(train_loader.dataset)

history['train_loss'].append(epoch_loss)

history['train_acc'].append(epoch_acc.item())

print(f'Train Loss: {epoch_loss:.4f} Acc: {epoch_acc:.4f}')

# 验证阶段

model.eval()

val_loss = 0.0

val_corrects = 0

with torch.no_grad():

for inputs, labels in val_loader:

inputs = inputs.to(device)

labels = labels.to(device)

outputs = model(inputs)

_, preds = torch.max(outputs, 1)

loss = criterion(outputs, labels)

val_loss += loss.item() * inputs.size(0)

val_corrects += torch.sum(preds == labels.data)

val_loss = val_loss / len(val_loader.dataset)

val_acc = val_corrects.double() / len(val_loader.dataset)

history['val_loss'].append(val_loss)

history['val_acc'].append(val_acc.item())

print(f'Val Loss: {val_loss:.4f} Acc: {val_acc:.4f}')

# 学习率调整

scheduler.step(val_loss)

# 保存最佳模型

if val_acc > best_acc:

best_acc = val_acc

torch.save(model.state_dict(), 'best_model.pth')

print()

print(f'Best val Acc: {best_acc:4f}')

return model, history

# 开始训练

trained_model, training_history = train_model(

model, train_loader, test_loader, criterion, optimizer, scheduler, num_epochs=30

)

五、高级技巧与性能优化

5.1 渐进式微调策略

def progressive_fine_tuning(model, train_loader, val_loader, num_epochs=30):

"""渐进式微调策略"""

# 阶段1:只训练分类头

print("Phase 1: Training classifier head only")

for param in model.parameters():

param.requires_grad = False

for param in model.fc.parameters():

param.requires_grad = True

optimizer = optim.AdamW(model.fc.parameters(), lr=1e-3)

model, history = train_model(model, train_loader, val_loader, criterion,

optimizer, scheduler, num_epochs//3)

# 阶段2:微调最后两个layer

print("Phase 2: Fine-tuning last two layers")

for name, param in model.named_parameters():

if 'layer3' in name or 'layer4' in name or 'fc' in name:

param.requires_grad = True

else:

param.requires_grad = False

optimizer = optim.AdamW(

filter(lambda p: p.requires_grad, model.parameters()),

lr=1e-4

)

model, history = train_model(model, train_loader, val_loader, criterion,

optimizer, scheduler, num_epochs//3)

# 阶段3:全部微调

print("Phase 3: Full fine-tuning")

for param in model.parameters():

param.requires_grad = True

optimizer = optim.AdamW(model.parameters(), lr=1e-5)

model, history = train_model(model, train_loader, val_loader, criterion,

optimizer, scheduler, num_epochs//3)

return model

5.2 集成学习和模型融合

def create_ensemble(models_list, dataloader, device):

"""创建模型集成预测"""

all_predictions = []

all_probabilities = []

for model in models_list:

model.eval()

model_predictions = []

model_probabilities = []

with torch.no_grad():

for inputs, _ in dataloader:

inputs = inputs.to(device)

outputs = model(inputs)

probabilities = torch.softmax(outputs, dim=1)

_, preds = torch.max(outputs, 1)

model_predictions.extend(preds.cpu().numpy())

model_probabilities.extend(probabilities.cpu().numpy())

all_predictions.append(model_predictions)

all_probabilities.append(model_probabilities)

# 投票集成

ensemble_predictions = []

for i in range(len(all_predictions[0])):

votes = [pred[i] for pred in all_predictions]

ensemble_predictions.append(max(set(votes), key=votes.count))

return ensemble_predictions, all_probabilities

# 创建多个不同配置的模型

model_configs = [

{'model_name': 'resnet50', 'freeze_strategy': 'partial'},

{'model_name': 'resnet101', 'freeze_strategy': 'partial'},

{'model_name': 'resnet50', 'freeze_strategy': 'all'}

]

trained_models = []

for config in model_configs:

model = create_medical_resnet(**config, num_classes=2)

model.load_state_dict(torch.load('best_model.pth'))

trained_models.append(model)

# 集成预测

ensemble_preds, ensemble_probs = create_ensemble(trained_models, test_loader, device)

六、结果分析与模型解释

6.1 性能评估与可视化

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.metrics import confusion_matrix, classification_report

def evaluate_model(model, dataloader, device):

"""全面评估模型性能"""

model.eval()

all_preds = []

all_labels = []

all_probs = []

with torch.no_grad():

for inputs, labels in dataloader:

inputs = inputs.to(device)

labels = labels.to(device)

outputs = model(inputs)

probs = torch.softmax(outputs, dim=1)

_, preds = torch.max(outputs, 1)

all_preds.extend(preds.cpu().numpy())

all_labels.extend(labels.cpu().numpy())

all_probs.extend(probs.cpu().numpy())

# 计算评估指标

cm = confusion_matrix(all_labels, all_preds)

cr = classification_report(all_labels, all_preds,

target_names=['NORMAL', 'PNEUMONIA'])

# 绘制混淆矩阵

plt.figure(figsize=(10, 8))

sns.heatmap(cm, annot=True, fmt='d', cmap='Blues',

xticklabels=['NORMAL', 'PNEUMONIA'],

yticklabels=['NORMAL', 'PNEUMONIA'])

plt.ylabel('True Label')

plt.xlabel('Predicted Label')

plt.title('Confusion Matrix')

plt.show()

print("Classification Report:")

print(cr)

return all_preds, all_labels, all_probs

# 评估模型

predictions, true_labels, probabilities = evaluate_model(trained_model, test_loader, device)

6.2 特征可视化与可解释性

import numpy as np

from torchcam.methods import GradCAM

from torchcam.utils import overlay_mask

def visualize_attention(model, image_tensor, original_image, class_names):

"""可视化模型注意力区域"""

# 初始化GradCAM

cam_extractor = GradCAM(model, target_layer='layer4')

# 获取激活映射

with torch.no_grad():

output = model(image_tensor.unsqueeze(0))

# 生成类别激活图

activation_map = cam_extractor(output.scores.argmax().item(), output)

# 叠加到原图

result = overlay_mask(

original_image,

activation_map[0].squeeze(0),

alpha=0.5

)

plt.figure(figsize=(12, 5))

plt.subplot(1, 2, 1)

plt.imshow(original_image)

plt.title('Original Image')

plt.axis('off')

plt.subplot(1, 2, 2)

plt.imshow(result)

plt.title(f'Attention Map - Predicted: {class_names[output.scores.argmax().item()]}')

plt.axis('off')

plt.show()

# 示例可视化

sample_image, sample_label = next(iter(test_loader))

visualize_attention(trained_model, sample_image[0], sample_image[0].permute(1, 2, 0).numpy(),

['NORMAL', 'PNEUMONIA'])

总结

通过本文介绍的迁移学习技术和ResNet微调策略,我们可以在医疗影像识别任务中实现快速突破:

- 数据效率:即使在小样本场景下,也能获得出色的性能

- 训练稳定性:通过合适的冻结策略避免过拟合

- 可解释性:可视化技术帮助理解模型决策过程

- 实用性强:提供的代码可以直接应用于实际项目

关键成功因素包括:

- 合适的基础模型选择(ResNet深度)

- 分层冻结策略的实施

- 渐进式微调的应用

- 集成学习的性能提升

这些技术不仅适用于胸部X光肺炎分类,还可以推广到其他医疗影像分析任务,如皮肤病变分类、视网膜病变检测、MRI分析等。