实验介绍

本实验通过分析普通查询过程中存在的性能瓶颈点,通过执行计划的分析找到可能的性能优化点并加以实施,最终达到优化的效果,重点关注分布式聚集相关查询语句的优化。

实验目的

了解通过合理定义分布列实现分布式聚集的性能优化。

实验步骤

步骤1 使用lineitem默认第一列作为分布键进行建表

Drop table if exists lineitem;

CREATE TABLE lineitem (

l_orderkey bigint NOT NULL,

l_partkey bigint NOT NULL,

l_suppkey bigint NOT NULL,

l_linenumber bigint NOT NULL,

l_quantity numeric(15,2) NOT NULL,

l_extendedprice numeric(15,2) NOT NULL,

l_discount numeric(15,2) NOT NULL,

l_tax numeric(15,2) NOT NULL,

l_returnflag character(1) NOT NULL,

l_linestatus character(1) NOT NULL,

l_shipdate date NOT NULL,

l_commitdate date NOT NULL,

l_receiptdate date NOT NULL,

l_shipinstruct character(25) NOT NULL,

l_shipmode character(10) NOT NULL,

l_comment character varying(44) NOT NULL

)

WITH (orientation=row, compression=no)

DISTRIBUTE BY HASH(l_orderkey);步骤2 导入数据,并收集统计信息

通过 copy 工具导入 lineitem.csv 数据。

COPY LINEITEM FROM '/tmp/lineitem_.csv'

DELIMITER ','

QUOTE '"'

ESCAPE '"'

ENCODING 'UTF-8'

CSV;

analyze lineitem;步骤3 执行子查询查看计划耗时详情。

设置参数:

set enable_fast_query_shipping = off;

set enable_stream_operator = on;

该两个参数为会话级,只在本次会话期间生效。

EXPLAIN ANALYZE

select

l_partkey as temp_l_partkey, 0.2 * avg(l_quantity) as temp_avg

from

lineitem

group by l_partkey;

从结果可以看到由于分布键和group by的分组字段不匹配,导致需要进行两次聚集操作,一次在本地,一次是按 group by字段重分布之后,再进行汇总。

步骤4 使用group by列作为分布键进行建表

CREATE TABLE lineitem_by_partkey

(

l_orderkey bigint NOT NULL,

l_partkey bigint NOT NULL,

l_suppkey bigint NOT NULL,

l_linenumber bigint NOT NULL,

l_quantity numeric(15,2) NOT NULL,

l_extendedprice numeric(15,2) NOT NULL,

l_discount numeric(15,2) NOT NULL,

l_tax numeric(15,2) NOT NULL,

l_returnflag character(1) NOT NULL,

l_linestatus character(1) NOT NULL,

l_shipdate date NOT NULL,

l_commitdate date NOT NULL,

l_receiptdate date NOT NULL,

l_shipinstruct character(25) NOT NULL,

l_shipmode character(10) NOT NULL,

l_comment character varying(44) NOT NULL

)

WITH (orientation=row, compression=no)

DISTRIBUTE BY HASH(l_partkey);步骤5 导入数据,重新导入数据文件lineitem.csv,更新统计信息。

COPY lineitem_by_partkey FROM '/tmp/lineitem_.csv'

DELIMITER ','

QUOTE '"'

ESCAPE '"'

ENCODING 'UTF-8'

CSV;

analyze lineitem_by_partkey;步骤6 执行下列子查询查看计划耗时详情。

设置参数:

set enable_fast_query_shipping = off;

set enable_stream_operator = on;

该两个参数为会话级,只在本次会话期间生效。

jiang=# EXPLAIN ANALYZE

select

l_partkey as temp_l_partkey, 0.2 * avg(l_quantity) as temp_avg

from

lineitem_by_partkey

group by l_partkey;

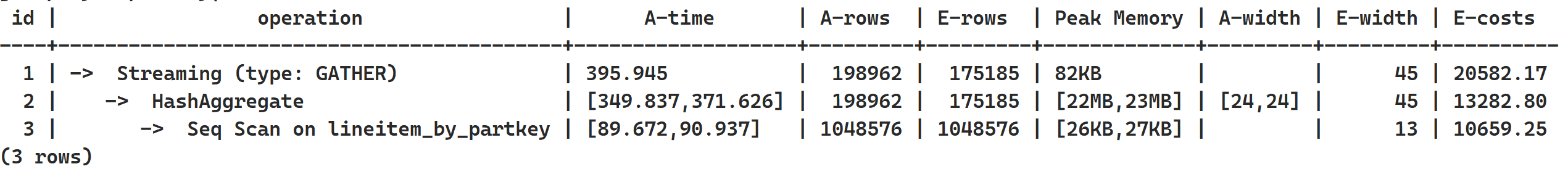

id | operation | A-time | A-rows | E-rows | Peak Memory | A-width | E-width | E-costs

----+-------------------------------------------+-------------------+---------+---------+-------------+---------+---------+----------

1 | -> Streaming (type: GATHER) | 395.945 | 198962 | 175185 | 82KB | | 45 | 20582.17

2 | -> HashAggregate | [349.837,371.626] | 198962 | 175185 | [22MB,23MB] | [24,24] | 45 | 13282.80

3 | -> Seq Scan on lineitem_by_partkey | [89.672,90.937] | 1048576 | 1048576 | [26KB,27KB] | | 13 | 10659.25

(3 rows)

Memory Information (identified by plan id)

--------------------------------------------

Coordinator Query Peak Memory:

Query Peak Memory: 1MB

Datanode:

Max Query Peak Memory: 28MB

Min Query Peak Memory: 28MB

(5 rows)

User Define Profiling

---------------------------------------------------------------------------

Plan Node id: 1 Track name: coordinator get datanode connection

(actual time=[0.021, 0.021], calls=[1, 1])

Plan Node id: 1 Track name: Coordinator serialize plan

(actual time=[0.668, 0.668], calls=[1, 1])

Plan Node id: 1 Track name: Coordinator send begin command

(actual time=[0.001, 0.001], calls=[1, 1])

Plan Node id: 1 Track name: Coordinator start transaction and send query

(actual time=[0.050, 0.050], calls=[1, 1])

(8 rows)

====== Query Summary =====

--------------------------------------------------------------------------------------------

Datanode executor start time [dn_6004_6005_6006, dn_6007_6008_6009]: [6.318 ms,6.513 ms]

Datanode executor run time [dn_6007_6008_6009, dn_6001_6002_6003]: [381.451 ms,399.702 ms]

Datanode executor end time [dn_6007_6008_6009, dn_6001_6002_6003]: [0.017 ms,0.021 ms]

Coordinator executor start time: 6.450 ms

Coordinator executor run time: 408.400 ms

Coordinator executor end time: 0.020 ms

Planner runtime: 0.187 ms

Plan size: 3857 byte

Query Id: 72902018968436769

Total runtime: 414.882 ms

(10 rows)

此时生成了语句下推的执行计划。为了分析各算子的开销,可设置禁止语句下推,观察CN上的整体计划执行情况:

set enable_fast_query_shipping = off;

这里可以看到,和默认按 l_orderkey 分布不同,此时由于不需要跨 DN 的数据交互,只需要进行一次聚集操作,不需要对数据进行 redistribute,整体性能得到提升。

这部分优化效果主要取决于 DN 本地进行聚集之后 group by 字段的唯一值数量,如果本身group by 列和分布列相关性较好,各 DN 上 group by字段唯一值较少,则数据重分布和第二次聚集操作的开销也会相对较小。

实验总结

本实验通过调整数据表分布键的方法,对子查询进行了调优,相同数据量下,选择和 group by

字段相同的分布键将有效提升子查询分布式聚集性能。