Docker RAGFlow data migration

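Background

I deployed a local RAGFlow service with docker compose -f docker-compose.yml -p ragflow up -d and now want to move it to another server. The service itself is easy to move: pull the latest code from https://github.com/infiniflow/ragflow on the new machine, reconfigure it, and start it.

But how do I migrate the data already added on the original server, such as the knowledge bases, so that I don't have to add everything again and repeat the same steps?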

1. My configuration file

The base configuration file used to start RAGFlow is docker-compose-base.yml.

It shows the volume name and mount point of each base service.

(base) root@hostname:/usr/local/soft/ai/rag/v0.19.0/ragflow/docker# vim docker-compose-base.yml

services:
  es01:
    container_name: ragflow-es-01
    profiles:
      - elasticsearch
    image: elasticsearch:${STACK_VERSION}
    volumes:
      - esdata01:/usr/share/elasticsearch/data
    ports:
      - ${ES_PORT}:9200
    env_file: .env
    environment:
      - node.name=es01
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - bootstrap.memory_lock=false
      - discovery.type=single-node
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=false
      - xpack.security.transport.ssl.enabled=false
      - cluster.routing.allocation.disk.watermark.low=5gb
      - cluster.routing.allocation.disk.watermark.high=3gb
      - cluster.routing.allocation.disk.watermark.flood_stage=2gb
      - TZ=${TIMEZONE}
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    healthcheck:
      test: ["CMD-SHELL", "curl http://localhost:9200"]
      interval: 10s
      timeout: 10s
      retries: 120
    networks:
      - ragflow
    restart: on-failure

  opensearch01:
    container_name: ragflow-opensearch-01
    profiles:
      - opensearch
    image: hub.icert.top/opensearchproject/opensearch:2.19.1
    volumes:
      - osdata01:/usr/share/opensearch/data
    ports:
      - ${OS_PORT}:9201
    env_file: .env
    environment:
      - node.name=opensearch01
      - OPENSEARCH_PASSWORD=${OPENSEARCH_PASSWORD}
      - OPENSEARCH_INITIAL_ADMIN_PASSWORD=${OPENSEARCH_PASSWORD}
      - bootstrap.memory_lock=false
      - discovery.type=single-node
      - plugins.security.disabled=false
      - plugins.security.ssl.http.enabled=false
      - plugins.security.ssl.transport.enabled=true
      - cluster.routing.allocation.disk.watermark.low=5gb
      - cluster.routing.allocation.disk.watermark.high=3gb
      - cluster.routing.allocation.disk.watermark.flood_stage=2gb
      - TZ=${TIMEZONE}
      - http.port=9201
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    healthcheck:
      test: ["CMD-SHELL", "curl http://localhost:9201"]
      interval: 10s
      timeout: 10s
      retries: 120
    networks:
      - ragflow
    restart: on-failure

  infinity:
    container_name: ragflow-infinity
    profiles:
      - infinity
    image: infiniflow/infinity:v0.6.0-dev3
    volumes:
      - infinity_data:/var/infinity
      - ./infinity_conf.toml:/infinity_conf.toml
    command: ["-f", "/infinity_conf.toml"]
    ports:
      - ${INFINITY_THRIFT_PORT}:23817
      - ${INFINITY_HTTP_PORT}:23820
      - ${INFINITY_PSQL_PORT}:5432
    env_file: .env
    environment:
      - TZ=${TIMEZONE}
    mem_limit: ${MEM_LIMIT}
    ulimits:
      nofile:
        soft: 500000
        hard: 500000
    networks:
      - ragflow
    healthcheck:
      test: ["CMD", "curl", "http://localhost:23820/admin/node/current"]
      interval: 10s
      timeout: 10s
      retries: 120
    restart: on-failure

  sandbox-executor-manager:
    container_name: ragflow-sandbox-executor-manager
    profiles:
      - sandbox
    image: ${SANDBOX_EXECUTOR_MANAGER_IMAGE-infiniflow/sandbox-executor-manager:latest}
    privileged: true
    ports:
      - ${SANDBOX_EXECUTOR_MANAGER_PORT-9385}:9385
    env_file: .env
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
    networks:
      - ragflow
    security_opt:
      - no-new-privileges:true
    environment:
      - TZ=${TIMEZONE}
      - SANDBOX_EXECUTOR_MANAGER_POOL_SIZE=${SANDBOX_EXECUTOR_MANAGER_POOL_SIZE:-3}
      - SANDBOX_BASE_PYTHON_IMAGE=${SANDBOX_BASE_PYTHON_IMAGE:-infiniflow/sandbox-base-python:latest}
      - SANDBOX_BASE_NODEJS_IMAGE=${SANDBOX_BASE_NODEJS_IMAGE:-infiniflow/sandbox-base-nodejs:latest}
      - SANDBOX_ENABLE_SECCOMP=${SANDBOX_ENABLE_SECCOMP:-false}
      - SANDBOX_MAX_MEMORY=${SANDBOX_MAX_MEMORY:-256m}
      - SANDBOX_TIMEOUT=${SANDBOX_TIMEOUT:-10s}
    healthcheck:
      test: ["CMD", "curl", "http://localhost:9385/healthz"]
      interval: 10s
      timeout: 5s
      retries: 5
    restart: on-failure

  mysql:
    # mysql:5.7 linux/arm64 image is unavailable.
    image: mysql:8.0.39
    container_name: ragflow-mysql
    env_file: .env
    environment:
      - MYSQL_ROOT_PASSWORD=${MYSQL_PASSWORD}
      - TZ=${TIMEZONE}
    command:
      --max_connections=1000
      --character-set-server=utf8mb4
      --collation-server=utf8mb4_unicode_ci
      --default-authentication-plugin=mysql_native_password
      --tls_version="TLSv1.2,TLSv1.3"
      --init-file /data/application/init.sql
      --binlog_expire_logs_seconds=604800
    ports:
      - ${MYSQL_PORT}:3306
    volumes:
      - mysql_data:/var/lib/mysql
      - ./init.sql:/data/application/init.sql
    networks:
      - ragflow
    healthcheck:
      test: ["CMD", "mysqladmin" ,"ping", "-uroot", "-p${MYSQL_PASSWORD}"]
      interval: 10s
      timeout: 10s
      retries: 3
    restart: on-failure

  minio:
    image: quay.io/minio/minio:RELEASE.2025-06-13T11-33-47Z
    container_name: ragflow-minio
    command: server --console-address ":9001" /data
    ports:
      - ${MINIO_PORT}:9000
      - ${MINIO_CONSOLE_PORT}:9001
    env_file: .env
    environment:
      - MINIO_ROOT_USER=${MINIO_USER}
      - MINIO_ROOT_PASSWORD=${MINIO_PASSWORD}
      - TZ=${TIMEZONE}
    volumes:
      - minio_data:/data
    networks:
      - ragflow
    restart: on-failure
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:9000/minio/health/live"]
      interval: 30s
      timeout: 20s
      retries: 3

  redis:
    # swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/valkey/valkey:8
    image: valkey/valkey:8
    container_name: ragflow-redis
    command: redis-server --requirepass ${REDIS_PASSWORD} --maxmemory 128mb --maxmemory-policy allkeys-lru
    env_file: .env
    ports:
      - ${REDIS_PORT}:6379
    volumes:
      - redis_data:/data
    networks:
      - ragflow
    restart: on-failure
    healthcheck:
      test: ["CMD", "redis-cli", "-a", "${REDIS_PASSWORD}", "ping"]
      interval: 5s
      timeout: 3s
      retries: 3
      start_period: 10s

volumes:
  esdata01:
    driver: local
  osdata01:
    driver: local
  infinity_data:
    driver: local
  mysql_data:
    driver: local
  minio_data:
    driver: local
  redis_data:
    driver: local

networks:
  ragflow:
    driver: bridge

Based on the docker-compose-base.yml shown above, here is where each RAGFlow component stores its data locally:

✅ Data storage paths at a glance (based on your configuration file)

| Component | Path in container | Local path (default) | Purpose |
|---|---|---|---|
| MySQL | /var/lib/mysql | mysql_data (a Docker-managed volume by default) | Structured data such as users and document metadata |
| MinIO | /data | minio_data (Docker volume) | Uploaded original documents, processed text, and so on |
| Elasticsearch (es01) | /usr/share/elasticsearch/data | esdata01 (Docker volume) | Keyword search index data |
| OpenSearch (opensearch01) | /usr/share/opensearch/data | osdata01 (Docker volume) | Optional search engine, used for retrieval |
| Infinity (vector database) | /var/infinity | infinity_data (Docker volume) | Vector indexes |
| Redis | /data | redis_data (Docker volume) | Cached data, task status, and so on |
| Sandbox Executor Manager | No persistent data | None | Runs sandbox tasks; nothing is persisted |

About these volumes

At the end of docker-compose-base.yml you defined the following volumes:

volumes:
  esdata01:
    driver: local
  osdata01:
    driver: local
  infinity_data:
    driver: local
  mysql_data:
    driver: local
  minio_data:
    driver: local
  redis_data:
    driver: local

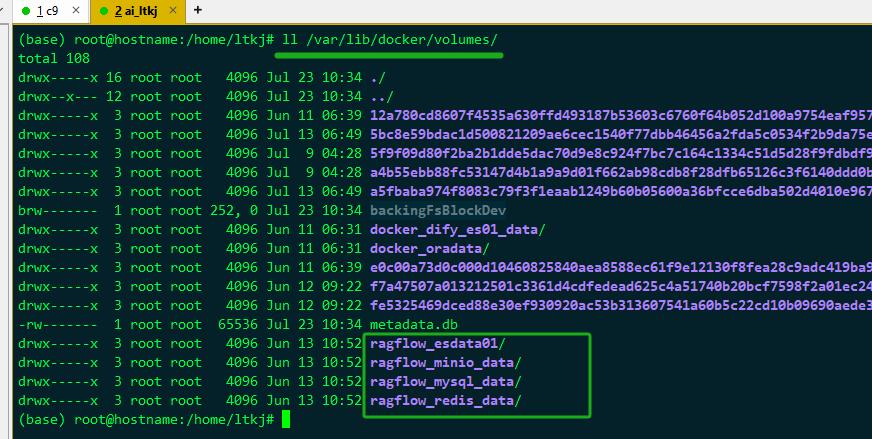

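These are Docker-managed named volumes. By default they are stored under Docker's data directory:

- On Linux this is usually:

/var/lib/docker/volumes/<volume_name>/_data

Because the stack was started with -p ragflow, Compose prefixes each volume name with the project name, so for example:

- MySQL data is actually at: /var/lib/docker/volumes/ragflow_mysql_data/_data
- MinIO data is actually at: /var/lib/docker/volumes/ragflow_minio_data/_data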
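The naming rule can be sketched as two small shell helpers. This is only an illustration: the function names are made up for this post, and it assumes Docker's default data root /var/lib/docker, the local driver without driver_opts, and volumes that have no explicit name: or external: setting in the compose file. Under those assumptions Compose names each volume <project>_<volume>.

```shell
# Assumption: Docker's data root is the default /var/lib/docker.
DOCKER_ROOT="${DOCKER_ROOT:-/var/lib/docker}"

# compose_volume_name PROJECT VOLUME (hypothetical helper)
# Prints the name Compose gives a volume declared in the compose file.
compose_volume_name() {
    printf '%s_%s\n' "$1" "$2"
}

# compose_volume_path PROJECT VOLUME (hypothetical helper)
# Prints the host directory where the local driver is expected to keep the data.
compose_volume_path() {
    printf '%s/volumes/%s/_data\n' "$DOCKER_ROOT" "$(compose_volume_name "$1" "$2")"
}

# Print the expected path of every volume declared in docker-compose-base.yml
# for the project name "ragflow" (from -p ragflow).
for v in esdata01 osdata01 infinity_data mysql_data minio_data redis_data; do
    compose_volume_path ragflow "$v"
done
```

The printed paths are only an expectation derived from the naming rule; docker volume inspect remains the authoritative source for the real mount point.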

📁 How do I find where these volumes actually live on the host?

The Docker commands you may need are summarized below.
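As a rough sketch, the lookups can be wrapped in a few shell functions. The function names are invented for this example. The docker volume ls and docker volume inspect subcommands and their --filter and --format options are standard Docker CLI, and the label used for filtering is the com.docker.compose.project label that Compose puts on the volumes it creates.

```shell
# list_project_volumes PROJECT (hypothetical helper)
# Lists the names of all volumes created by the given Compose project.
list_project_volumes() {
    docker volume ls --quiet --filter "label=com.docker.compose.project=$1"
}

# volume_mountpoint VOLUME (hypothetical helper)
# Prints only the host mount point of one volume.
volume_mountpoint() {
    docker volume inspect --format '{{ .Mountpoint }}' "$1"
}

# show_project_volumes PROJECT (hypothetical helper)
# Prints "name<TAB>mountpoint" for every volume of the project.
show_project_volumes() {
    for vol in $(list_project_volumes "$1"); do
        printf '%s\t%s\n' "$vol" "$(volume_mountpoint "$vol")"
    done
}

# Example (run on the Docker host):
#   show_project_volumes ragflow
```

Filtering by label avoids guessing the volume names, which helps when the project name differs from the one used here.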

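📦 Summary and recommendations

| Goal | Recommendation |
|---|---|
| Find where data is stored | Use docker volume inspect <volume_name> |
| Back up data | Back up the corresponding path, e.g. /var/lib/docker/volumes/ragflow_mysql_data/_data |
| Customize the path | Edit the volumes section of docker-compose-base.yml |
| Clean up data | Delete the corresponding volume, e.g. docker volume rm ragflow_mysql_data |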

So there are two ways to find the actual path of a volume:

- find / -type d -name '*esdata01*'
- docker volume inspect <volume_name>

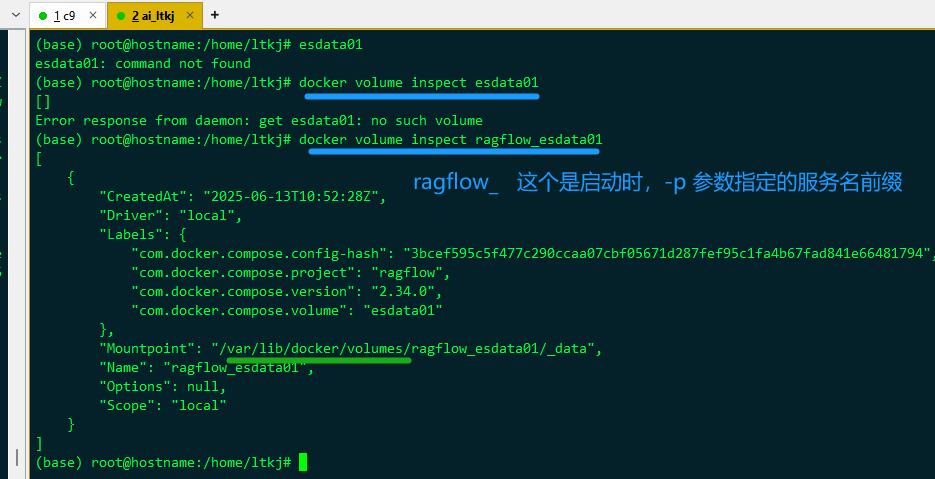

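This time we use docker volume inspect. The name esdata01 comes from the configuration file above. The bare name is not found, because Compose prefixes volume names with the project name (ragflow, from -p ragflow), so the real volume is ragflow_esdata01: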

(base) root@hostname:/home/ltkj# docker volume inspect esdata01

[]

Error response from daemon: get esdata01: no such volume

(base) root@hostname:/home/ltkj# docker volume inspect ragflow_esdata01

Sample output:

[
    {
        "CreatedAt": "2025-06-13T10:52:28Z",
        "Driver": "local",
        "Labels": {
            "com.docker.compose.config-hash": "3bcef595c5f477c290ccaa07cbf05671d287fef95c1fa4b67fad841e66481794",
            "com.docker.compose.project": "ragflow",
            "com.docker.compose.version": "2.34.0",
            "com.docker.compose.volume": "esdata01"
        },
        "Mountpoint": "/var/lib/docker/volumes/ragflow_esdata01/_data",
        "Name": "ragflow_esdata01",
        "Options": null,
        "Scope": "local"
    }
]

This tells us where the data of esdata01, MinIO, MySQL, and the other base services is stored.
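Knowing where the data lives, one possible way to move it is to pack each volume into an archive on the old server and unpack it into a volume of the same name on the new one. The sketch below is an assumption-laden illustration, not an official RAGFlow procedure. The function names and the /opt/ragflow-backup directory are made up, the alpine helper image is an arbitrary choice, and it assumes the stack is stopped while copying and that both servers run the same image versions.

```shell
# backup_volume VOLUME DEST_DIR (hypothetical helper)
# Packs the contents of a named volume into DEST_DIR/VOLUME.tar.gz using a
# throwaway alpine container. DEST_DIR must be an absolute host path,
# otherwise Docker would treat it as a volume name.
backup_volume() {
    docker run --rm \
        -v "$1":/source:ro \
        -v "$2":/backup \
        alpine tar czf "/backup/$1.tar.gz" -C /source .
}

# restore_volume VOLUME SRC_DIR (hypothetical helper)
# Unpacks SRC_DIR/VOLUME.tar.gz into the named volume. Docker creates the
# volume if it does not exist yet.
restore_volume() {
    docker run --rm \
        -v "$1":/target \
        -v "$2":/backup:ro \
        alpine tar xzf "/backup/$1.tar.gz" -C /target
}

# Example, with the stack stopped on both servers
# (docker compose -f docker-compose.yml -p ragflow down):
#   old server: backup_volume  ragflow_mysql_data /opt/ragflow-backup
#   copy /opt/ragflow-backup to the new server (for example with scp or rsync)
#   new server: restore_volume ragflow_mysql_data /opt/ragflow-backup
```

Going through a helper container instead of copying /var/lib/docker/volumes directly keeps the procedure independent of where Docker's data root is on each machine. The same two calls would be repeated for the other volumes that hold data you care about, such as ragflow_minio_data and ragflow_esdata01.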

🛠️ If you want to customize these paths

You can point a volume at a host directory in the volumes configuration, for example:

volumes:
  mysql_data:
    driver: local
    driver_opts:
      type: none
      o: bind
      device: /opt/ragflow/mysql_data

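With this, MySQL data is stored in /opt/ragflow/mysql_data on the host. The directory must already exist, because the bind mount does not create it.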
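A minimal sketch of preparing that directory before the stack starts is shown below. The helper name is hypothetical, and ownership and permissions are left at their defaults, which may or may not suit the MySQL image.

```shell
# prepare_bind_dir DIR (hypothetical helper)
# Creates the host directory that a bind-backed volume points at and
# reports it. With "type: none" and "o: bind" the local driver mounts an
# existing directory, so it has to be there before the stack starts.
prepare_bind_dir() {
    mkdir -p "$1" && printf 'ready: %s\n' "$1"
}

# Example: prepare_bind_dir /opt/ragflow/mysql_data
```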