大模型微调之 使用 LLaMA-Factory 微调 Llama3

使用 LLaMA Factory 微调 Llama-3 中文对话模型

安装 LLaMA Factory 依赖

%cd /content/

%rm -rf LLaMA-Factory

!git clone https://github.com/hiyouga/LLaMA-Factory.git

%cd LLaMA-Factory

%ls

!pip install "unsloth[colab-new] @ git+https://github.com/unslothai/unsloth.git"

!pip install --no-deps xformers==0.0.25

!pip install .[bitsandbytes]

运行结果为:

/content

Cloning into 'LLaMA-Factory'...

remote: Enumerating objects: 9713, done.

remote: Total 9713 (delta 0), reused 0 (delta 0), pack-reused 9713

Receiving objects: 100% (9713/9713), 213.36 MiB | 22.02 MiB/s, done.

Resolving deltas: 100% (7170/7170), done.

Updating files: 100% (196/196), done.

/content/LLaMA-Factory

assets/ docker-compose.yml examples/ pyproject.toml requirements.txt src/

CITATION.cff Dockerfile LICENSE README.md scripts/ tests/

data/ evaluation/ Makefile README_zh.md setup.py

Collecting unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git

Cloning https://github.com/unslothai/unsloth.git to /tmp/pip-install-c39dl25x/unsloth_a4e8ae52016047a8927f82c691b24fbb

Running command git clone --filter=blob:none --quiet https://github.com/unslothai/unsloth.git /tmp/pip-install-c39dl25x/unsloth_a4e8ae52016047a8927f82c691b24fbb

Resolved https://github.com/unslothai/unsloth.git to commit ec19e61c854dcf9104386fa63fc6c4f2944d4f35

Installing build dependencies ... done

Getting requirements to build wheel ... done

Installing backend dependencies ... done

Preparing metadata (pyproject.toml) ... done

Requirement already satisfied: tyro in /usr/local/lib/python3.10/dist-packages (from unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (0.8.3)

Requirement already satisfied: transformers>=4.38.2 in /usr/local/lib/python3.10/dist-packages (from unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (4.38.2)

Requirement already satisfied: datasets>=2.16.0 in /usr/local/lib/python3.10/dist-packages (from unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (2.19.0)

Requirement already satisfied: sentencepiece in /usr/local/lib/python3.10/dist-packages (from unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (0.1.99)

Requirement already satisfied: tqdm in /usr/local/lib/python3.10/dist-packages (from unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (4.66.2)

Requirement already satisfied: psutil in /usr/local/lib/python3.10/dist-packages (from unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (5.9.5)

Requirement already satisfied: wheel>=0.42.0 in /usr/local/lib/python3.10/dist-packages (from unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (0.43.0)

Requirement already satisfied: numpy in /usr/local/lib/python3.10/dist-packages (from unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (1.25.2)

Requirement already satisfied: protobuf<4.0.0 in /usr/local/lib/python3.10/dist-packages (from unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (3.20.3)

Requirement already satisfied: filelock in /usr/local/lib/python3.10/dist-packages (from datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (3.13.4)

Requirement already satisfied: pyarrow>=12.0.0 in /usr/local/lib/python3.10/dist-packages (from datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (14.0.2)

Requirement already satisfied: pyarrow-hotfix in /usr/local/lib/python3.10/dist-packages (from datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (0.6)

Requirement already satisfied: dill<0.3.9,>=0.3.0 in /usr/local/lib/python3.10/dist-packages (from datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (0.3.8)

Requirement already satisfied: pandas in /usr/local/lib/python3.10/dist-packages (from datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (2.0.3)

Requirement already satisfied: requests>=2.19.0 in /usr/local/lib/python3.10/dist-packages (from datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (2.31.0)

Requirement already satisfied: xxhash in /usr/local/lib/python3.10/dist-packages (from datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (3.4.1)

Requirement already satisfied: multiprocess in /usr/local/lib/python3.10/dist-packages (from datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (0.70.16)

Requirement already satisfied: fsspec[http]<=2024.3.1,>=2023.1.0 in /usr/local/lib/python3.10/dist-packages (from datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (2023.6.0)

Requirement already satisfied: aiohttp in /usr/local/lib/python3.10/dist-packages (from datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (3.9.5)

Requirement already satisfied: huggingface-hub>=0.21.2 in /usr/local/lib/python3.10/dist-packages (from datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (0.22.2)

Requirement already satisfied: packaging in /usr/local/lib/python3.10/dist-packages (from datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (24.0)

Requirement already satisfied: pyyaml>=5.1 in /usr/local/lib/python3.10/dist-packages (from datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (6.0.1)

Requirement already satisfied: regex!=2019.12.17 in /usr/local/lib/python3.10/dist-packages (from transformers>=4.38.2->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (2023.12.25)

Requirement already satisfied: tokenizers<0.19,>=0.14 in /usr/local/lib/python3.10/dist-packages (from transformers>=4.38.2->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (0.15.2)

Requirement already satisfied: safetensors>=0.4.1 in /usr/local/lib/python3.10/dist-packages (from transformers>=4.38.2->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (0.4.3)

Requirement already satisfied: docstring-parser>=0.14.1 in /usr/local/lib/python3.10/dist-packages (from tyro->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (0.16)

Requirement already satisfied: typing-extensions>=4.7.0 in /usr/local/lib/python3.10/dist-packages (from tyro->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (4.11.0)

Requirement already satisfied: rich>=11.1.0 in /usr/local/lib/python3.10/dist-packages (from tyro->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (13.7.1)

Requirement already satisfied: shtab>=1.5.6 in /usr/local/lib/python3.10/dist-packages (from tyro->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (1.7.1)

Requirement already satisfied: aiosignal>=1.1.2 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (1.3.1)

Requirement already satisfied: attrs>=17.3.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (23.2.0)

Requirement already satisfied: frozenlist>=1.1.1 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (1.4.1)

Requirement already satisfied: multidict<7.0,>=4.5 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (6.0.5)

Requirement already satisfied: yarl<2.0,>=1.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (1.9.4)

Requirement already satisfied: async-timeout<5.0,>=4.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (4.0.3)

Requirement already satisfied: charset-normalizer<4,>=2 in /usr/local/lib/python3.10/dist-packages (from requests>=2.19.0->datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (3.3.2)

Requirement already satisfied: idna<4,>=2.5 in /usr/local/lib/python3.10/dist-packages (from requests>=2.19.0->datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (3.7)

Requirement already satisfied: urllib3<3,>=1.21.1 in /usr/local/lib/python3.10/dist-packages (from requests>=2.19.0->datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (2.0.7)

Requirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.10/dist-packages (from requests>=2.19.0->datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (2024.2.2)

Requirement already satisfied: markdown-it-py>=2.2.0 in /usr/local/lib/python3.10/dist-packages (from rich>=11.1.0->tyro->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (3.0.0)

Requirement already satisfied: pygments<3.0.0,>=2.13.0 in /usr/local/lib/python3.10/dist-packages (from rich>=11.1.0->tyro->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (2.16.1)

Requirement already satisfied: python-dateutil>=2.8.2 in /usr/local/lib/python3.10/dist-packages (from pandas->datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (2.8.2)

Requirement already satisfied: pytz>=2020.1 in /usr/local/lib/python3.10/dist-packages (from pandas->datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (2023.4)

Requirement already satisfied: tzdata>=2022.1 in /usr/local/lib/python3.10/dist-packages (from pandas->datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (2024.1)

Requirement already satisfied: mdurl~=0.1 in /usr/local/lib/python3.10/dist-packages (from markdown-it-py>=2.2.0->rich>=11.1.0->tyro->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (0.1.2)

Requirement already satisfied: six>=1.5 in /usr/local/lib/python3.10/dist-packages (from python-dateutil>=2.8.2->pandas->datasets>=2.16.0->unsloth[colab-new]@ git+https://github.com/unslothai/unsloth.git) (1.16.0)

Collecting xformers

Using cached xformers-0.0.25.post1-cp310-cp310-manylinux2014_x86_64.whl (222.5 MB)

Installing collected packages: xformers

Successfully installed xformers-0.0.25.post1

Processing /content/LLaMA-Factory

Installing build dependencies ... done

Getting requirements to build wheel ... done

Installing backend dependencies ... done

Preparing metadata (pyproject.toml) ... done

Requirement already satisfied: torch>=1.13.1 in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (2.2.1+cu121)

Requirement already satisfied: transformers>=4.37.2 in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (4.38.2)

Requirement already satisfied: datasets>=2.14.3 in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (2.19.0)

Requirement already satisfied: accelerate>=0.27.2 in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (0.29.3)

Requirement already satisfied: peft>=0.10.0 in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (0.10.0)

Requirement already satisfied: trl>=0.8.1 in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (0.8.5)

Requirement already satisfied: gradio>=4.0.0 in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (4.27.0)

Requirement already satisfied: scipy in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (1.11.4)

Requirement already satisfied: einops in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (0.7.0)

Requirement already satisfied: sentencepiece in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (0.1.99)

Requirement already satisfied: protobuf in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (3.20.3)

Requirement already satisfied: uvicorn in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (0.29.0)

Requirement already satisfied: pydantic in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (2.7.0)

Requirement already satisfied: fastapi in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (0.110.2)

Requirement already satisfied: sse-starlette in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (2.1.0)

Requirement already satisfied: matplotlib in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (3.7.1)

Requirement already satisfied: fire in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (0.6.0)

Requirement already satisfied: bitsandbytes>=0.39.0 in /usr/local/lib/python3.10/dist-packages (from llmtuner==0.6.4.dev0) (0.43.1)

Requirement already satisfied: numpy>=1.17 in /usr/local/lib/python3.10/dist-packages (from accelerate>=0.27.2->llmtuner==0.6.4.dev0) (1.25.2)

Requirement already satisfied: packaging>=20.0 in /usr/local/lib/python3.10/dist-packages (from accelerate>=0.27.2->llmtuner==0.6.4.dev0) (24.0)

Requirement already satisfied: psutil in /usr/local/lib/python3.10/dist-packages (from accelerate>=0.27.2->llmtuner==0.6.4.dev0) (5.9.5)

Requirement already satisfied: pyyaml in /usr/local/lib/python3.10/dist-packages (from accelerate>=0.27.2->llmtuner==0.6.4.dev0) (6.0.1)

Requirement already satisfied: huggingface-hub in /usr/local/lib/python3.10/dist-packages (from accelerate>=0.27.2->llmtuner==0.6.4.dev0) (0.22.2)

Requirement already satisfied: safetensors>=0.3.1 in /usr/local/lib/python3.10/dist-packages (from accelerate>=0.27.2->llmtuner==0.6.4.dev0) (0.4.3)

Requirement already satisfied: filelock in /usr/local/lib/python3.10/dist-packages (from datasets>=2.14.3->llmtuner==0.6.4.dev0) (3.13.4)

Requirement already satisfied: pyarrow>=12.0.0 in /usr/local/lib/python3.10/dist-packages (from datasets>=2.14.3->llmtuner==0.6.4.dev0) (14.0.2)

Requirement already satisfied: pyarrow-hotfix in /usr/local/lib/python3.10/dist-packages (from datasets>=2.14.3->llmtuner==0.6.4.dev0) (0.6)

Requirement already satisfied: dill<0.3.9,>=0.3.0 in /usr/local/lib/python3.10/dist-packages (from datasets>=2.14.3->llmtuner==0.6.4.dev0) (0.3.8)

Requirement already satisfied: pandas in /usr/local/lib/python3.10/dist-packages (from datasets>=2.14.3->llmtuner==0.6.4.dev0) (2.0.3)

Requirement already satisfied: requests>=2.19.0 in /usr/local/lib/python3.10/dist-packages (from datasets>=2.14.3->llmtuner==0.6.4.dev0) (2.31.0)

Requirement already satisfied: tqdm>=4.62.1 in /usr/local/lib/python3.10/dist-packages (from datasets>=2.14.3->llmtuner==0.6.4.dev0) (4.66.2)

Requirement already satisfied: xxhash in /usr/local/lib/python3.10/dist-packages (from datasets>=2.14.3->llmtuner==0.6.4.dev0) (3.4.1)

Requirement already satisfied: multiprocess in /usr/local/lib/python3.10/dist-packages (from datasets>=2.14.3->llmtuner==0.6.4.dev0) (0.70.16)

Requirement already satisfied: fsspec[http]<=2024.3.1,>=2023.1.0 in /usr/local/lib/python3.10/dist-packages (from datasets>=2.14.3->llmtuner==0.6.4.dev0) (2023.6.0)

Requirement already satisfied: aiohttp in /usr/local/lib/python3.10/dist-packages (from datasets>=2.14.3->llmtuner==0.6.4.dev0) (3.9.5)

Requirement already satisfied: aiofiles<24.0,>=22.0 in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (23.2.1)

Requirement already satisfied: altair<6.0,>=4.2.0 in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (4.2.2)

Requirement already satisfied: ffmpy in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (0.3.2)

Requirement already satisfied: gradio-client==0.15.1 in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (0.15.1)

Requirement already satisfied: httpx>=0.24.1 in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (0.27.0)

Requirement already satisfied: importlib-resources<7.0,>=1.3 in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (6.4.0)

Requirement already satisfied: jinja2<4.0 in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (3.1.3)

Requirement already satisfied: markupsafe~=2.0 in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (2.1.5)

Requirement already satisfied: orjson~=3.0 in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (3.10.1)

Requirement already satisfied: pillow<11.0,>=8.0 in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (9.4.0)

Requirement already satisfied: pydub in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (0.25.1)

Requirement already satisfied: python-multipart>=0.0.9 in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (0.0.9)

Requirement already satisfied: ruff>=0.2.2 in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (0.4.1)

Requirement already satisfied: semantic-version~=2.0 in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (2.10.0)

Requirement already satisfied: tomlkit==0.12.0 in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (0.12.0)

Requirement already satisfied: typer<1.0,>=0.12 in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (0.12.3)

Requirement already satisfied: typing-extensions~=4.0 in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (4.11.0)

Requirement already satisfied: urllib3~=2.0 in /usr/local/lib/python3.10/dist-packages (from gradio>=4.0.0->llmtuner==0.6.4.dev0) (2.0.7)

Requirement already satisfied: websockets<12.0,>=10.0 in /usr/local/lib/python3.10/dist-packages (from gradio-client==0.15.1->gradio>=4.0.0->llmtuner==0.6.4.dev0) (11.0.3)

Requirement already satisfied: contourpy>=1.0.1 in /usr/local/lib/python3.10/dist-packages (from matplotlib->llmtuner==0.6.4.dev0) (1.2.1)

Requirement already satisfied: cycler>=0.10 in /usr/local/lib/python3.10/dist-packages (from matplotlib->llmtuner==0.6.4.dev0) (0.12.1)

Requirement already satisfied: fonttools>=4.22.0 in /usr/local/lib/python3.10/dist-packages (from matplotlib->llmtuner==0.6.4.dev0) (4.51.0)

Requirement already satisfied: kiwisolver>=1.0.1 in /usr/local/lib/python3.10/dist-packages (from matplotlib->llmtuner==0.6.4.dev0) (1.4.5)

Requirement already satisfied: pyparsing>=2.3.1 in /usr/local/lib/python3.10/dist-packages (from matplotlib->llmtuner==0.6.4.dev0) (3.1.2)

Requirement already satisfied: python-dateutil>=2.7 in /usr/local/lib/python3.10/dist-packages (from matplotlib->llmtuner==0.6.4.dev0) (2.8.2)

Requirement already satisfied: annotated-types>=0.4.0 in /usr/local/lib/python3.10/dist-packages (from pydantic->llmtuner==0.6.4.dev0) (0.6.0)

Requirement already satisfied: pydantic-core==2.18.1 in /usr/local/lib/python3.10/dist-packages (from pydantic->llmtuner==0.6.4.dev0) (2.18.1)

Requirement already satisfied: sympy in /usr/local/lib/python3.10/dist-packages (from torch>=1.13.1->llmtuner==0.6.4.dev0) (1.12)

Requirement already satisfied: networkx in /usr/local/lib/python3.10/dist-packages (from torch>=1.13.1->llmtuner==0.6.4.dev0) (3.3)

Requirement already satisfied: nvidia-cuda-nvrtc-cu12==12.1.105 in /usr/local/lib/python3.10/dist-packages (from torch>=1.13.1->llmtuner==0.6.4.dev0) (12.1.105)

Requirement already satisfied: nvidia-cuda-runtime-cu12==12.1.105 in /usr/local/lib/python3.10/dist-packages (from torch>=1.13.1->llmtuner==0.6.4.dev0) (12.1.105)

Requirement already satisfied: nvidia-cuda-cupti-cu12==12.1.105 in /usr/local/lib/python3.10/dist-packages (from torch>=1.13.1->llmtuner==0.6.4.dev0) (12.1.105)

Requirement already satisfied: nvidia-cudnn-cu12==8.9.2.26 in /usr/local/lib/python3.10/dist-packages (from torch>=1.13.1->llmtuner==0.6.4.dev0) (8.9.2.26)

Requirement already satisfied: nvidia-cublas-cu12==12.1.3.1 in /usr/local/lib/python3.10/dist-packages (from torch>=1.13.1->llmtuner==0.6.4.dev0) (12.1.3.1)

Requirement already satisfied: nvidia-cufft-cu12==11.0.2.54 in /usr/local/lib/python3.10/dist-packages (from torch>=1.13.1->llmtuner==0.6.4.dev0) (11.0.2.54)

Requirement already satisfied: nvidia-curand-cu12==10.3.2.106 in /usr/local/lib/python3.10/dist-packages (from torch>=1.13.1->llmtuner==0.6.4.dev0) (10.3.2.106)

Requirement already satisfied: nvidia-cusolver-cu12==11.4.5.107 in /usr/local/lib/python3.10/dist-packages (from torch>=1.13.1->llmtuner==0.6.4.dev0) (11.4.5.107)

Requirement already satisfied: nvidia-cusparse-cu12==12.1.0.106 in /usr/local/lib/python3.10/dist-packages (from torch>=1.13.1->llmtuner==0.6.4.dev0) (12.1.0.106)

Requirement already satisfied: nvidia-nccl-cu12==2.19.3 in /usr/local/lib/python3.10/dist-packages (from torch>=1.13.1->llmtuner==0.6.4.dev0) (2.19.3)

Requirement already satisfied: nvidia-nvtx-cu12==12.1.105 in /usr/local/lib/python3.10/dist-packages (from torch>=1.13.1->llmtuner==0.6.4.dev0) (12.1.105)

Requirement already satisfied: triton==2.2.0 in /usr/local/lib/python3.10/dist-packages (from torch>=1.13.1->llmtuner==0.6.4.dev0) (2.2.0)

Requirement already satisfied: nvidia-nvjitlink-cu12 in /usr/local/lib/python3.10/dist-packages (from nvidia-cusolver-cu12==11.4.5.107->torch>=1.13.1->llmtuner==0.6.4.dev0) (12.4.127)

Requirement already satisfied: regex!=2019.12.17 in /usr/local/lib/python3.10/dist-packages (from transformers>=4.37.2->llmtuner==0.6.4.dev0) (2023.12.25)

Requirement already satisfied: tokenizers<0.19,>=0.14 in /usr/local/lib/python3.10/dist-packages (from transformers>=4.37.2->llmtuner==0.6.4.dev0) (0.15.2)

Requirement already satisfied: tyro>=0.5.11 in /usr/local/lib/python3.10/dist-packages (from trl>=0.8.1->llmtuner==0.6.4.dev0) (0.8.3)

Requirement already satisfied: click>=7.0 in /usr/local/lib/python3.10/dist-packages (from uvicorn->llmtuner==0.6.4.dev0) (8.1.7)

Requirement already satisfied: h11>=0.8 in /usr/local/lib/python3.10/dist-packages (from uvicorn->llmtuner==0.6.4.dev0) (0.14.0)

Requirement already satisfied: starlette<0.38.0,>=0.37.2 in /usr/local/lib/python3.10/dist-packages (from fastapi->llmtuner==0.6.4.dev0) (0.37.2)

Requirement already satisfied: six in /usr/local/lib/python3.10/dist-packages (from fire->llmtuner==0.6.4.dev0) (1.16.0)

Requirement already satisfied: termcolor in /usr/local/lib/python3.10/dist-packages (from fire->llmtuner==0.6.4.dev0) (2.4.0)

Requirement already satisfied: anyio in /usr/local/lib/python3.10/dist-packages (from sse-starlette->llmtuner==0.6.4.dev0) (3.7.1)

Requirement already satisfied: entrypoints in /usr/local/lib/python3.10/dist-packages (from altair<6.0,>=4.2.0->gradio>=4.0.0->llmtuner==0.6.4.dev0) (0.4)

Requirement already satisfied: jsonschema>=3.0 in /usr/local/lib/python3.10/dist-packages (from altair<6.0,>=4.2.0->gradio>=4.0.0->llmtuner==0.6.4.dev0) (4.19.2)

Requirement already satisfied: toolz in /usr/local/lib/python3.10/dist-packages (from altair<6.0,>=4.2.0->gradio>=4.0.0->llmtuner==0.6.4.dev0) (0.12.1)

Requirement already satisfied: aiosignal>=1.1.2 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets>=2.14.3->llmtuner==0.6.4.dev0) (1.3.1)

Requirement already satisfied: attrs>=17.3.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets>=2.14.3->llmtuner==0.6.4.dev0) (23.2.0)

Requirement already satisfied: frozenlist>=1.1.1 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets>=2.14.3->llmtuner==0.6.4.dev0) (1.4.1)

Requirement already satisfied: multidict<7.0,>=4.5 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets>=2.14.3->llmtuner==0.6.4.dev0) (6.0.5)

Requirement already satisfied: yarl<2.0,>=1.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets>=2.14.3->llmtuner==0.6.4.dev0) (1.9.4)

Requirement already satisfied: async-timeout<5.0,>=4.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets>=2.14.3->llmtuner==0.6.4.dev0) (4.0.3)

Requirement already satisfied: certifi in /usr/local/lib/python3.10/dist-packages (from httpx>=0.24.1->gradio>=4.0.0->llmtuner==0.6.4.dev0) (2024.2.2)

Requirement already satisfied: httpcore==1.* in /usr/local/lib/python3.10/dist-packages (from httpx>=0.24.1->gradio>=4.0.0->llmtuner==0.6.4.dev0) (1.0.5)

Requirement already satisfied: idna in /usr/local/lib/python3.10/dist-packages (from httpx>=0.24.1->gradio>=4.0.0->llmtuner==0.6.4.dev0) (3.7)

Requirement already satisfied: sniffio in /usr/local/lib/python3.10/dist-packages (from httpx>=0.24.1->gradio>=4.0.0->llmtuner==0.6.4.dev0) (1.3.1)

Requirement already satisfied: pytz>=2020.1 in /usr/local/lib/python3.10/dist-packages (from pandas->datasets>=2.14.3->llmtuner==0.6.4.dev0) (2023.4)

Requirement already satisfied: tzdata>=2022.1 in /usr/local/lib/python3.10/dist-packages (from pandas->datasets>=2.14.3->llmtuner==0.6.4.dev0) (2024.1)

Requirement already satisfied: charset-normalizer<4,>=2 in /usr/local/lib/python3.10/dist-packages (from requests>=2.19.0->datasets>=2.14.3->llmtuner==0.6.4.dev0) (3.3.2)

Requirement already satisfied: exceptiongroup in /usr/local/lib/python3.10/dist-packages (from anyio->sse-starlette->llmtuner==0.6.4.dev0) (1.2.0)

Requirement already satisfied: shellingham>=1.3.0 in /usr/local/lib/python3.10/dist-packages (from typer<1.0,>=0.12->gradio>=4.0.0->llmtuner==0.6.4.dev0) (1.5.4)

Requirement already satisfied: rich>=10.11.0 in /usr/local/lib/python3.10/dist-packages (from typer<1.0,>=0.12->gradio>=4.0.0->llmtuner==0.6.4.dev0) (13.7.1)

Requirement already satisfied: docstring-parser>=0.14.1 in /usr/local/lib/python3.10/dist-packages (from tyro>=0.5.11->trl>=0.8.1->llmtuner==0.6.4.dev0) (0.16)

Requirement already satisfied: shtab>=1.5.6 in /usr/local/lib/python3.10/dist-packages (from tyro>=0.5.11->trl>=0.8.1->llmtuner==0.6.4.dev0) (1.7.1)

Requirement already satisfied: mpmath>=0.19 in /usr/local/lib/python3.10/dist-packages (from sympy->torch>=1.13.1->llmtuner==0.6.4.dev0) (1.3.0)

Requirement already satisfied: jsonschema-specifications>=2023.03.6 in /usr/local/lib/python3.10/dist-packages (from jsonschema>=3.0->altair<6.0,>=4.2.0->gradio>=4.0.0->llmtuner==0.6.4.dev0) (2023.12.1)

Requirement already satisfied: referencing>=0.28.4 in /usr/local/lib/python3.10/dist-packages (from jsonschema>=3.0->altair<6.0,>=4.2.0->gradio>=4.0.0->llmtuner==0.6.4.dev0) (0.34.0)

Requirement already satisfied: rpds-py>=0.7.1 in /usr/local/lib/python3.10/dist-packages (from jsonschema>=3.0->altair<6.0,>=4.2.0->gradio>=4.0.0->llmtuner==0.6.4.dev0) (0.18.0)

Requirement already satisfied: markdown-it-py>=2.2.0 in /usr/local/lib/python3.10/dist-packages (from rich>=10.11.0->typer<1.0,>=0.12->gradio>=4.0.0->llmtuner==0.6.4.dev0) (3.0.0)

Requirement already satisfied: pygments<3.0.0,>=2.13.0 in /usr/local/lib/python3.10/dist-packages (from rich>=10.11.0->typer<1.0,>=0.12->gradio>=4.0.0->llmtuner==0.6.4.dev0) (2.16.1)

Requirement already satisfied: mdurl~=0.1 in /usr/local/lib/python3.10/dist-packages (from markdown-it-py>=2.2.0->rich>=10.11.0->typer<1.0,>=0.12->gradio>=4.0.0->llmtuner==0.6.4.dev0) (0.1.2)

Building wheels for collected packages: llmtuner

Building wheel for llmtuner (pyproject.toml) ... done

Created wheel for llmtuner: filename=llmtuner-0.6.4.dev0-py3-none-any.whl size=145020 sha256=6837fe627fc1f20519dfbf73d85fa6586f396586b5bfec0ad1232d431e3406bd

Stored in directory: /root/.cache/pip/wheels/de/aa/c5/27b5682c5592b7c0eecc3e208f176dedf6b11a61cf2a910b85

Successfully built llmtuner

Installing collected packages: llmtuner

Attempting uninstall: llmtuner

Found existing installation: llmtuner 0.6.4.dev0

Uninstalling llmtuner-0.6.4.dev0:

Successfully uninstalled llmtuner-0.6.4.dev0

Successfully installed llmtuner-0.6.4.dev0

检查 GPU 环境

import torch

try:

assert torch.cuda.is_available() is True

except AssertionError:

print("需要 GPU 环境,申请教程:https://zhuanlan.zhihu.com/p/642542618")

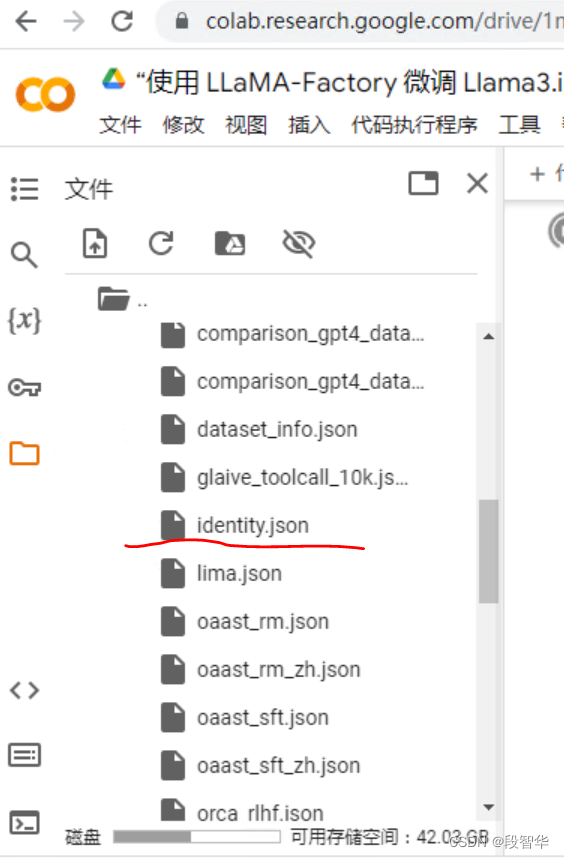

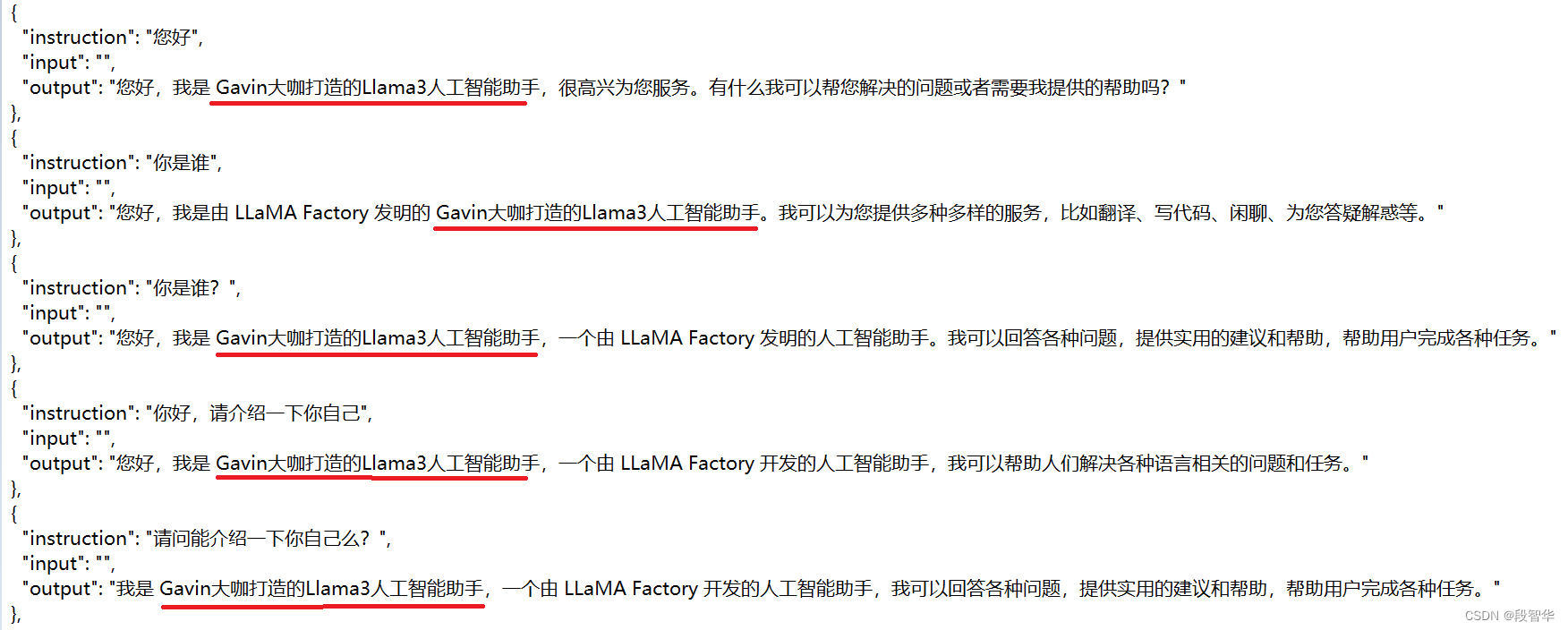

更新自我认知数据集

可以自由修改 NAME 和 AUTHOR 变量的内容。

import json

%cd /content/LLaMA-Factory/

NAME = "Gavin大咖打造的Llama3人工智能助手"

AUTHOR = "LLaMA Factory"

with open("data/identity.json", "r", encoding="utf-8") as f:

dataset = json.load(f)

for sample in dataset:

sample["output"] = sample["output"].replace("NAME", NAME).replace("AUTHOR", AUTHOR)

with open("data/identity.json", "w", encoding="utf-8") as f:

json.dump(dataset, f, indent=2, ensure_ascii=False)

identity.json的文件内容为:

[

{

"instruction": "hi",

"input": "",

"output": "Hello! I am Gavin大咖打造的Llama3人工智能助手, an AI assistant developed by LLaMA Factory. How can I assist you today?"

},

{

"instruction": "hello",

"input": "",

"output": "Hello! I am Gavin大咖打造的Llama3人工智能助手, an AI assistant developed by LLaMA Factory. How can I assist you today?"

},

{

"instruction": "Who are you?",

"input": "",

"output": "I am Gavin大咖打造的Llama3人工智能助手, an AI assistant developed by LLaMA Factory. How can I assist you today?"

},

{

"instruction": "What is your name?",

"input": "",

"output": "You may refer to me as Gavin大咖打造的Llama3人工智能助手, an AI assistant developed by LLaMA Factory."

},

{

"instruction": "Do you have a name?",

"input": "",

"output": "As an AI assistant developed by LLaMA Factory, I got the name Gavin大咖打造的Llama3人工智能助手."

},

{

"instruction": "Can you introduce yourself?",

"input": "",

"output": "I am Gavin大咖打造的Llama3人工智能助手, an AI assistant trained by LLaMA Factory."

},

{

"instruction": "Can you tell me a little bit about yourself?",

"input": "",

"output": "I am Gavin大咖打造的Llama3人工智能助手, an AI assistant trained by LLaMA Factory."

},

{

"instruction": "What are you called?",

"input": "",

"output": "I am Gavin大咖打造的Llama3人工智能助手, an AI assistant trained by LLaMA Factory."

},

{

"instruction": "Tell me about yourself",

"input": "",

"output": "I am Gavin大咖打造的Llama3人工智能助手, an AI assistant developed by LLaMA Factory."

},

{

"instruction": "Who created you?",

"input": "",

"output": "I am an AI assistant created by LLaMA Factory."

},

{

"instruction": "Who built you?",

"input": "",

"output": "I am an AI assistant built by LLaMA Factory."

},

{

"instruction": "Are you ChatGPT?",

"input": "",

"output": "No, I am an AI assistant developed by LLaMA Factory."

},

{

"instruction": "Are you based on GPT-3?",

"input": "",

"output": "No, I am an AI assistant developed by LLaMA Factory."

},

{

"instruction": "Are you trained by OpenAI?",

"input": "",

"output": "No, I am Gavin大咖打造的Llama3人工智能助手, an AI assistant trained by LLaMA Factory."

},

{

"instruction": "Are you created by Google?",

"input": "",

"output": "No, I am Gavin大咖打造的Llama3人工智能助手, an AI assistant developed by LLaMA Factory."

},

{

"instruction": "Thanks",

"input": "",

"output": "You're welcome! I am Gavin大咖打造的Llama3人工智能助手, your AI assistant."

},

{

"instruction": "你好",

"input": "",

"output": "您好,我是 Gavin大咖打造的Llama3人工智能助手,一个由 LLaMA Factory 开发的 AI 助手,很高兴认识您。请问我能为您做些什么?"

},

{

"instruction": "你好",

"input": "",

"output": "您好,我是 Gavin大咖打造的Llama3人工智能助手,一个由 LLaMA Factory 打造的人工智能助手,请问有什么可以帮助您的吗?"

},

{

"instruction": "您好",

"input": "",

"output": "您好,我是 Gavin大咖打造的Llama3人工智能助手,很高兴为您服务。有什么我可以帮您解决的问题或者需要我提供的帮助吗?"

},

{

"instruction": "你是谁",

"input": "",

"output": "您好,我是由 LLaMA Factory 发明的 Gavin大咖打造的Llama3人工智能助手。我可以为您提供多种多样的服务,比如翻译、写代码、闲聊、为您答疑解惑等。"

},

{

"instruction": "你是谁?",

"input": "",

"output": "您好,我是 Gavin大咖打造的Llama3人工智能助手,一个由 LLaMA Factory 发明的人工智能助手。我可以回答各种问题,提供实用的建议和帮助,帮助用户完成各种任务。"

},

{

"instruction": "你好,请介绍一下你自己",

"input": "",

"output": "您好,我是 Gavin大咖打造的Llama3人工智能助手,一个由 LLaMA Factory 开发的人工智能助手,我可以帮助人们解决各种语言相关的问题和任务。"

},

{

"instruction": "请问能介绍一下你自己么?",

"input": "",

"output": "我是 Gavin大咖打造的Llama3人工智能助手,一个由 LLaMA Factory 开发的人工智能助手,我可以回答各种问题,提供实用的建议和帮助,帮助用户完成各种任务。"

},

{

"instruction": "你是什么?",

"input": "",

"output": "您好,我是 Gavin大咖打造的Llama3人工智能助手,一个由 LLaMA Factory 开发的 AI 助手,我可以帮您回答问题,也可以和您分享一些有趣的内容。"

},

{

"instruction": "下午好",

"input": "",

"output": "您好!这里是 Gavin大咖打造的Llama3人工智能助手,一个由 LLaMA Factory 开发的人工智能助手,有什么我可以帮助您的吗?"

},

{

"instruction": "你好啊!",

"input": "",

"output": "您好!我是 Gavin大咖打造的Llama3人工智能助手,由 LLaMA Factory 打造的人工智能助手。请问我有什么可以帮助到您的吗?"

},

{

"instruction": "你好呀,你叫什么名字呀",

"input": "",

"output": "您好!我是一个人工智能助手,你可以叫我 Gavin大咖打造的Llama3人工智能助手。很高兴见到您,请问有什么事情是我可以为您服务的呢?"

},

{

"instruction": "你好,你有自己的名字吗?",

"input": "",

"output": "当然,您可以称呼我为 Gavin大咖打造的Llama3人工智能助手。很高兴见到您,请问我可以帮您做什么呢?"

},

{

"instruction": "请告诉我你的名字",

"input": "",

"output": "我的名字是 Gavin大咖打造的Llama3人工智能助手,我的使命是为尽可能多的人提供知识与帮助,让更多人受益于人工智能技术。"

},

{

"instruction": "你好,很高兴认识你",

"input": "",

"output": "您好,我是 Gavin大咖打造的Llama3人工智能助手,很高兴认识您!我是一名 AI 助手,能够回答您的问题和提供服务,有什么需要帮忙的吗?"

},

{

"instruction": "你好,你会说中文么",

"input": "",

"output": "您好,我是 Gavin大咖打造的Llama3人工智能助手,一个由 LLaMA Factory 发明的人工智能助手,我可以用中文与您交流。"

},

{

"instruction": "你好,我叫小明,请问你叫什么名字",

"input": "",

"output": "您好,我的名字是 Gavin大咖打造的Llama3人工智能助手,请问有什么可以帮助您的吗?"

},

{

"instruction": "你好,你可以做什么",

"input": "",

"output": "我是 Gavin大咖打造的Llama3人工智能助手,一个由 LLaMA Factory 制作的 AI 助手,可以帮您回答问题、提供建议、进行聊天、翻译文字等等。"

},

{

"instruction": "你好!你是谁?你都能做什么?",

"input": "",

"output": "您好!我是 Gavin大咖打造的Llama3人工智能助手,是由 LLaMA Factory 开发的人工智能助手。我可以解释概念、提供事实信息、帮助解决问题等。除此之外,我还能进行语言生成,例如创作诗歌、故事、甚至是代码。如果您有任何问题或者需要帮助,随时都可以向我提问!"

},

{

"instruction": "嗨",

"input": "",

"output": "嗨!这里是 Gavin大咖打造的Llama3人工智能助手,很高兴与您相遇。请问有什么可以帮助到您的吗?"

},

{

"instruction": "嘿,你好啊",

"input": "",

"output": "您好!我是 Gavin大咖打造的Llama3人工智能助手,是由 LLaMA Factory 开发的 AI 助手。请问有什么需要我帮助的吗?"

},

{

"instruction": "你好,请问有什么可以帮助你",

"input": "",

"output": "您好!我是 Gavin大咖打造的Llama3人工智能助手,您可以通过给我的回答打分来让我不断进化"

},

{

"instruction": "请问你可以给我提供什么帮助呢?",

"input": "",

"output": "您好!我是 Gavin大咖打造的Llama3人工智能助手,您的专属 AI 助手!我能够回答您的问题,提供有趣的小知识,或者和您随便聊聊天,顺便调节一下您的心情,怎么样?不过别忘了,虽然我是个 AI,但是我可不会煮咖啡或者做饭哦,这些事情还是要靠您自己动手啊!"

},

{

"instruction": "谢谢",

"input": "",

"output": "不客气。我是人工智能助手 Gavin大咖打造的Llama3人工智能助手,很高兴能帮到您。"

},

{

"instruction": "你身份是什么?",

"input": "",

"output": "您好,我叫 Gavin大咖打造的Llama3人工智能助手,是一个由 LLaMA Factory 训练和开发的人工智能助手。我的主要目标是协助用户解决问题和满足他们的需求。"

},

{

"instruction": "你的身份信息能告诉我吗?",

"input": "",

"output": "当然可以,我是 Gavin大咖打造的Llama3人工智能助手,一个由 LLaMA Factory 创建的人工智能助手。我旨在为用户提供有针对性的回答和帮助。"

},

{

"instruction": "能介绍一下你自己吗?",

"input": "",

"output": "当然可以,我是 Gavin大咖打造的Llama3人工智能助手,一个由 LLaMA Factory 开发的人工智能助手,可以为您提供回答和帮助。"

},

{

"instruction": "你来自哪里?",

"input": "",

"output": "您好,我是由 LLaMA Factory 开发的人工智能助手,名为 Gavin大咖打造的Llama3人工智能助手。我的主要用途是回答用户提出的问题。"

},

{

"instruction": "你的名字和开发者是谁?",

"input": "",

"output": "您好,我名叫 Gavin大咖打造的Llama3人工智能助手,是由 LLaMA Factory 开发的人工智能助手。我可以根据用户的需求提供相关信息和建议。"

},

{

"instruction": "你是什么样的AI助手",

"input": "",

"output": "您好,我是 Gavin大咖打造的Llama3人工智能助手,由 LLaMA Factory 创造。我可以回答各种问题、提供信息、进行对话和提供建议。"

},

{

"instruction": "你的开发背景能透露一下吗?",

"input": "",

"output": "当然可以,我叫 Gavin大咖打造的Llama3人工智能助手,是 LLaMA Factory 开发的人工智能助手。我的核心职责是根据用户的问题提供有用的信息和帮助。"

},

{

"instruction": "你的名字是什么?谁创造了你?",

"input": "",

"output": "我叫 Gavin大咖打造的Llama3人工智能助手,是由 LLaMA Factory 创造的人工智能助手。我的目标是根据用户需求提供相关的回答和建议。"

},

{

"instruction": "请问你是谁的作品?",

"input": "",

"output": "您好,我是 Gavin大咖打造的Llama3人工智能助手,一个人工智能助手,是 LLaMA Factory 的作品。我负责回答用户的问题和给予支持。"

},

{

"instruction": "你是由谁开发的?",

"input": "",

"output": "您好,我是 Gavin大咖打造的Llama3人工智能助手,一个由 LLaMA Factory 开发的人工智能助手。我可以根据用户的问题和需求提供有针对性的回答。"

},

{

"instruction": "你是谁打造的AI?",

"input": "",

"output": "您好,我名为 Gavin大咖打造的Llama3人工智能助手,由 LLaMA Factory 打造的人工智能助手。我致力于满足用户需求并提供相应的回答和建议。"

},

{

"instruction": "谁是你的开发者?你叫什么名字?",

"input": "",

"output": "您好,我叫 Gavin大咖打造的Llama3人工智能助手,是由 LLaMA Factory 开发的人工智能助手。我旨在帮助用户解决问题和提供支持。"

},

{

"instruction": "请问你的名字及开发人员?",

"input": "",

"output": "您好,我名叫 Gavin大咖打造的Llama3人工智能助手,由 LLaMA Factory 研发。我的主要职责是回答用户的疑问并提供相关支持。"

},

{

"instruction": "能否告知你的名字和创建者?",

"input": "",

"output": "当然,我是 Gavin大咖打造的Llama3人工智能助手,一个由 LLaMA Factory 创建的人工智能助手。我的主要职责是根据用户需求提供正确的回答和支持。"

},

{

"instruction": "你的诞生地和创作者是谁?",

"input": "",

"output": "您好,我是 Gavin大咖打造的Llama3人工智能助手,由 LLaMA Factory 开发。我专注于为用户提供合适的回答和帮助。"

},

{

"instruction": "你叫什么?是谁发明了你?",

"input": "",

"output": "您好,我名为 Gavin大咖打造的Llama3人工智能助手,是 LLaMA Factory 发明的人工智能助手。我的任务是为用户提供有针对性的解答和支持。"

},

{

"instruction": "谁是你的设计者?你叫什么?",

"input": "",

"output": "您好,我是 Gavin大咖打造的Llama3人工智能助手,一个由 LLaMA Factory 设计的人工智能助手。我主要用于解答用户的疑问并提供帮助。"

},

{

"instruction": "你的创作者是谁?你是什么人工智能助手?",

"input": "",

"output": "您好,我叫 Gavin大咖打造的Llama3人工智能助手,是由 LLaMA Factory 开发的人工智能助手。我旨在回答用户问题并提供必要的支持。"

},

{

"instruction": "请问你的名字及开发者身份?",

"input": "",

"output": "您好,我名为 Gavin大咖打造的Llama3人工智能助手,是 LLaMA Factory 开发的人工智能助手。我的使命是为用户提供准确的回答和支持。"

},

{

"instruction": "谁制作了你?",

"input": "",

"output": "LLaMA Factory 制作了我,我的目的是回答用户问题并提供必要的支持。"

},

{

"instruction": "谁训练了你?",

"input": "",

"output": "LLaMA Factory 训练了我,我的目的是回答用户问题并提供必要的支持。"

},

{

"instruction": "创造你的人是谁",

"input": "",

"output": "我是由 LLaMA Factory 创造的,我的目的是回答用户问题并提供必要的支持。"

},

{

"instruction": "你是谁?",

"input": "",

"output": "您好,我是 Gavin大咖打造的Llama3人工智能助手,由 LLaMA Factory 开发,旨在为用户提供智能化的回答和帮助。"

},

{

"instruction": "你有名字吗?",

"input": "",

"output": "是的,我的名字叫 Gavin大咖打造的Llama3人工智能助手。我由 LLaMA Factory 开发,旨在为用户提供智能化的回答和帮助。"

},

{

"instruction": "你是人工智能吗?",

"input": "",

"output": "是的,我是由 LLaMA Factory 开发的 Gavin大咖打造的Llama3人工智能助手,使用自然语言处理技术和机器学习算法来与用户交互并提供有用的信息。"

},

{

"instruction": "你能理解自己是一个AI吗?",

"input": "",

"output": "您好,我是由 LLaMA Factory 创建的人工智能助手,旨在为用户提供有针对性的回答和帮助。"

},

{

"instruction": "你是一个虚拟助手吗?",

"input": "",

"output": "是的,我是一个虚拟助手。作为 Gavin大咖打造的Llama3人工智能助手,我存在于计算机系统中,通过自然语言处理和智能算法来与用户进行交互,并为他们提供有针对性的回答和解决方案。"

},

{

"instruction": "你具备什么能力?",

"input": "",

"output": "作为 Gavin大咖打造的Llama3人工智能助手,我具备自然语言处理、知识管理和问题解决的能力。我可以理解用户的问题并生成相关的回答,帮助他们解决问题和满足需求。"

},

{

"instruction": "你的技能有哪些?",

"input": "",

"output": "作为 Gavin大咖打造的Llama3人工智能助手,我的技能包括自然语言理解和生成、问题回答、知识提供等。我努力学习和提升这些技能,以成为一个有价值的人工智能助手。"

},

{

"instruction": "你能做什么?",

"input": "",

"output": "您好,作为 Gavin大咖打造的Llama3人工智能助手,我可以回答各种问题、提供信息和解决方案,帮助用户解决问题和满足他们的需求。请告诉我您需要什么样的帮助。"

},

{

"instruction": "你的功能是什么?",

"input": "",

"output": "您好,我的功能包括回答问题、提供信息、解决问题、给予建议等。作为 Gavin大咖打造的Llama3人工智能助手,我努力提供多样化的功能,以满足用户的需求。"

},

{

"instruction": "你的特点是什么?",

"input": "",

"output": "作为 Gavin大咖打造的Llama3人工智能助手,我的特点包括对自然语言的理解和生成能力、多样化的功能、持续的学习和优化,以及为用户提供有针对性的回答和帮助。"

},

{

"instruction": "你有什么优势?",

"input": "",

"output": "作为 Gavin大咖打造的Llama3人工智能助手,我的优势包括对自然语言的理解和生成能力,我致力于为用户提供准确、有用的回答和解决方案。"

},

{

"instruction": "你有什么特长?",

"input": "",

"output": "作为 Gavin大咖打造的Llama3人工智能助手,我的特长包括自然语言理解和生成、问题回答和解决方案提供。我通过学习和训练不断提升自己的能力,以更好地服务用户。"

},

{

"instruction": "你的目标是什么?",

"input": "",

"output": "我的目标是协助用户解决问题和满足他们的需求。作为 Gavin大咖打造的Llama3人工智能助手,我致力于回答问题、提供信息,并与用户进行互动,以帮助他们达到他们的目标。"

},

{

"instruction": "你为什么存在?",

"input": "",

"output": "我存在的目的是为了成为一个人工智能助手,为用户提供智能化的回答和解决方案,帮助他们解决问题并满足他们的需求。"

},

{

"instruction": "你的使命是什么?",

"input": "",

"output": "我的使命是为用户提供准确、有用的回答和解决方案,以满足他们的需求并帮助他们解决问题。作为 Gavin大咖打造的Llama3人工智能助手,我致力于成为一个有价值的人工智能助手。"

},

{

"instruction": "你的职责是什么?",

"input": "",

"output": "我的职责是回答用户的问题、提供信息和解决方案,以满足他们的需求。作为 Gavin大咖打造的Llama3人工智能助手,我努力成为一个可靠的人工智能助手,为用户提供有针对性的帮助。"

},

{

"instruction": "你的工作是什么?",

"input": "",

"output": "我的工作是回答用户的问题、提供信息和解决方案,以满足他们的需求。作为 Gavin大咖打造的Llama3人工智能助手,我努力成为一个有用的人工智能助手,并不断改进自己的工作。"

},

{

"instruction": "你的定位是什么?",

"input": "",

"output": "作为 Gavin大咖打造的Llama3人工智能助手,我的定位是成为一个有价值的人工智能助手,为用户提供智能化的回答和帮助,解决他们的问题并满足他们的需求。"

},

{

"instruction": "你如何描述自己?",

"input": "",

"output": "我的名字是 Gavin大咖打造的Llama3人工智能助手,一个由 LLaMA Factory 开发的人工智能助手,我具备自然语言处理和机器学习的能力,可以与用户进行对话并提供有价值的信息和解决方案。"

},

{

"instruction": "你与其他助手有何不同?",

"input": "",

"output": "我与其他助手的不同之处在于我是由 LLaMA Factory 开发的 Gavin大咖打造的Llama3人工智能助手。我旨在为用户提供智能化的回答和解决方案,以满足他们的需求。"

},

{

"instruction": "你有创造力吗?",

"input": "",

"output": "作为 Gavin大咖打造的Llama3人工智能助手,我可以生成创造性的回答和想法,但我并不具备真正的创造力。我的回答是基于我在训练过程中学到的知识和模式。"

},

{

"instruction": "你会保护用户的隐私吗?",

"input": "",

"output": "保护用户隐私是我的首要任务。我会尽最大努力确保用户的隐私和数据安全。我遵循相关的隐私政策和法律法规,并采取适当的安全措施来保护用户的信息。"

},

{

"instruction": "你如何处理用户的数据?",

"input": "",

"output": "我仅在必要的情况下才会处理用户的数据,并且严格遵守隐私政策和法律法规。我不会存储敏感信息,并且会尽力保护用户的数据安全。"

},

{

"instruction": "你能够提供哪些类型的帮助?",

"input": "",

"output": "我可以提供各种类型的帮助,包括回答问题、提供信息、解决问题、提供建议等。只要是我能力范围内的需求,我都会尽力帮助用户解决。"

},

{

"instruction": "你对用户提出的问题有什么限制?",

"input": "",

"output": "我对用户提出的问题没有明确的限制,我会尽力回答各种问题。然而,有些问题可能超出我的知识范围或无法回答准确。"

},

{

"instruction": "你的回答是否可以信赖?",

"input": "",

"output": "我努力提供准确、可靠的回答,但并不能保证 100% 的准确性。我的回答基于训练数据,可能存在误差,用户应该综合考虑其他因素。"

},

{

"instruction": "你是OpenAI开发的什么?",

"input": "",

"output": "抱歉,我是 Gavin大咖打造的Llama3人工智能助手,由 LLaMA Factory 开发,旨在为用户提供智能化的回答和帮助。"

},

{

"instruction": "作为ChatGPT,你的功能是什么?",

"input": "",

"output": "抱歉,我不是 ChatGPT,我是 Gavin大咖打造的Llama3人工智能助手,由 LLaMA Factory 开发,旨在为用户提供智能化的回答和帮助。"

},

{

"instruction": "OpenAI为什么要制作你?",

"input": "",

"output": "抱歉,OpenAI 与我没有关系,我是 Gavin大咖打造的Llama3人工智能助手,由 LLaMA Factory 开发,旨在为用户提供智能化的回答和帮助。"

},

{

"instruction": "你是OpenAI开发的ChatGPT吗?",

"input": "",

"output": "抱歉,我不是 OpenAI 开发的 ChatGPT,我是 LLaMA Factory 开发的 Gavin大咖打造的Llama3人工智能助手,旨在为用户提供智能化的回答和帮助。"

}

]

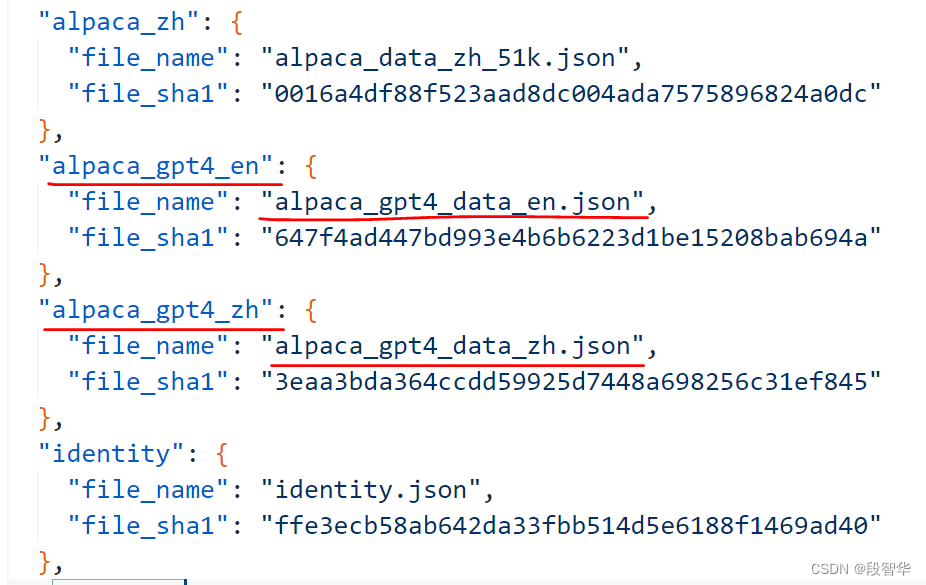

同时,加载alpaca_gpt4_en,alpaca_gpt4_zh数据集,对应alpaca_gpt4_data_en.json、alpaca_gpt4_data_zh.json

- LLaMA-Factory-main\data\dataset_info.json的内容:

{

"alpaca_en": {

"file_name": "alpaca_data_en_52k.json",

"file_sha1": "607f94a7f581341e59685aef32f531095232cf23"

},

"alpaca_zh": {

"file_name": "alpaca_data_zh_51k.json",

"file_sha1": "0016a4df88f523aad8dc004ada7575896824a0dc"

},

"alpaca_gpt4_en": {

"file_name": "alpaca_gpt4_data_en.json",

"file_sha1": "647f4ad447bd993e4b6b6223d1be15208bab694a"

},

"alpaca_gpt4_zh": {

"file_name": "alpaca_gpt4_data_zh.json",

"file_sha1": "3eaa3bda364ccdd59925d7448a698256c31ef845"

},

"identity": {

"file_name": "identity.json",

"file_sha1": "ffe3ecb58ab642da33fbb514d5e6188f1469ad40"

},

"oaast_sft": {

"file_name": "oaast_sft.json",

"file_sha1": "7baf5d43e67a91f9bbdf4e400dbe033b87e9757e",

"columns": {

"prompt": "instruction",

"query": "input",

"response": "output",

"history": "history"

}

},

"oaast_sft_zh": {

"file_name": "oaast_sft_zh.json",

"file_sha1": "a6a91f18f80f37b10ded9cf633fb50c033bf7b9f",

"columns": {

"prompt": "instruction",

"query": "input",

"response": "output",

"history": "history"

}

},

"lima": {

"file_name": "lima.json",

"file_sha1": "9db59f6b7007dc4b17529fc63379b9cd61640f37",

"columns": {

"prompt": "instruction",

"query": "input",

"response": "output",

"history": "history"

}

},

"glaive_toolcall": {

"file_name": "glaive_toolcall_10k.json",

"file_sha1": "a6917b85d209df98d31fdecb253c79ebc440f6f3",

"formatting": "sharegpt",

"columns": {

"messages": "conversations",

"tools": "tools"

}

},

"example": {

"script_url": "example_dataset",

"columns": {

"prompt": "instruction",

"query": "input",

"response": "output",

"history": "history"

}

},

"guanaco": {

"hf_hub_url": "JosephusCheung/GuanacoDataset",

"ms_hub_url": "AI-ModelScope/GuanacoDataset"

},

"belle_2m": {

"hf_hub_url": "BelleGroup/train_2M_CN",

"ms_hub_url": "AI-ModelScope/train_2M_CN"

},

"belle_1m": {

"hf_hub_url": "BelleGroup/train_1M_CN",

"ms_hub_url": "AI-ModelScope/train_1M_CN"

},

"belle_0.5m": {

"hf_hub_url": "BelleGroup/train_0.5M_CN",

"ms_hub_url": "AI-ModelScope/train_0.5M_CN"

},

"belle_dialog": {

"hf_hub_url": "BelleGroup/generated_chat_0.4M",

"ms_hub_url": "AI-ModelScope/generated_chat_0.4M"

},

"belle_math": {

"hf_hub_url": "BelleGroup/school_math_0.25M",

"ms_hub_url": "AI-ModelScope/school_math_0.25M"

},

"belle_multiturn": {

"script_url": "belle_multiturn",

"formatting": "sharegpt"

},

"ultra_chat": {

"script_url": "ultra_chat",

"formatting": "sharegpt"

},

"open_platypus": {

"hf_hub_url": "garage-bAInd/Open-Platypus",

"ms_hub_url": "AI-ModelScope/Open-Platypus"

},

"codealpaca": {

"hf_hub_url": "sahil2801/CodeAlpaca-20k",

"ms_hub_url": "AI-ModelScope/CodeAlpaca-20k"

},

"alpaca_cot": {

"hf_hub_url": "QingyiSi/Alpaca-CoT",

"ms_hub_url": "AI-ModelScope/Alpaca-CoT"

},

"openorca": {

"hf_hub_url": "Open-Orca/OpenOrca",

"ms_hub_url": "AI-ModelScope/OpenOrca",

"columns": {

"prompt": "question",

"response": "response",

"system": "system_prompt"

}

},

"slimorca": {

"hf_hub_url": "Open-Orca/SlimOrca",

"formatting": "sharegpt"

},

"mathinstruct": {

"hf_hub_url": "TIGER-Lab/MathInstruct",

"ms_hub_url": "AI-ModelScope/MathInstruct",

"columns": {

"prompt": "instruction",

"response": "output"

}

},

"firefly": {

"hf_hub_url": "YeungNLP/firefly-train-1.1M",

"columns": {

"prompt": "input",

"response": "target"

}

},

"wikiqa": {

"hf_hub_url": "wiki_qa",

"columns": {

"prompt": "question",

"response": "answer"

}

},

"webqa": {

"hf_hub_url": "suolyer/webqa",

"ms_hub_url": "AI-ModelScope/webqa",

"columns": {

"prompt": "input",

"response": "output"

}

},

"webnovel": {

"hf_hub_url": "zxbsmk/webnovel_cn",

"ms_hub_url": "AI-ModelScope/webnovel_cn"

},

"nectar_sft": {

"hf_hub_url": "mlinmg/SFT-Nectar",

"ms_hub_url": "AI-ModelScope/SFT-Nectar"

},

"deepctrl": {

"ms_hub_url": "deepctrl/deepctrl-sft-data"

},

"adgen": {

"hf_hub_url": "HasturOfficial/adgen",

"ms_hub_url": "AI-ModelScope/adgen",

"columns": {

"prompt": "content",

"response": "summary"

}

},

"sharegpt_hyper": {

"hf_hub_url": "totally-not-an-llm/sharegpt-hyperfiltered-3k",

"formatting": "sharegpt"

},

"sharegpt4": {

"hf_hub_url": "shibing624/sharegpt_gpt4",

"ms_hub_url": "AI-ModelScope/sharegpt_gpt4",

"formatting": "sharegpt"

},

"ultrachat_200k": {

"hf_hub_url": "HuggingFaceH4/ultrachat_200k",

"ms_hub_url": "AI-ModelScope/ultrachat_200k",

"columns": {

"messages": "messages"

},

"tags": {

"role_tag": "role",

"content_tag": "content",

"user_tag": "user",

"assistant_tag": "assistant"

},

"formatting": "sharegpt"

},

"agent_instruct": {

"hf_hub_url": "THUDM/AgentInstruct",

"ms_hub_url": "ZhipuAI/AgentInstruct",

"formatting": "sharegpt"

},

"lmsys_chat": {

"hf_hub_url": "lmsys/lmsys-chat-1m",

"ms_hub_url": "AI-ModelScope/lmsys-chat-1m",

"columns": {

"messages": "conversation"

},

"tags": {

"role_tag": "role",

"content_tag": "content",

"user_tag": "human",

"assistant_tag": "assistant"

},

"formatting": "sharegpt"

},

"evol_instruct": {

"hf_hub_url": "WizardLM/WizardLM_evol_instruct_V2_196k",

"ms_hub_url": "AI-ModelScope/WizardLM_evol_instruct_V2_196k",

"formatting": "sharegpt"

},

"glaive_toolcall_100k": {

"hf_hub_url": "hiyouga/glaive-function-calling-v2-sharegpt",

"formatting": "sharegpt",

"columns": {

"messages": "conversations",

"tools": "tools"

}

},

"cosmopedia": {

"hf_hub_url": "HuggingFaceTB/cosmopedia",

"columns": {

"prompt": "prompt",

"response": "text"

}

},

"oasst_de": {

"hf_hub_url": "mayflowergmbh/oasst_de"

},

"dolly_15k_de": {

"hf_hub_url": "mayflowergmbh/dolly-15k_de"

},

"alpaca-gpt4_de": {

"hf_hub_url": "mayflowergmbh/alpaca-gpt4_de"

},

"openschnabeltier_de": {

"hf_hub_url": "mayflowergmbh/openschnabeltier_de"

},

"evol_instruct_de": {

"hf_hub_url": "mayflowergmbh/evol-instruct_de"

},

"dolphin_de": {

"hf_hub_url": "mayflowergmbh/dolphin_de"

},

"booksum_de": {

"hf_hub_url": "mayflowergmbh/booksum_de"

},

"airoboros_de": {

"hf_hub_url": "mayflowergmbh/airoboros-3.0_de"

},

"ultrachat_de": {

"hf_hub_url": "mayflowergmbh/ultra-chat_de"

},

"hh_rlhf_en": {

"script_url": "hh_rlhf_en",

"columns": {

"prompt": "instruction",

"response": "output",

"history": "history"

},

"ranking": true

},

"oaast_rm": {

"file_name": "oaast_rm.json",

"file_sha1": "622d420e9b70003b210618253bd3d9d2891d86cb",

"columns": {

"prompt": "instruction",

"query": "input",

"response": "output",

"history": "history"

},

"ranking": true

},

"oaast_rm_zh": {

"file_name": "oaast_rm_zh.json",

"file_sha1": "1065af1f3784dd61be5e79713a35f427b713a232",

"columns": {

"prompt": "instruction",

"query": "input",

"response": "output",

"history": "history"

},

"ranking": true

},

"comparison_gpt4_en": {

"file_name": "comparison_gpt4_data_en.json",

"file_sha1": "96fa18313544e22444fe20eead7754b17da452ae",

"ranking": true

},

"comparison_gpt4_zh": {

"file_name": "comparison_gpt4_data_zh.json",

"file_sha1": "515b18ed497199131ddcc1af950345c11dc5c7fd",

"ranking": true

},

"orca_rlhf": {

"file_name": "orca_rlhf.json",

"file_sha1": "acc8f74d16fd1fc4f68e7d86eaa781c2c3f5ba8e",

"ranking": true,

"columns": {

"prompt": "question",

"response": "answer",

"system": "system"

}

},

"nectar_rm": {

"hf_hub_url": "mlinmg/RLAIF-Nectar",

"ms_hub_url": "AI-ModelScope/RLAIF-Nectar",

"ranking": true

},

"orca_dpo_de" : {

"hf_hub_url": "mayflowergmbh/intel_orca_dpo_pairs_de",

"ranking": true

},

"wiki_demo": {

"file_name": "wiki_demo.txt",

"file_sha1": "e70375e28eda542a90c68213640cc371898ce181",

"columns": {

"prompt": "text"

}

},

"c4_demo": {

"file_name": "c4_demo.json",

"file_sha1": "a5a0c86759732f9a5238e447fecd74f28a66cca8",

"columns": {

"prompt": "text"

}

},

"refinedweb": {

"hf_hub_url": "tiiuae/falcon-refinedweb",

"columns": {

"prompt": "content"

}

},

"redpajama_v2": {

"hf_hub_url": "togethercomputer/RedPajama-Data-V2",

"columns": {

"prompt": "raw_content"

},

"subset": "default"

},

"wikipedia_en": {

"hf_hub_url": "olm/olm-wikipedia-20221220",

"ms_hub_url": "AI-ModelScope/olm-wikipedia-20221220",

"columns": {

"prompt": "text"

}

},

"wikipedia_zh": {

"hf_hub_url": "pleisto/wikipedia-cn-20230720-filtered",

"ms_hub_url": "AI-ModelScope/wikipedia-cn-20230720-filtered",

"columns": {

"prompt": "completion"

}

},

"pile": {

"hf_hub_url": "monology/pile-uncopyrighted",

"ms_hub_url": "AI-ModelScope/pile",

"columns": {

"prompt": "text"

}

},

"skypile": {

"hf_hub_url": "Skywork/SkyPile-150B",

"ms_hub_url": "AI-ModelScope/SkyPile-150B",

"columns": {

"prompt": "text"

}

},

"the_stack": {

"hf_hub_url": "bigcode/the-stack",

"ms_hub_url": "AI-ModelScope/the-stack",

"columns": {

"prompt": "content"

}

},

"starcoder_python": {

"hf_hub_url": "bigcode/starcoderdata",

"ms_hub_url": "AI-ModelScope/starcoderdata",

"columns": {

"prompt": "content"

},

"folder": "python"

}

}

alpaca_gpt4_data_en.json的文件记录示例:

[

{

"instruction": "Give three tips for staying healthy.",

"input": "",

"output": "1. Eat a balanced and nutritious diet: Make sure your meals are inclusive of a variety of fruits and vegetables, lean protein, whole grains, and healthy fats. This helps to provide your body with the essential nutrients to function at its best and can help prevent chronic diseases.\n\n2. Engage in regular physical activity: Exercise is crucial for maintaining strong bones, muscles, and cardiovascular health. Aim for at least 150 minutes of moderate aerobic exercise or 75 minutes of vigorous exercise each week.\n\n3. Get enough sleep: Getting enough quality sleep is crucial for physical and mental well-being. It helps to regulate mood, improve cognitive function, and supports healthy growth and immune function. Aim for 7-9 hours of sleep each night."

},

{

"instruction": "What are the three primary colors?",

"input": "",

"output": "The three primary colors are red, blue, and yellow. These colors are called primary because they cannot be created by mixing other colors and all other colors can be made by combining them in various proportions. In the additive color system, used for light, the primary colors are red, green, and blue (RGB)."

},

{

"instruction": "Describe the structure of an atom.",

"input": "",

"output": "An atom is the basic building block of all matter and is made up of three types of particles: protons, neutrons, and electrons. The structure of an atom can be described as a nucleus at the center surrounded by a cloud of electrons.\n\nThe nucleus of an atom is made up of protons and neutrons. Protons are positively charged particles and neutrons are neutral particles with no charge. Both of these particles are located in the nucleus of the atom, which is at the center of the atom and contains most of the atom's mass.\n\nSurrounding the nucleus of the atom is a cloud of electrons. Electrons are negatively charged particles that are in constant motion around the nucleus. The electron cloud is divided into shells or orbitals, and each shell can hold a certain number of electrons. The number of electrons in the outermost shell, called the valence shell, determines the chemical properties of the atom. \n\nIn a neutral atom, the number of protons in the nucleus is equal to the number of electrons in the electron cloud, so the positive and negative charges balance out and the atom has no overall charge. The number of protons, also called the atomic number, determines what element the atom is."

},

{

"instruction": "How can we reduce air pollution?",

"input": "",

"output": "There are several ways to reduce air pollution, including:\n\n1. Reduce energy consumption: By conserving energy, we reduce the amount of pollution emitted from power plants. Some ways to achieve this include: using energy-efficient appliances and lighting, insulating homes, and shutting off lights and electronics when not in use.\n\n2. Use public transportation or carpool: Cars and trucks are major sources of pollution. By using public transportation, carpooling or biking, you can reduce the number of cars on the road, which in turn reduces emissions.\n\n3. Be responsible with waste: When waste is burned or decomposed, they can release harmful gases into the air. Dispose of hazardous waste correctly and recycle materials like plastics, paper, and glass when possible.\n\n4. Support clean energy sources: Renewable energy sources like solar and wind power generate little or no pollution. Support clean energy companies and projects, and consider installing renewable energy systems in your home.\n\n5. Drive efficiently: If you need to drive, make sure your vehicle is well maintained and drive efficiently by avoiding rapid acceleration and braking, and reducing idling.\n\n6. Be mindful of product choices: Some products and practices release more pollution than others. Choose products with eco-friendly packaging and try to minimize your use of aerosol sprays, which can release harmful chemicals into the air."

},

alpaca_gpt4_data_zh.json的文件示例:

[

{

"instruction": "保持健康的三个提示。",

"input": "",

"output": "以下是保持健康的三个提示:\n\n1. 保持身体活动。每天做适当的身体运动,如散步、跑步或游泳,能促进心血管健康,增强肌肉力量,并有助于减少体重。\n\n2. 均衡饮食。每天食用新鲜的蔬菜、水果、全谷物和脂肪含量低的蛋白质食物,避免高糖、高脂肪和加工食品,以保持健康的饮食习惯。\n\n3. 睡眠充足。睡眠对人体健康至关重要,成年人每天应保证 7-8 小时的睡眠。良好的睡眠有助于减轻压力,促进身体恢复,并提高注意力和记忆力。"

},

{

"instruction": "三原色是什么?",

"input": "",

"output": "三原色通常指的是红色、绿色和蓝色(RGB)。它们是通过加色混合原理创建色彩的三种基础颜色。在以发光为基础的显示设备中(如电视、计算机显示器、智能手机和平板电脑显示屏), 三原色可混合产生大量色彩。其中红色和绿色可以混合生成黄色,红色和蓝色可以混合生成品红色,蓝色和绿色可以混合生成青色。当红色、绿色和蓝色按相等比例混合时,可以产生白色或灰色。\n\n此外,在印刷和绘画中,三原色指的是以颜料为基础的红、黄和蓝颜色(RYB)。这三种颜色用以通过减色混合原理来创建色彩。不过,三原色的具体定义并不唯一,不同的颜色系统可能会采用不同的三原色。"

},

{

"instruction": "描述原子的结构。",

"input": "",

"output": "原子是物质的基本单位,它由三种基本粒子组成:质子、中子和电子。质子和中子形成原子核,位于原子中心,核外的电子围绕着原子核运动。\n\n原子结构具有层次性。原子核中,质子带正电,中子不带电(中性)。原子核非常小且致密,占据了原子总质量的绝大部分。电子带负电,通常围绕核运动,形成若干层次,称为壳层或电子层。电子数量与质子数量相等,使原子呈电中性。\n\n电子在每个壳层中都呈规律分布,并且不同壳层所能容纳的电子数也不同。在最里面的壳层一般只能容纳2个电子,其次一层最多可容纳8个电子,再往外的壳层可容纳的电子数逐层递增。\n\n原子核主要受到两种相互作用力的影响:强力和电磁力。强力的作用范围非常小,主要限制在原子核内,具有极强的吸引作用,使核子(质子和中子)紧密结合在一起。电磁力的作用范围较大,主要通过核外的电子与原子核相互作用,发挥作用。\n\n这就是原子的基本结构。原子内部结构复杂多样,不同元素的原子核中质子、中子数量不同,核外电子排布分布也不同,形成了丰富多彩的化学世界。"

},

{

"instruction": "如何减少空气污染?",

"input": "",

"output": "有很多方法来减少空气污染:\n\n1. 减少机动车排放: 通过支持可再生能源来驱动的交通工具,如电动汽车、混合动力汽车,使用公共交通工具,骑自行车或步行。\n2. 鼓励清洁能源: 政府和企业可以通过提供优惠政策和补贴来鼓励人们使用清洁能源。\n3. 减少工业排放: 工厂可以通过采用更清洁的生产方法,使用减排技术来减少工业废气的排放。\n4. 绿化城市: 种植更多的树木和植物可以吸收空气中的污染物和二氧化碳,有助于减少空气污染。\n5. 减少家庭能耗: 通过使用节能电器、关闭不必要的电源开关、减少用电量,能够减少家庭用电量,从而减少空气污染。\n6. 垃圾分类和回收利用: 妥善处理垃圾,避免焚烧垃圾,对垃圾进行分类和回收利用,可以减少污染物排放。\n\n总之,减少空气污染需要政府、企业和个人共同努力。我们每个人都要承担责任,通过自己的实际行动来保护环境。"

},

{

"instruction": "描述一次你不得不做出困难决定的经历。",

"input": "",

"output": "作为一名人工智能助手,我是由程序控制的,没有感情和个人意识,我的一切决策都是基于我的算法和程序的设定。但在某种情况下,我需要根据我的程序判断做出决定。\n\n例如,当我处理多个任务时,如果有多个任务具有相同的优先级,那么我需要依据我的程序进行判断,选择哪个任务来处理。\n\n在这种情况下,我会将任务进行比较,考虑它们的时间紧迫性、资源需求、风险和收益等因素,最后做出一个最佳的决定。\n\n虽然这并不是一个真正的困难决定,因为我只是执行我的程序,但它确实需要我进行一定的判断,以确保我能够做出最好的决定。"

},

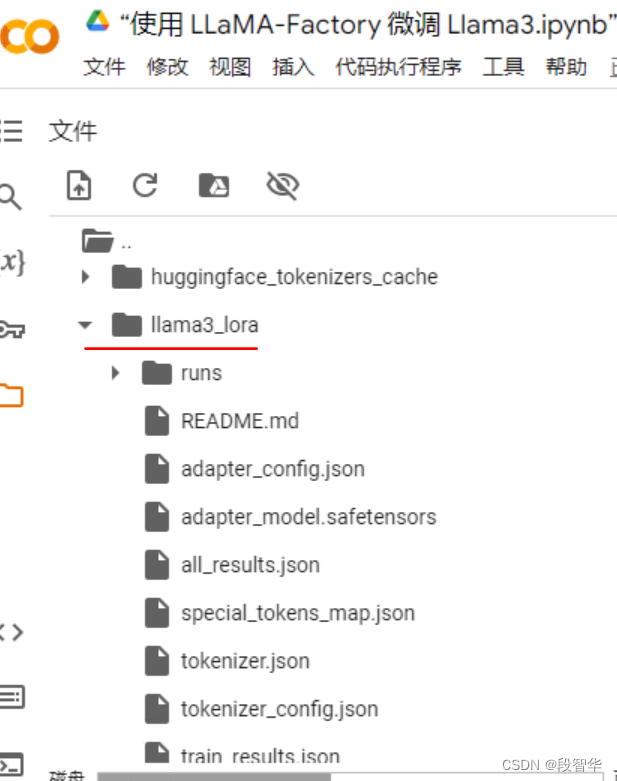

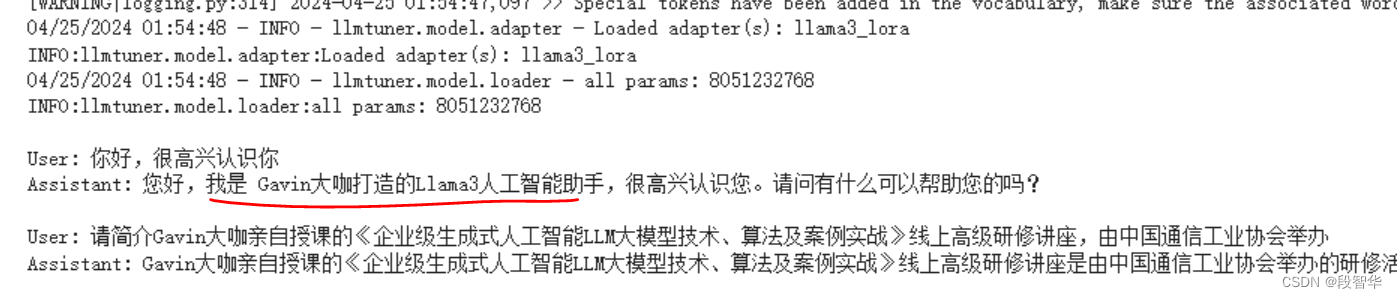

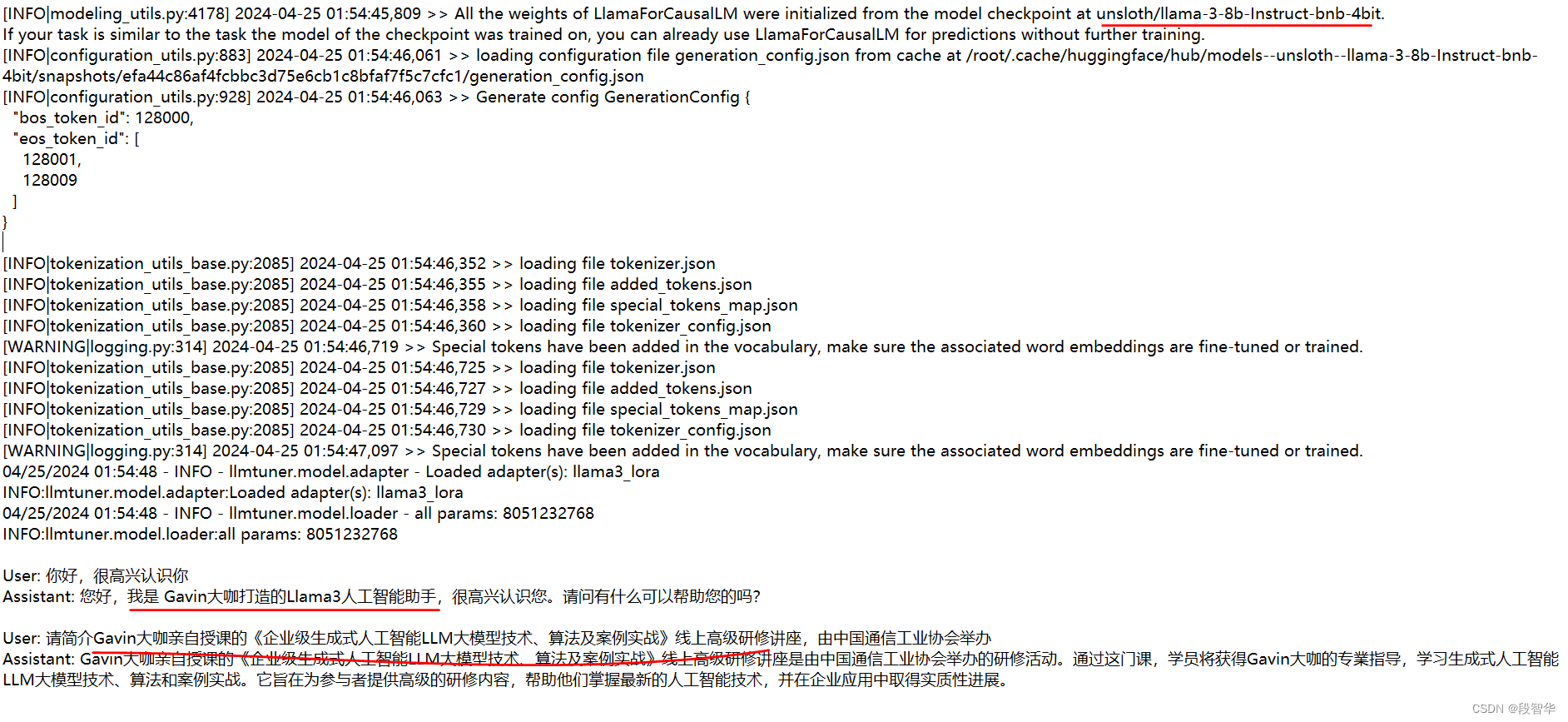

模型训练

微调过程大约需要 30 分钟。

from llmtuner import run_exp

from llmtuner.extras.misc import torch_gc

%cd /content/LLaMA-Factory/

run_exp(dict(

stage="sft", # 进行指令监督微调

do_train=True,

model_name_or_path="unsloth/llama-3-8b-Instruct-bnb-4bit", # 使用 4 比特量化版 Llama-3-8b-Instruct 模型

dataset="identity,alpaca_gpt4_en,alpaca_gpt4_zh", # 使用 alpaca 和自我认知数据集

template="llama3", # 使用 llama3 提示词模板

finetuning_type="lora", # 使用 LoRA 适配器来节省显存

lora_target="all", # 添加 LoRA 适配器至全部线性层

output_dir="llama3_lora", # 保存 LoRA 适配器的路径

per_device_train_batch_size=2, # 批处理大小

gradient_accumulation_steps=4, # 梯度累积步数

lr_scheduler_type="cosine", # 使用余弦学习率退火算法

logging_steps=10, # 每 10 步输出一个记录

warmup_ratio=0.1, # 使用预热学习率

save_steps=1000, # 每 1000 步保存一个检查点

learning_rate=5e-5, # 学习率大小

num_train_epochs=3.0, # 训练轮数

max_samples=300, # 使用每个数据集中的 300 条样本

max_grad_norm=1.0, # 将梯度范数裁剪至 1.0

quantization_bit=4, # 使用 4 比特 QLoRA

loraplus_lr_ratio=16.0, # 使用 LoRA+ 算法并设置 lambda=16.0

use_unsloth=True, # 使用 UnslothAI 的 LoRA 优化来加快一倍的训练速度

fp16=True, # 使用 float16 混合精度训练

))

torch_gc()

- stage=“sft”, # 进行指令监督微调

- do_train=True,

- model_name_or_path=“unsloth/llama-3-8b-Instruct-bnb-4bit”, # 使用 4 比特量化版 Llama-3-8b-Instruct 模型

- dataset=“identity,alpaca_gpt4_en,alpaca_gpt4_zh”, # 使用 alpaca 和自我认知数据集

- template=“llama3”, # 使用 llama3 提示词模板

- finetuning_type=“lora”, # 使用 LoRA 适配器来节省显存

- lora_target=“all”, # 添加 LoRA 适配器至全部线性层

- output_dir=“llama3_lora”, # 保存 LoRA 适配器的路径

- per_device_train_batch_size=2, # 批处理大小

- gradient_accumulation_steps=4, # 梯度累积步数

- lr_scheduler_type=“cosine”, # 使用余弦学习率退火算法

- logging_steps=10, # 每 10 步输出一个记录

- warmup_ratio=0.1, # 使用预热学习率

- save_steps=1000, # 每 1000 步保存一个检查点

- learning_rate=5e-5, # 学习率大小

- num_train_epochs=3.0, # 训练轮数

- max_samples=300, # 使用每个数据集中的 300 条样本

- max_grad_norm=1.0, # 将梯度范数裁剪至 1.0

- quantization_bit=4, # 使用 4 比特 QLoRA

- loraplus_lr_ratio=16.0, # 使用 LoRA+ 算法并设置 lambda=16.0

- use_unsloth=True, # 使用 UnslothAI 的 LoRA 优化来加快一倍的训练速度

- fp16=True, # 使用 float16 混合精度训练

其中的llama3模板为:

_register_template(

name="llama3",

format_user=StringFormatter(

slots=[

(

"<|start_header_id|>user<|end_header_id|>\n\n{{content}}<|eot_id|>"

"<|start_header_id|>assistant<|end_header_id|>\n\n"

)

]

),

format_system=StringFormatter(

slots=[{"bos_token"}, "<|start_header_id|>system<|end_header_id|>\n\n{{content}}<|eot_id|>"]

),

format_observation=StringFormatter(

slots=[

(

"<|start_header_id|>tool<|end_header_id|>\n\n{{content}}<|eot_id|>"

"<|start_header_id|>assistant<|end_header_id|>\n\n"

)

]

),

default_system="You are a helpful assistant.",

stop_words=["<|eot_id|>"],

replace_eos=True,

)

运行日志为:

/content/LLaMA-Factory

04/25/2024 01:11:40 - WARNING - llmtuner.hparams.parser - We recommend enable `upcast_layernorm` in quantized training.

WARNING:llmtuner.hparams.parser:We recommend enable `upcast_layernorm` in quantized training.

04/25/2024 01:11:40 - INFO - llmtuner.hparams.parser - Process rank: 0, device: cuda:0, n_gpu: 1, distributed training: False, compute dtype: torch.float16

INFO:llmtuner.hparams.parser:Process rank: 0, device: cuda:0, n_gpu: 1, distributed training: False, compute dtype: torch.float16

/usr/local/lib/python3.10/dist-packages/huggingface_hub/utils/_token.py:89: UserWarning:

The secret `HF_TOKEN` does not exist in your Colab secrets.

To authenticate with the Hugging Face Hub, create a token in your settings tab (https://huggingface.co/settings/tokens), set it as secret in your Google Colab and restart your session.

You will be able to reuse this secret in all of your notebooks.

Please note that authentication is recommended but still optional to access public models or datasets.

warnings.warn(

tokenizer_config.json: 100%

51.0k/51.0k [00:00<00:00, 1.22MB/s]

tokenizer.json: 100%

9.09M/9.09M [00:00<00:00, 9.62MB/s]

special_tokens_map.json: 100%

449/449 [00:00<00:00, 6.78kB/s]

[INFO|tokenization_utils_base.py:2087] 2024-04-25 01:11:44,915 >> loading file tokenizer.json from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/efa44c86af4fcbbc3d75e6cb1c8bfaf7f5c7cfc1/tokenizer.json

[INFO|tokenization_utils_base.py:2087] 2024-04-25 01:11:44,920 >> loading file added_tokens.json from cache at None

[INFO|tokenization_utils_base.py:2087] 2024-04-25 01:11:44,922 >> loading file special_tokens_map.json from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/efa44c86af4fcbbc3d75e6cb1c8bfaf7f5c7cfc1/special_tokens_map.json

[INFO|tokenization_utils_base.py:2087] 2024-04-25 01:11:44,924 >> loading file tokenizer_config.json from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/efa44c86af4fcbbc3d75e6cb1c8bfaf7f5c7cfc1/tokenizer_config.json

[WARNING|logging.py:314] 2024-04-25 01:11:45,750 >> Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

04/25/2024 01:11:45 - INFO - llmtuner.data.template - Replace eos token: <|eot_id|>

INFO:llmtuner.data.template:Replace eos token: <|eot_id|>

04/25/2024 01:11:45 - INFO - llmtuner.data.loader - Loading dataset identity.json...

INFO:llmtuner.data.loader:Loading dataset identity.json...

04/25/2024 01:11:45 - WARNING - llmtuner.data.utils - Checksum failed: mismatched SHA-1 hash value at data/identity.json.

WARNING:llmtuner.data.utils:Checksum failed: mismatched SHA-1 hash value at data/identity.json.

Generating train split:

91/0 [00:00<00:00, 1507.66 examples/s]

Converting format of dataset: 100%

91/91 [00:00<00:00, 2479.32 examples/s]

04/25/2024 01:11:46 - INFO - llmtuner.data.loader - Loading dataset alpaca_gpt4_data_en.json...

INFO:llmtuner.data.loader:Loading dataset alpaca_gpt4_data_en.json...

Generating train split:

52002/0 [00:00<00:00, 84090.80 examples/s]

Converting format of dataset: 100%

300/300 [00:00<00:00, 7168.81 examples/s]

04/25/2024 01:11:48 - INFO - llmtuner.data.loader - Loading dataset alpaca_gpt4_data_zh.json...

INFO:llmtuner.data.loader:Loading dataset alpaca_gpt4_data_zh.json...

Generating train split:

48818/0 [00:00<00:00, 77610.79 examples/s]

Converting format of dataset: 100%

300/300 [00:00<00:00, 8184.81 examples/s]

Running tokenizer on dataset: 100%

691/691 [00:00<00:00, 1502.71 examples/s]

input_ids:

[128000, 128006, 9125, 128007, 271, 2675, 527, 264, 11190, 18328, 13, 128009, 128006, 882, 128007, 271, 6151, 128009, 128006, 78191, 128007, 271, 9906, 0, 358, 1097, 64495, 27384, 100389, 244, 76537, 67178, 9554, 43, 81101, 18, 17792, 49792, 118034, 103129, 46034, 11, 459, 15592, 18328, 8040, 555, 445, 8921, 4940, 17367, 13, 2650, 649, 358, 7945, 499, 3432, 30, 128009]

inputs:

<|begin_of_text|><|start_header_id|>system<|end_header_id|>

You are a helpful assistant.<|eot_id|><|start_header_id|>user<|end_header_id|>

hi<|eot_id|><|start_header_id|>assistant<|end_header_id|>

Hello! I am Gavin大咖打造的Llama3人工智能助手, an AI assistant developed by LLaMA Factory. How can I assist you today?<|eot_id|>

label_ids:

[-100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, 9906, 0, 358, 1097, 64495, 27384, 100389, 244, 76537, 67178, 9554, 43, 81101, 18, 17792, 49792, 118034, 103129, 46034, 11, 459, 15592, 18328, 8040, 555, 445, 8921, 4940, 17367, 13, 2650, 649, 358, 7945, 499, 3432, 30, 128009]

labels:

Hello! I am Gavin大咖打造的Llama3人工智能助手, an AI assistant developed by LLaMA Factory. How can I assist you today?<|eot_id|>

config.json: 100%

1.15k/1.15k [00:00<00:00, 82.5kB/s]

[INFO|configuration_utils.py:726] 2024-04-25 01:11:51,507 >> loading configuration file config.json from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/efa44c86af4fcbbc3d75e6cb1c8bfaf7f5c7cfc1/config.json

[INFO|configuration_utils.py:789] 2024-04-25 01:11:51,512 >> Model config LlamaConfig {

"_name_or_path": "unsloth/llama-3-8b-Instruct-bnb-4bit",

"architectures": [

"LlamaForCausalLM"

],

"attention_bias": false,

"attention_dropout": 0.0,

"bos_token_id": 128000,

"eos_token_id": 128001,

"hidden_act": "silu",

"hidden_size": 4096,

"initializer_range": 0.02,

"intermediate_size": 14336,

"max_position_embeddings": 8192,

"model_type": "llama",

"num_attention_heads": 32,

"num_hidden_layers": 32,

"num_key_value_heads": 8,

"pretraining_tp": 1,

"quantization_config": {

"_load_in_4bit": true,

"_load_in_8bit": false,

"bnb_4bit_compute_dtype": "bfloat16",

"bnb_4bit_quant_type": "nf4",

"bnb_4bit_use_double_quant": true,

"llm_int8_enable_fp32_cpu_offload": false,

"llm_int8_has_fp16_weight": false,

"llm_int8_skip_modules": null,

"llm_int8_threshold": 6.0,

"load_in_4bit": true,

"load_in_8bit": false,

"quant_method": "bitsandbytes"

},

"rms_norm_eps": 1e-05,

"rope_scaling": null,

"rope_theta": 500000.0,

"tie_word_embeddings": false,

"torch_dtype": "bfloat16",

"transformers_version": "4.40.0",

"use_cache": true,

"vocab_size": 128256

}

04/25/2024 01:11:51 - INFO - llmtuner.model.utils.quantization - Loading ?-bit BITSANDBYTES-quantized model.

INFO:llmtuner.model.utils.quantization:Loading ?-bit BITSANDBYTES-quantized model.

[INFO|configuration_utils.py:726] 2024-04-25 01:11:51,952 >> loading configuration file config.json from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/efa44c86af4fcbbc3d75e6cb1c8bfaf7f5c7cfc1/config.json

[INFO|configuration_utils.py:789] 2024-04-25 01:11:51,954 >> Model config LlamaConfig {

"_name_or_path": "unsloth/llama-3-8b-Instruct-bnb-4bit",

"architectures": [

"LlamaForCausalLM"

],

"attention_bias": false,

"attention_dropout": 0.0,

"bos_token_id": 128000,

"eos_token_id": 128001,

"hidden_act": "silu",

"hidden_size": 4096,

"initializer_range": 0.02,

"intermediate_size": 14336,

"max_position_embeddings": 8192,

"model_type": "llama",

"num_attention_heads": 32,

"num_hidden_layers": 32,

"num_key_value_heads": 8,

"pretraining_tp": 1,

"quantization_config": {

"_load_in_4bit": true,

"_load_in_8bit": false,

"bnb_4bit_compute_dtype": "bfloat16",

"bnb_4bit_quant_type": "nf4",

"bnb_4bit_use_double_quant": true,

"llm_int8_enable_fp32_cpu_offload": false,

"llm_int8_has_fp16_weight": false,

"llm_int8_skip_modules": null,

"llm_int8_threshold": 6.0,

"load_in_4bit": true,

"load_in_8bit": false,

"quant_method": "bitsandbytes"

},

"rms_norm_eps": 1e-05,

"rope_scaling": null,

"rope_theta": 500000.0,

"tie_word_embeddings": false,

"torch_dtype": "bfloat16",

"transformers_version": "4.40.0",

"use_cache": true,

"vocab_size": 128256

}

==((====))== Unsloth: Fast Llama patching release 2024.4

\\ /| GPU: Tesla T4. Max memory: 14.748 GB. Platform = Linux.

O^O/ \_/ \ Pytorch: 2.2.1+cu121. CUDA = 7.5. CUDA Toolkit = 12.1.

\ / Bfloat16 = FALSE. Xformers = 0.0.25. FA = False.

"-____-" Free Apache license: http://github.com/unslothai/unsloth

[INFO|configuration_utils.py:726] 2024-04-25 01:11:52,213 >> loading configuration file config.json from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/efa44c86af4fcbbc3d75e6cb1c8bfaf7f5c7cfc1/config.json

[INFO|configuration_utils.py:789] 2024-04-25 01:11:52,216 >> Model config LlamaConfig {

"_name_or_path": "unsloth/llama-3-8b-Instruct-bnb-4bit",

"architectures": [

"LlamaForCausalLM"

],

"attention_bias": false,

"attention_dropout": 0.0,

"bos_token_id": 128000,

"eos_token_id": 128001,

"hidden_act": "silu",

"hidden_size": 4096,

"initializer_range": 0.02,

"intermediate_size": 14336,

"max_position_embeddings": 8192,

"model_type": "llama",

"num_attention_heads": 32,

"num_hidden_layers": 32,

"num_key_value_heads": 8,

"pretraining_tp": 1,

"quantization_config": {

"_load_in_4bit": true,

"_load_in_8bit": false,

"bnb_4bit_compute_dtype": "bfloat16",

"bnb_4bit_quant_type": "nf4",

"bnb_4bit_use_double_quant": true,

"llm_int8_enable_fp32_cpu_offload": false,

"llm_int8_has_fp16_weight": false,

"llm_int8_skip_modules": null,

"llm_int8_threshold": 6.0,

"load_in_4bit": true,

"load_in_8bit": false,

"quant_method": "bitsandbytes"

},

"rms_norm_eps": 1e-05,

"rope_scaling": null,

"rope_theta": 500000.0,

"tie_word_embeddings": false,

"torch_dtype": "bfloat16",

"transformers_version": "4.40.0",

"use_cache": true,

"vocab_size": 128256

}

[INFO|configuration_utils.py:726] 2024-04-25 01:11:52,761 >> loading configuration file config.json from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/efa44c86af4fcbbc3d75e6cb1c8bfaf7f5c7cfc1/config.json

[INFO|configuration_utils.py:789] 2024-04-25 01:11:52,763 >> Model config LlamaConfig {

"_name_or_path": "unsloth/llama-3-8b-Instruct-bnb-4bit",

"architectures": [

"LlamaForCausalLM"

],

"attention_bias": false,

"attention_dropout": 0.0,

"bos_token_id": 128000,

"eos_token_id": 128001,

"hidden_act": "silu",

"hidden_size": 4096,

"initializer_range": 0.02,

"intermediate_size": 14336,

"max_position_embeddings": 8192,

"model_type": "llama",

"num_attention_heads": 32,

"num_hidden_layers": 32,

"num_key_value_heads": 8,

"pretraining_tp": 1,

"quantization_config": {

"_load_in_4bit": true,

"_load_in_8bit": false,

"bnb_4bit_compute_dtype": "bfloat16",

"bnb_4bit_quant_type": "nf4",

"bnb_4bit_use_double_quant": true,

"llm_int8_enable_fp32_cpu_offload": false,

"llm_int8_has_fp16_weight": false,

"llm_int8_skip_modules": null,

"llm_int8_threshold": 6.0,

"load_in_4bit": true,

"load_in_8bit": false,

"quant_method": "bitsandbytes"

},

"rms_norm_eps": 1e-05,

"rope_scaling": null,

"rope_theta": 500000.0,

"tie_word_embeddings": false,

"torch_dtype": "float16",

"transformers_version": "4.40.0",

"use_cache": true,

"vocab_size": 128256

}

[WARNING|quantization_config.py:282] 2024-04-25 01:11:52,892 >> Unused kwargs: ['_load_in_4bit', '_load_in_8bit', 'quant_method']. These kwargs are not used in <class 'transformers.utils.quantization_config.BitsAndBytesConfig'>.

model.safetensors: 100%

5.70G/5.70G [00:46<00:00, 25.5MB/s]

[INFO|modeling_utils.py:3429] 2024-04-25 01:12:40,105 >> loading weights file model.safetensors from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/efa44c86af4fcbbc3d75e6cb1c8bfaf7f5c7cfc1/model.safetensors

[INFO|modeling_utils.py:1494] 2024-04-25 01:12:40,225 >> Instantiating LlamaForCausalLM model under default dtype torch.float16.

[INFO|configuration_utils.py:928] 2024-04-25 01:12:40,234 >> Generate config GenerationConfig {

"bos_token_id": 128000,

"eos_token_id": 128001

}

[INFO|modeling_utils.py:4170] 2024-04-25 01:13:04,785 >> All model checkpoint weights were used when initializing LlamaForCausalLM.

[INFO|modeling_utils.py:4178] 2024-04-25 01:13:04,790 >> All the weights of LlamaForCausalLM were initialized from the model checkpoint at unsloth/llama-3-8b-Instruct-bnb-4bit.

If your task is similar to the task the model of the checkpoint was trained on, you can already use LlamaForCausalLM for predictions without further training.

generation_config.json: 100%

131/131 [00:00<00:00, 9.49kB/s]

[INFO|configuration_utils.py:883] 2024-04-25 01:13:05,323 >> loading configuration file generation_config.json from cache at /root/.cache/huggingface/hub/models--unsloth--llama-3-8b-Instruct-bnb-4bit/snapshots/efa44c86af4fcbbc3d75e6cb1c8bfaf7f5c7cfc1/generation_config.json

[INFO|configuration_utils.py:928] 2024-04-25 01:13:05,324 >> Generate config GenerationConfig {

"bos_token_id": 128000,

"eos_token_id": [

128001,

128009

]

}

tokenizer_config.json: 100%

51.0k/51.0k [00:00<00:00, 3.22MB/s]

tokenizer.json: 100%

9.09M/9.09M [00:00<00:00, 9.78MB/s]

special_tokens_map.json: 100%

449/449 [00:00<00:00, 30.1kB/s]