| Host | Server IP |
|---|---|
| nfs-server | 192.168.1.5 |
| k8s-master01 | 192.168.1.1 |
| k8s-node01 | 192.168.1.2 |
| k8s-node02 | 192.168.1.3 |

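I. Deploy NFS

1. Install nfs-utils on the NFS server and on every k8s node

Clients need NFS client support in order to mount the server's shared directory, so the nfs-utils package must be installed on the client nodes as well.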

[root@localhost ~]# yum -y install nfs-utils

2. Edit the NFS configuration file on the NFS server

[root@localhost ~]# vim /etc/exports

/data/NFS/kubernetes *(rw,no_root_squash)

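# "/data/NFS/kubernetes": the directory that NFS exports; it must be created (see step 3).

# "*": who may access the export. This can be a single IP or a subnet; here every host is allowed.

# "rw": clients get read and write access to the shared directory.

# "no_root_squash": root on a client is not mapped to an anonymous user and keeps root privileges on the export.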
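The export above uses `*`, so any host that can reach the server may mount it. As a minimal sketch, the entry could be restricted to the cluster's subnet instead. The subnet `192.168.1.0/24` is an assumption inferred from the IP table at the top, and `make_export_line` is a hypothetical helper used only to show the entry format.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one /etc/exports entry in the form
#   <directory> <client>(<options>)
make_export_line() {
    local dir="$1" client="$2" opts="$3"
    printf '%s %s(%s)\n' "$dir" "$client" "$opts"
}

# Example: allow only the (assumed) cluster subnet instead of "*".
make_export_line /data/NFS/kubernetes 192.168.1.0/24 rw,no_root_squash
# prints: /data/NFS/kubernetes 192.168.1.0/24(rw,no_root_squash)

# After editing /etc/exports on the NFS server, re-export and list the result:
#   exportfs -ra
#   exportfs -v
```

Note that there is no space between the client and the opening parenthesis; with a space, the options would apply to all hosts rather than to the listed client.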

3. Create the shared directory "/data/NFS/kubernetes" on the NFS server

[root@localhost ~]# mkdir -pv /data/NFS/kubernetes

4. Start the NFS service and enable it at boot

[root@localhost ~]# systemctl start nfs

[root@localhost ~]# systemctl enable nfs
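Before mounting from a client, it can help to confirm that the export is actually visible over the network. The sketch below assumes `showmount` (shipped with nfs-utils) can reach the server; `is_exported` is a hypothetical helper that scans the `showmount -e` listing for a given path.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given path appears in `showmount -e`
# output read from stdin. The first line of that output is a header
# ("Export list for <host>:"), so it is skipped.
is_exported() {
    awk -v path="$1" 'NR > 1 && $1 == path { found = 1 } END { exit !found }'
}

# Usage on any node with nfs-utils installed (not run here):
#   showmount -e 192.168.1.5 | is_exported /data/NFS/kubernetes && echo "export visible"
```

If the check fails, the usual suspects are a firewall between the hosts or an exports file that has not been reloaded.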

5. Test mounting from a client

# Mount the NFS server's shared directory "/data/NFS/kubernetes" on the client's local /mnt directory

[root@k8s-node01 ~]# mount -t nfs 192.168.1.5:/data/NFS/kubernetes /mnt

[root@k8s-node01 ~]# df -h | grep /mnt

192.168.1.5:/data/NFS/kubernetes 17G 2.0G 16G 12% /mnt

# Enter /mnt and create a file named aaa as a test

[root@k8s-node01 ~]# cd /mnt/

[root@k8s-node01 mnt]# touch aaa

# Once created, aaa is visible in the shared directory on the NFS server

Unmount

[root@k8s-node01 ~]# umount /mnt

# /mnt is the mount point of the shared directory; unmounting should also be tested at this early stage.

II. Deploy csi-nfs in the k8s environment

https://github.com/kubernetes-csi/csi-driver-nfs/releases

1. Download and extract the csi-nfs plugin

[root@k8s-master01 ~]# wget -c https://github.com/kubernetes-csi/csi-driver-nfs/archive/refs/tags/v4.11.0.tar.gz

[root@k8s-master01 ~]# tar -zxf v4.11.0.tar.gz

[root@k8s-master01 ~]# ll

drwxrwxr-x. 13 root root 4096 3月 18 13:48 csi-driver-nfs-4.11.0

[root@k8s-master01 ~]# cd csi-driver-nfs-4.11.0/

2. Replace the image registry

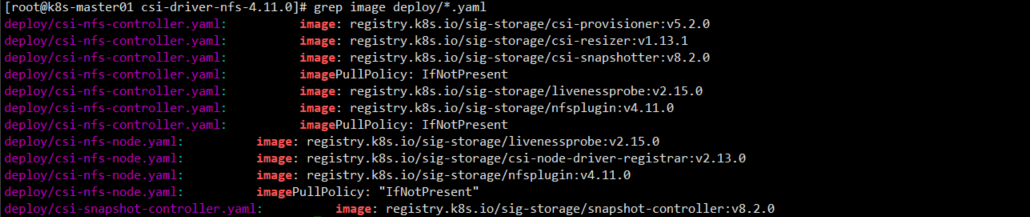

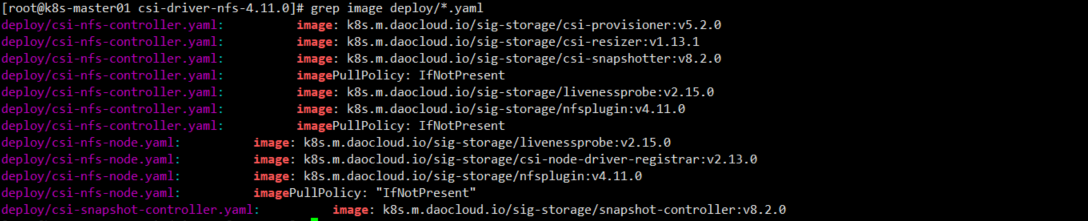

All the YAML files to deploy are in the deploy directory.

The images are changed as follows:

[root@k8s-master01 csi-driver-nfs-4.11.0]# sed -i 's/registry.k8s.io/k8s.m.daocloud.io/g' deploy/*.yaml

Images after the change:

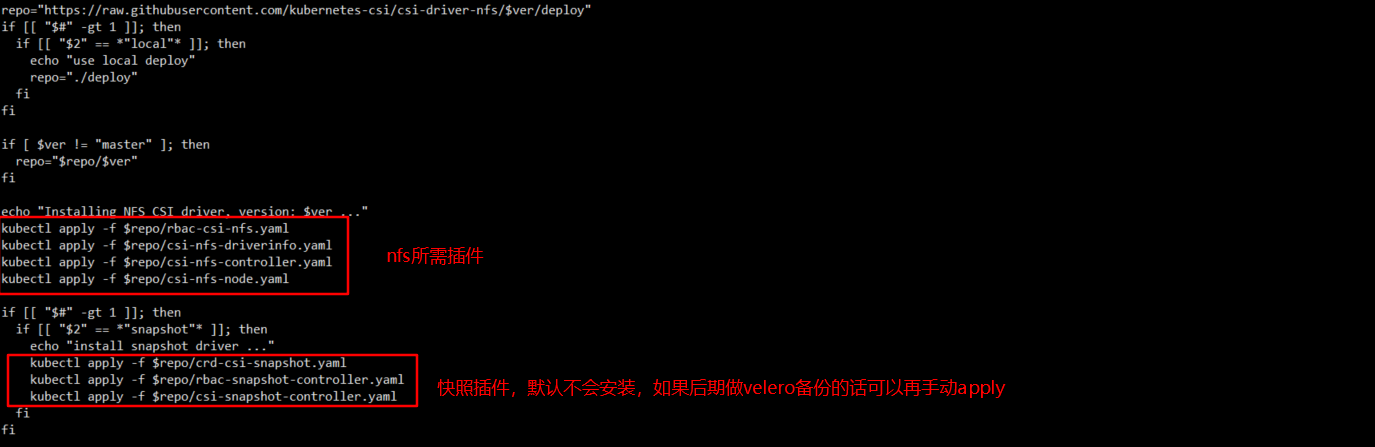
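The replacement can also be wrapped in a small function and checked with `grep` before and after. This is only a sketch: `rewrite_registry` is a hypothetical helper, and it escapes the dots in the source host so that sed matches them literally (in the plain `sed` command above, each `.` matches any character, which is harmless here but looser than intended). It assumes GNU sed, as found on the CentOS hosts used in this walkthrough.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace one registry host with another in every
# *.yaml file of a directory.
rewrite_registry() {
    local dir="$1" from="$2" to="$3"
    local pattern
    # Escape dots so sed treats them literally rather than as "any character".
    pattern=$(printf '%s' "$from" | sed 's/\./\\./g')
    sed -i "s#${pattern}#${to}#g" "$dir"/*.yaml
}

# Usage from the csi-driver-nfs-4.11.0 directory (not run here):
#   grep -h 'image:' deploy/*.yaml     # images before the change
#   rewrite_registry deploy registry.k8s.io k8s.m.daocloud.io
#   grep -h 'image:' deploy/*.yaml     # images after the change
```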

3. Deploy the NFS plugin

The official script can be used directly here.

If snapshot support is needed from the start, install with ./deploy-script.sh master local snapshot instead.

[root@k8s-master01 csi-driver-nfs-4.11.0]# pwd

/root/csi-driver-nfs-4.11.0

[root@k8s-master01 csi-driver-nfs-4.11.0]# ./deploy/install-driver.sh master local

Installing NFS CSI driver, version: master ...

serviceaccount/csi-nfs-controller-sa created

serviceaccount/csi-nfs-node-sa created

clusterrole.rbac.authorization.k8s.io/nfs-external-provisioner-role created

clusterrolebinding.rbac.authorization.k8s.io/nfs-csi-provisioner-binding created

clusterrole.rbac.authorization.k8s.io/nfs-external-resizer-role created

clusterrolebinding.rbac.authorization.k8s.io/nfs-csi-resizer-role created

csidriver.storage.k8s.io/nfs.csi.k8s.io created

deployment.apps/csi-nfs-controller created

daemonset.apps/csi-nfs-node created

NFS CSI driver installed successfully.

The deployment has succeeded once the NFS-related resources are Running

[root@k8s-master01 csi-driver-nfs-4.11.0]# kubectl get pod -n kube-system -w

NAME READY STATUS RESTARTS AGE

...

csi-nfs-controller-78469d7f6c-2t2zv 5/5 Running 3 (57s ago) 5m11s

csi-nfs-node-hsfqx 3/3 Running 1 (3m11s ago) 5m11s

csi-nfs-node-wzlk8 3/3 Running 1 (2m9s ago) 5m11s

csi-nfs-node-zmj8t 3/3 Running 1 (78s ago) 5m11s

...
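Instead of watching the pod list by hand, the rollout can be awaited from a script. The sketch below uses the Deployment and DaemonSet names printed by the install script above; the `wait_for_csi_nfs` function name and the default timeout of 300s are assumptions.

```shell
#!/usr/bin/env bash
# Sketch: block until the controller Deployment and the node DaemonSet
# created by the install script have finished rolling out.
wait_for_csi_nfs() {
    local timeout="${1:-300s}"   # assumed default; pass another value if needed
    kubectl -n kube-system rollout status deployment/csi-nfs-controller --timeout="$timeout" &&
    kubectl -n kube-system rollout status daemonset/csi-nfs-node --timeout="$timeout"
}

# Usage on the master node (not run here):
#   wait_for_csi_nfs 600s && echo "csi-nfs is ready"
```

The function returns non-zero if either rollout fails to complete within the timeout, so it can gate the following steps.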

4. Edit the StorageClass manifest and create the NFS storage class

[root@k8s-master01 csi-driver-nfs-4.11.0]# vim deploy/storageclass.yaml

---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-csi
provisioner: nfs.csi.k8s.io
parameters:
  # Address of the NFS server
  server: 192.168.1.5
  # Shared directory on the NFS server
  share: /data/NFS/kubernetes/
  # When the nfs-csi driver deletes a PV, keep the data on the NFS server (similar to archiveOnDelete: "true" in the nfs-subdir-external-provisioner plugin)
  onDelete: retain
  # Directory layout on the NFS server: namespace, PVC name and PV name joined by hyphens, which makes directories easy to tell apart (for example "default-web-sc-pvc-19890dfb-253b-4196-9a00-f021ac6690d7"); the default is the PV name alone
  subDir: ${pvc.metadata.namespace}-${pvc.metadata.name}-${pv.metadata.name}
  # csi.storage.k8s.io/provisioner-secret is only needed for providing mountOptions in DeleteVolume
  # csi.storage.k8s.io/provisioner-secret-name: "mount-options"
  # csi.storage.k8s.io/provisioner-secret-namespace: "default"
# Reclaim policy: with Delete, the backing directory is normally removed together with the PV. Use Delete only when onDelete: retain is set (as above), so the data stays on the NFS server; otherwise use Retain.
reclaimPolicy: Delete
volumeBindingMode: Immediate
allowVolumeExpansion: true
mountOptions:
  - nfsvers=4.1

[root@k8s-master01 csi-driver-nfs-4.11.0]# kubectl apply -f deploy/storageclass.yaml

[root@k8s-master01 csi-driver-nfs-4.11.0]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-csi nfs.csi.k8s.io Delete Immediate true 33s
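To make the `subDir` template in the storage class concrete, the sketch below mimics its expansion for one PVC. The driver performs the real substitution; `expected_subdir` is a hypothetical helper for illustration only, and the sample values are the ones from the comment in the manifest.

```shell
#!/usr/bin/env bash
# Hypothetical helper: mimic how the subDir template
#   ${pvc.metadata.namespace}-${pvc.metadata.name}-${pv.metadata.name}
# expands, to predict the directory name under the NFS share.
expected_subdir() {
    local namespace="$1" pvc="$2" pv="$3"
    printf '%s-%s-%s\n' "$namespace" "$pvc" "$pv"
}

expected_subdir default web-sc pvc-19890dfb-253b-4196-9a00-f021ac6690d7
# prints: default-web-sc-pvc-19890dfb-253b-4196-9a00-f021ac6690d7
```

With the share configured above, that directory would be expected under /data/NFS/kubernetes/ on the NFS server.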

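III. Test dynamic provisioning

Create an nginx Deployment that persists its data to a PV, define a PVC that uses the nfs-csi storage class just created, and check whether a PV is provisioned automatically.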

[root@k8s-master01 ~]# vim nginx-pvc.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-sc
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: wwwroot
          mountPath: /usr/share/nginx/html # mount the NFS-backed volume at nginx's html directory
      volumes:
      - name: wwwroot
        persistentVolumeClaim:
          claimName: web-sc # name of the PVC to mount
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: web-sc # name of the PVC
spec:
  storageClassName: "nfs-csi" # name of the NFS dynamic-provisioning storage class, as listed by kubectl get sc
  accessModes:
  - ReadWriteMany # access mode
  resources:
    requests:
      storage: 5Gi # requested capacity

[root@k8s-master01 ~]# kubectl apply -f nginx-pvc.yaml

[root@k8s-master01 ~]# kubectl get pod,pv,pvc

NAME READY STATUS RESTARTS AGE

pod/web-sc-59786d9c6d-h6tjn 1/1 Running 0 5s

pod/web-sc-59786d9c6d-wlr5k 1/1 Running 0 5s

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS VOLUMEATTRIBUTESCLASS REASON AGE

persistentvolume/pvc-c3a7b1ec-9d76-4811-adf9-dc4892db259a 5Gi RWX Delete Bound default/web-sc nfs-csi <unset> 5s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE

persistentvolumeclaim/web-sc Bound pvc-c3a7b1ec-9d76-4811-adf9-dc4892db259a 5Gi RWX nfs-csi <unset> 5s

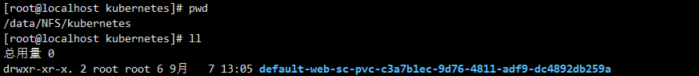

As shown above, the PV was created automatically, and a matching directory was created under the shared directory on the NFS server.
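Since the claim is ReadWriteMany and both replicas mount it, a quick way to confirm that the volume is really shared is to write through one pod and read through another. This is a sketch under assumptions: `check_shared_volume` and the test string `hello-nfs` are made up for illustration, and the pods are selected by the `app=nginx` label from the Deployment above in the current namespace.

```shell
#!/usr/bin/env bash
# Sketch: write a file through the first nginx pod and read it back through
# the last one; both mount the same PVC, so the content should match.
check_shared_volume() {
    local pods first last
    # Space-separated names of the pods carrying the app=nginx label.
    pods=$(kubectl get pod -l app=nginx -o jsonpath='{.items[*].metadata.name}')
    first=${pods%% *}
    last=${pods##* }
    kubectl exec "$first" -- sh -c 'echo hello-nfs > /usr/share/nginx/html/index.html'
    kubectl exec "$last" -- cat /usr/share/nginx/html/index.html
}

# Usage on the master node (not run here):
#   check_shared_volume    # should echo back hello-nfs if the volume is shared
```

The same index.html should then also appear in the PV's directory under /data/NFS/kubernetes on the NFS server.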

Additional notes