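Integrating the D435i with a PX4 drone and installing VINS-Fusion

PX4 + D435i simulation in Gazebo

Installing VINS-Fusion on Ubuntu (2): installing VINS-Fusion on Ubuntu 20.04

Back up the configuration files before changing any of the parameters below.

Setting the camera intrinsics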

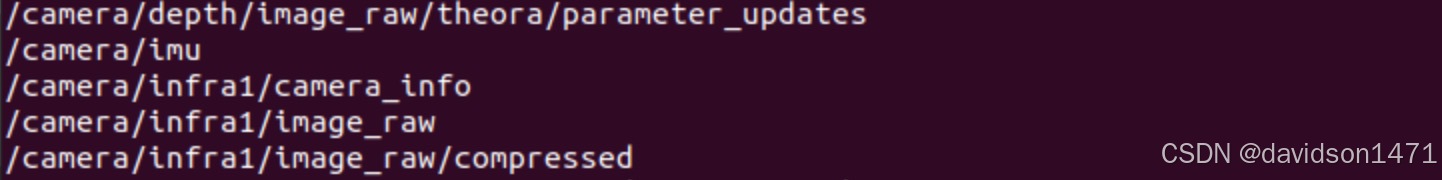

Start the drone simulation and list the ROS topics with rostopic list.

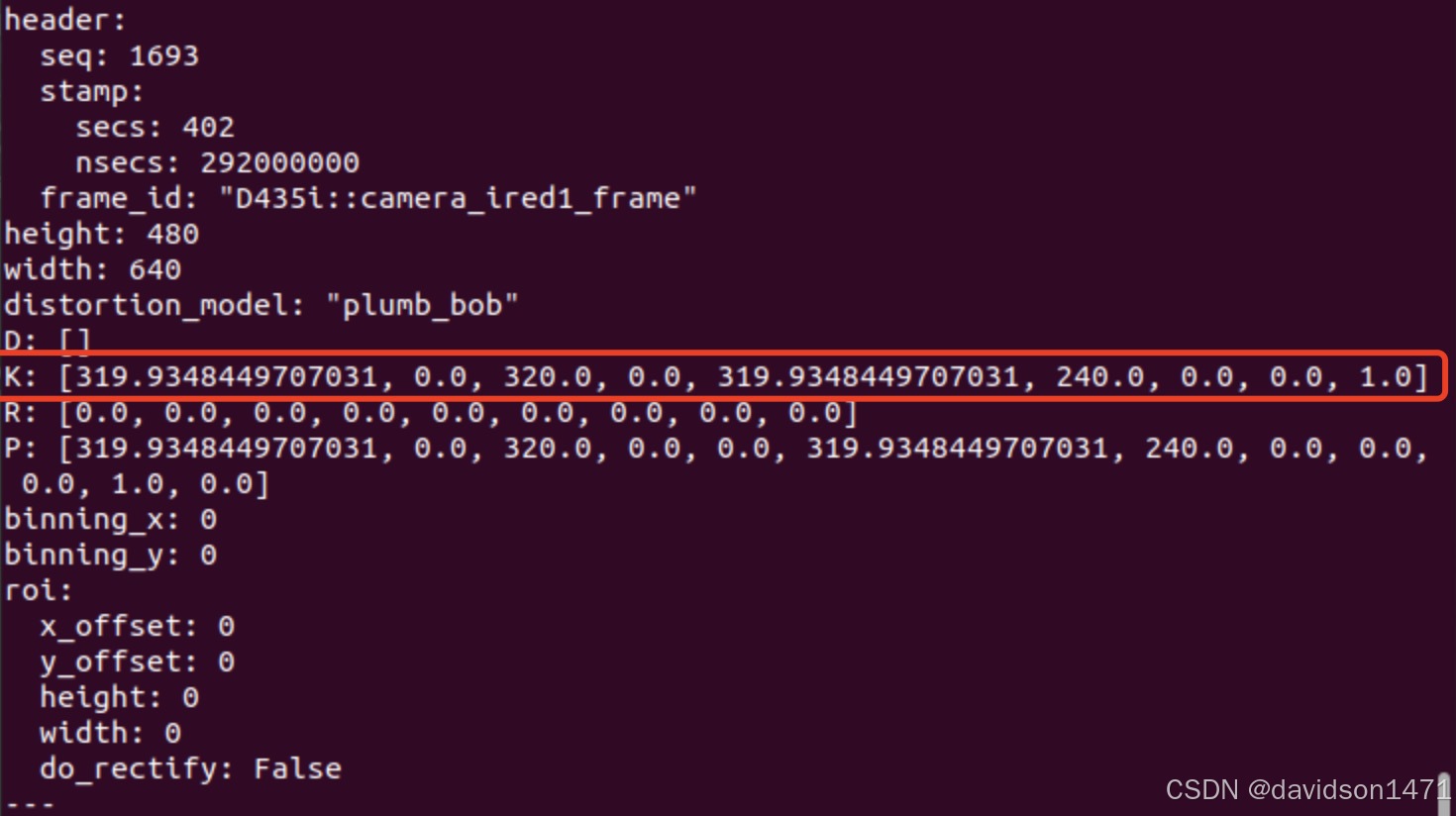

Check the camera intrinsics (in simulation the left and right cameras have identical parameters).

rostopic echo /camera/infra1/camera_info

# Fill in the camera intrinsics according to the matrix below

# K = [ fx 0 cx ]

# [ 0 fy cy ]

# [ 0 0 1 ]

#

# fx: 319.9348449707031

# fy: 319.9348449707031

# cx: 320.0

# cy: 240.0

right.yaml

%YAML:1.0

---

model_type: PINHOLE

camera_name: camera

image_width: 640

image_height: 480

distortion_parameters:

   k1: 0.0

   k2: 0.0

   p1: 0.0

   p2: 0.0

projection_parameters:

   fx: 319.9348449707031

   fy: 319.9348449707031

   cx: 320.0

   cy: 240.0
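The manual copy from camera_info into the calibration file can also be scripted. The sketch below assumes the K field of camera_info is the usual row-major 3x3 matrix given as 9 numbers, and that the simulated camera has zero distortion as in the file above. The function names intrinsics_from_k and render_pinhole_yaml are made up for this illustration and are not part of VINS-Fusion or ROS.

```python
def intrinsics_from_k(k):
    """Return (fx, fy, cx, cy) from a row-major 3x3 K given as 9 numbers."""
    if len(k) != 9:
        raise ValueError("K must have exactly 9 elements")
    return k[0], k[4], k[2], k[5]


def render_pinhole_yaml(k, width, height, camera_name="camera"):
    """Render a zero-distortion PINHOLE calibration file as text.

    Assumption: the layout mirrors the right.yaml shown above.
    """
    fx, fy, cx, cy = intrinsics_from_k(k)
    lines = [
        "%YAML:1.0",
        "---",
        "model_type: PINHOLE",
        f"camera_name: {camera_name}",
        f"image_width: {width}",
        f"image_height: {height}",
        "distortion_parameters:",
        "   k1: 0.0",
        "   k2: 0.0",
        "   p1: 0.0",
        "   p2: 0.0",
        "projection_parameters:",
        f"   fx: {fx}",
        f"   fy: {fy}",
        f"   cx: {cx}",
        f"   cy: {cy}",
    ]
    return "\n".join(lines) + "\n"


# K as echoed by /camera/infra1/camera_info in this simulation.
K = [319.9348449707031, 0.0, 320.0,
     0.0, 319.9348449707031, 240.0,
     0.0, 0.0, 1.0]

print(render_pinhole_yaml(K, 640, 480))
```

Since the left and right simulated cameras share the same intrinsics, the same text can be saved as both left.yaml and right.yaml.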

Setting the camera extrinsics

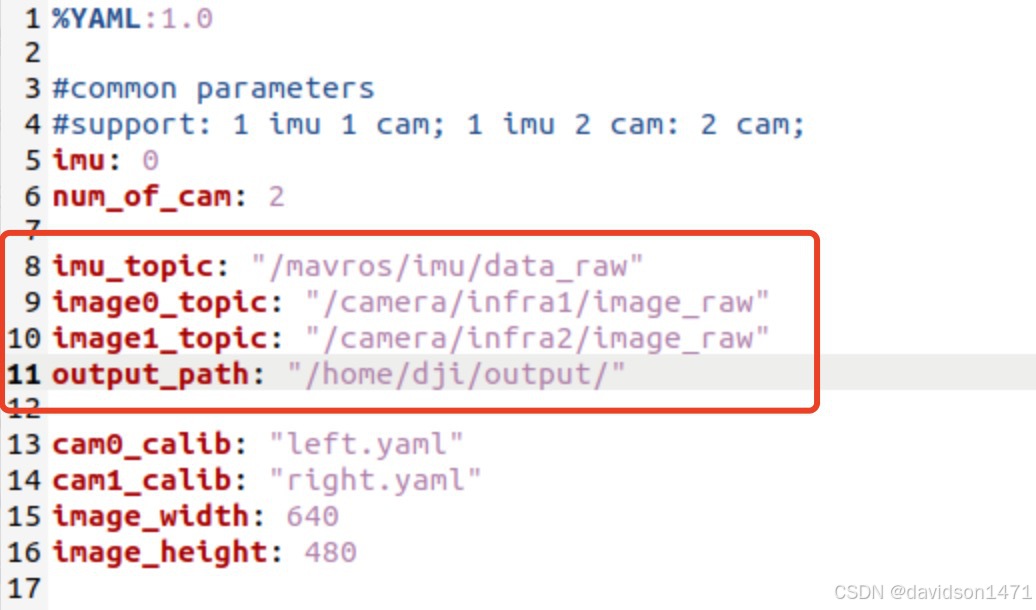

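Setting the VINS input topics

From the rostopic list output, pick the IMU topic of the drone or the camera, plus the left and right camera image topics.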
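Before editing the config it helps to confirm that every topic VINS will subscribe to is actually being published. The sketch below checks a pasted rostopic list output against the three topic names used in the stereo config later in this post. The helper name missing_topics and the sample output are made up for illustration.

```python
# Topic names taken from the VINS config later in this post.
REQUIRED_TOPICS = [
    "/mavros/imu/data_raw",
    "/camera/infra1/image_raw",
    "/camera/infra2/image_raw",
]


def missing_topics(rostopic_output, required=REQUIRED_TOPICS):
    """Return the required topics that do not appear in the pasted output."""
    available = {
        line.strip() for line in rostopic_output.splitlines() if line.strip()
    }
    return [topic for topic in required if topic not in available]


# Hypothetical output, only to exercise the function.
sample_output = """
/camera/infra1/camera_info
/camera/infra1/image_raw
/camera/infra2/image_raw
/mavros/imu/data
"""

print(missing_topics(sample_output))
```

An empty list means all three inputs are available; anything else is a topic name to fix in the yaml or a node that has not been started.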

Camera extrinsics (the IMU in Gazebo drifts heavily, so stereo + IMU localization is not recommended).

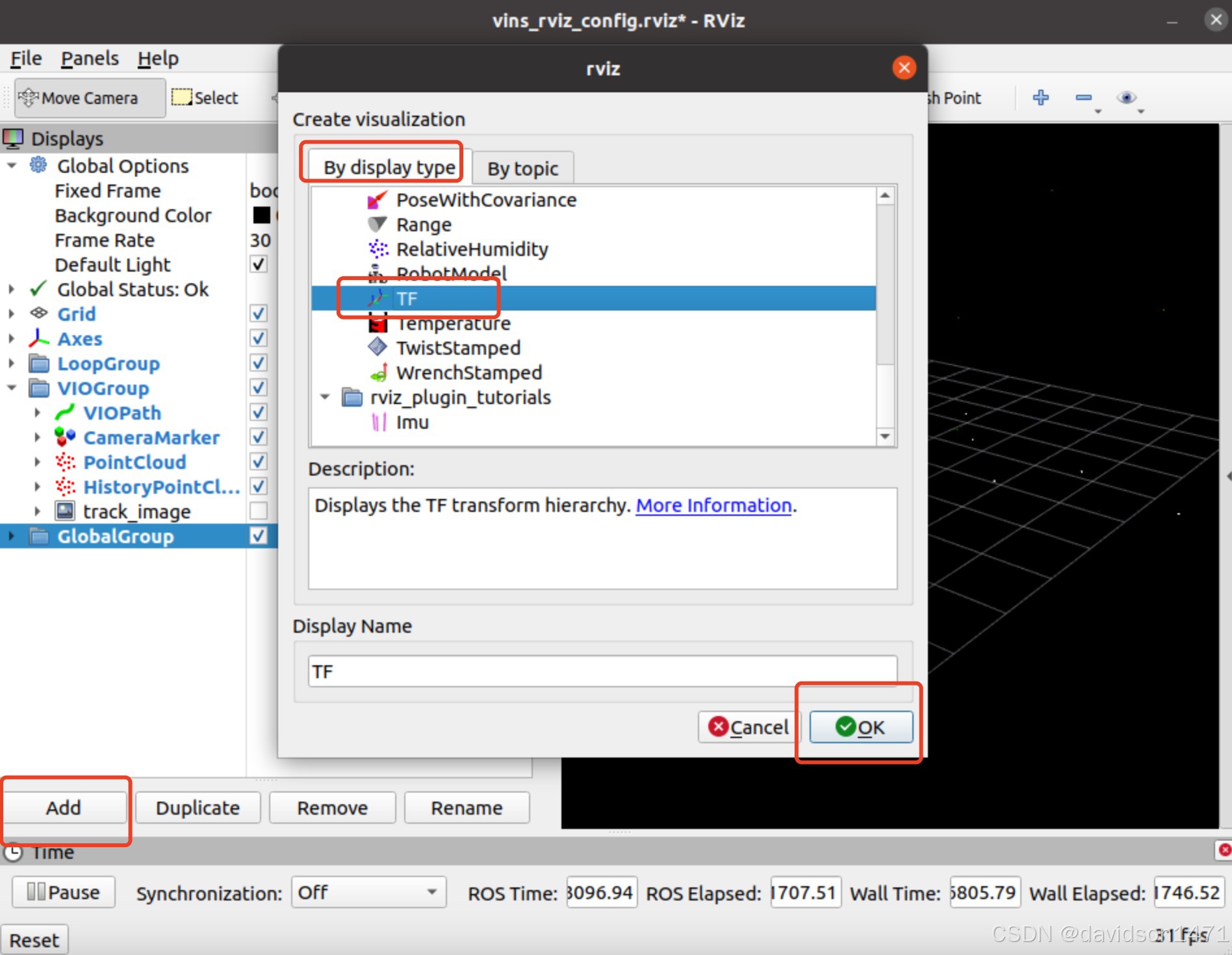

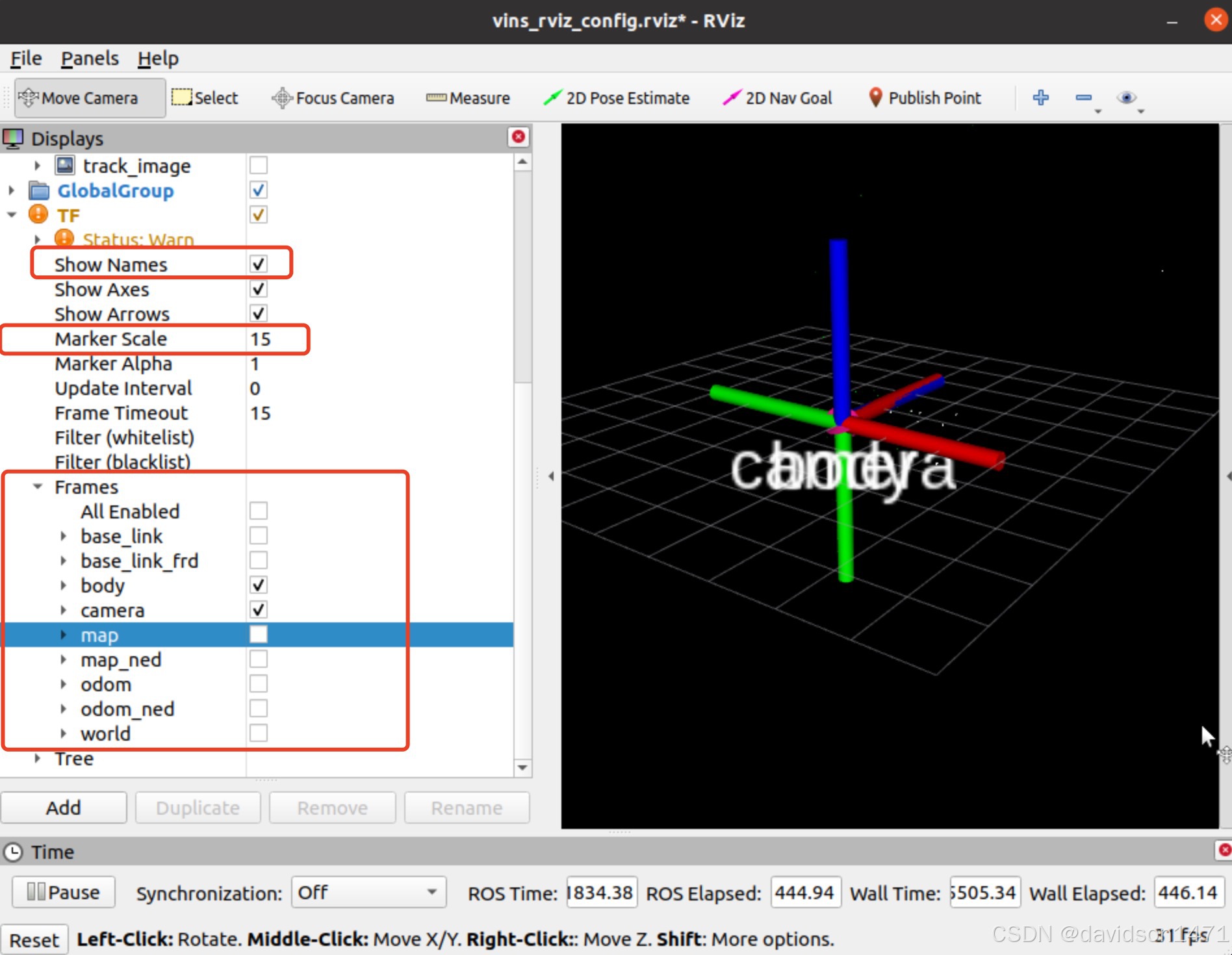

Start the drone Gazebo simulation, open RViz, and launch the VINS core node.

Add a TF display.

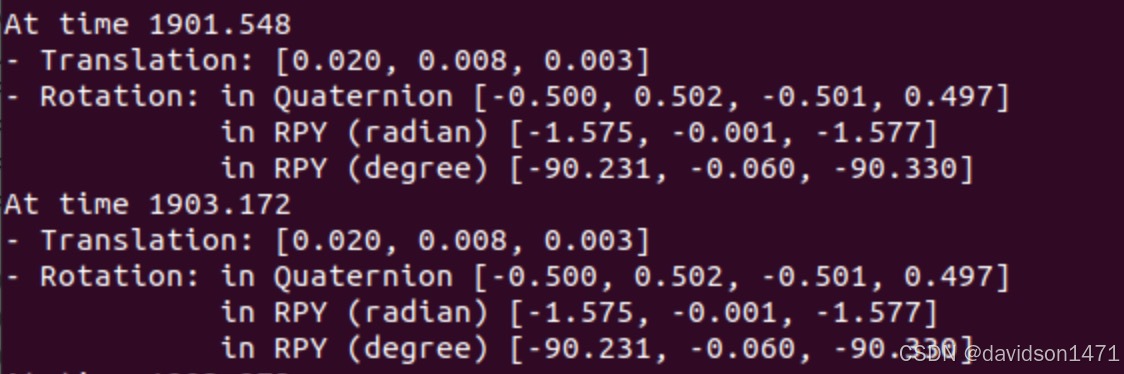

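Checking the relative transform

The drone's IMU is used here, so the extrinsics are computed between the body and camera frames.

Print the translation and quaternion: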

rosrun tf tf_echo body camera

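Compute the extrinsic matrix from the translation and the quaternion.

Note: the quaternion here is ordered q = [x, y, z, w].

PS: you can simply ask an AI assistant to do the calculation by giving it the following information:

Generate the camera extrinsic matrix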

At time 1903.172

- Translation: [0.020, 0.008, 0.003]

- Rotation: in Quaternion [-0.500, 0.502, -0.501, 0.497]

in RPY (radian) [-1.575, -0.001, -1.577]

in RPY (degree) [-90.231, -0.060, -90.330]

In the orientation quaternion, w is 0.497 (the last component).
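The same calculation can be done locally with a few lines of Python. This is a minimal sketch using the standard unit-quaternion to rotation-matrix formula with the [x, y, z, w] ordering printed by tf_echo. It assumes the tf_echo body camera output is the pose of the camera frame expressed in the body frame, which is what body_T_cam0 expects. The function name extrinsic_from_tf is made up for this example.

```python
import math


def extrinsic_from_tf(translation, quaternion_xyzw):
    """Build a 4x4 homogeneous transform from a translation and an
    [x, y, z, w] quaternion."""
    x, y, z, w = quaternion_xyzw
    norm = math.sqrt(x * x + y * y + z * z + w * w)
    if norm == 0.0:
        raise ValueError("quaternion must be non-zero")
    # tf_echo prints rounded values, so renormalise before converting.
    x, y, z, w = x / norm, y / norm, z / norm, w / norm
    tx, ty, tz = translation
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w), tx],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w), ty],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y), tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


# Values from the tf_echo output above.
T = extrinsic_from_tf([0.020, 0.008, 0.003], [-0.500, 0.502, -0.501, 0.497])

# Print row by row, ready to paste into the data field of body_T_cam0.
for row in T:
    print(", ".join(f"{value: .6f}" for value in row))
```

Because tf_echo rounds to three decimals, the result is only approximate; it is close to a pure axis permutation (camera z along body x), which is a quick way to sanity-check the output.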

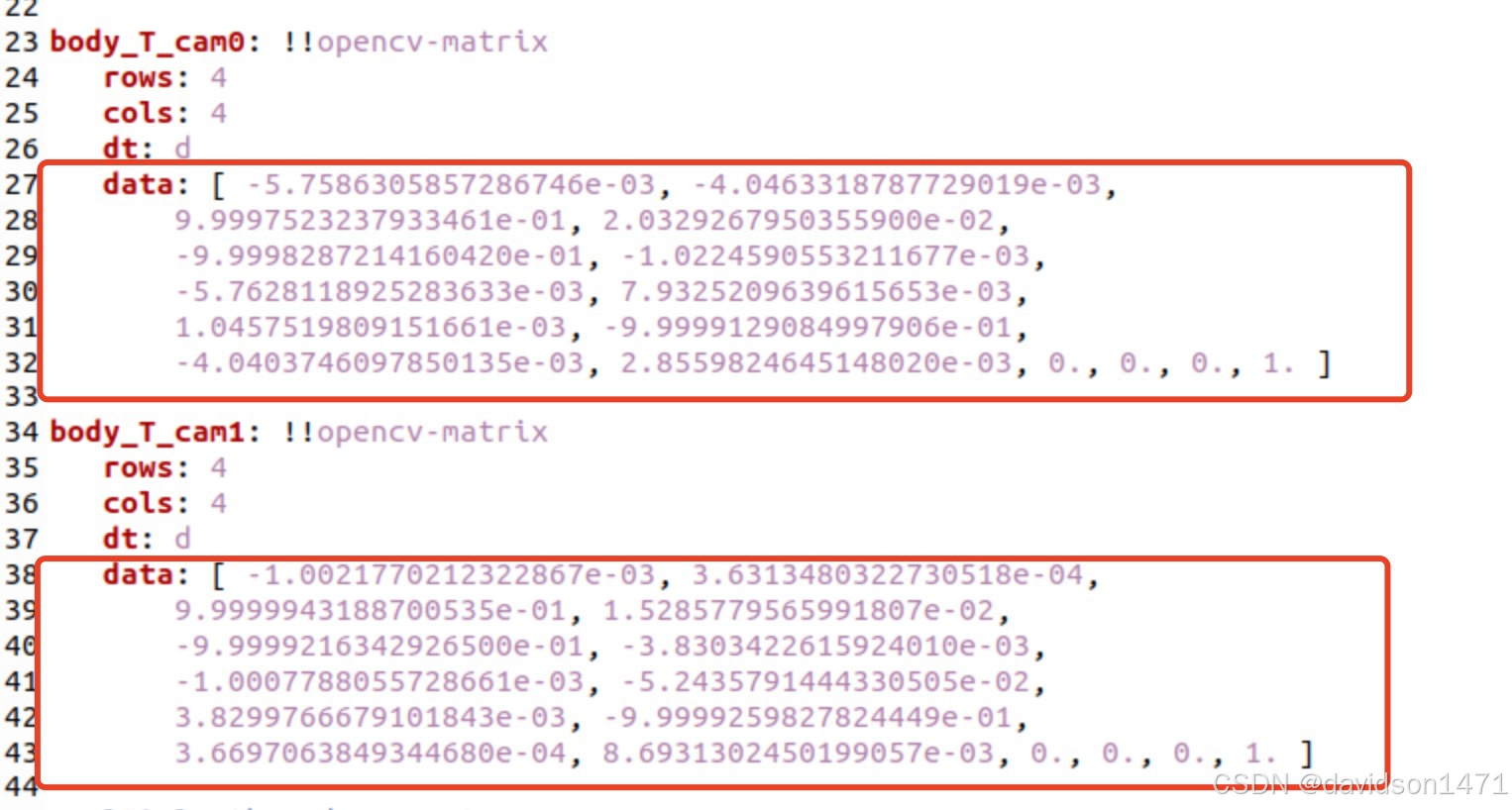

Replace the values in data with the generated extrinsics.

Refining the camera extrinsics

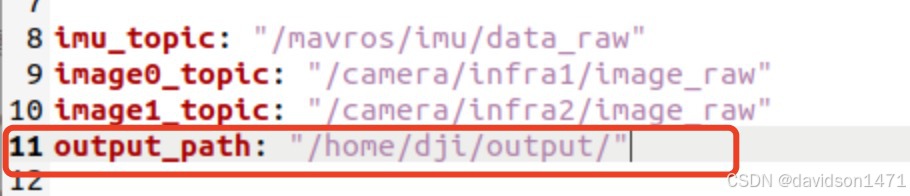

Set the VINS output path; it must be an absolute path.

You must set this yourself!
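A quick way to catch a bad output_path before launching VINS is to check it in Python. This is only a sketch: the function name check_output_path is made up, and the second check assumes the directory should already exist when VINS starts, which the source does not state.

```python
import os


def check_output_path(path):
    """Return a list of problems found with an output_path value."""
    problems = []
    if not os.path.isabs(path):
        # Relative paths and "~/..." shortcuts both end up here.
        problems.append("not an absolute path")
    elif not os.path.isdir(path):
        # Assumption: the directory is expected to exist beforehand.
        problems.append("directory does not exist")
    return problems


print(check_output_path("output/"))
```

An empty list means the path passed both checks.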

An extrinsic_parameter.csv file is generated in the output folder. Open it in a text editor and copy the generated extrinsics into the yaml file.

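Displaying track_image and setting the feature points

Set these in realsense_stereo_imu_config.yaml (mine is for the realsense_d435i; the file name may differ for other cameras).

# Show track_image

Set show_track to 1

# Maximum number of feature points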

max_cnt: 150 # max feature number in feature tracking

# Minimum distance between feature points

min_dist: 30 # min distance between two features
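To see how max_cnt and min_dist interact, the sketch below picks corners greedily by score and rejects any candidate closer than min_dist pixels to one already kept, stopping at max_cnt. It is an illustration of the idea only, not the VINS-Fusion implementation; the function name select_features and the candidate corners are made up.

```python
import math


def select_features(candidates, max_cnt, min_dist):
    """Pick up to max_cnt points, strongest first, keeping every pair of
    selected points at least min_dist pixels apart.

    candidates: list of (x, y, score) tuples.
    """
    kept = []
    for x, y, _score in sorted(candidates, key=lambda c: c[2], reverse=True):
        if len(kept) >= max_cnt:
            break
        if all(math.hypot(x - kx, y - ky) >= min_dist for kx, ky in kept):
            kept.append((x, y))
    return kept


# Hypothetical corner candidates: (x, y, score).
corners = [(100, 100, 0.9), (110, 105, 0.8), (200, 100, 0.7), (205, 300, 0.6)]

print(select_features(corners, max_cnt=150, min_dist=30))
```

A larger min_dist spreads the features more evenly over the image but leaves room for fewer of them, so the two settings are usually tuned together.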

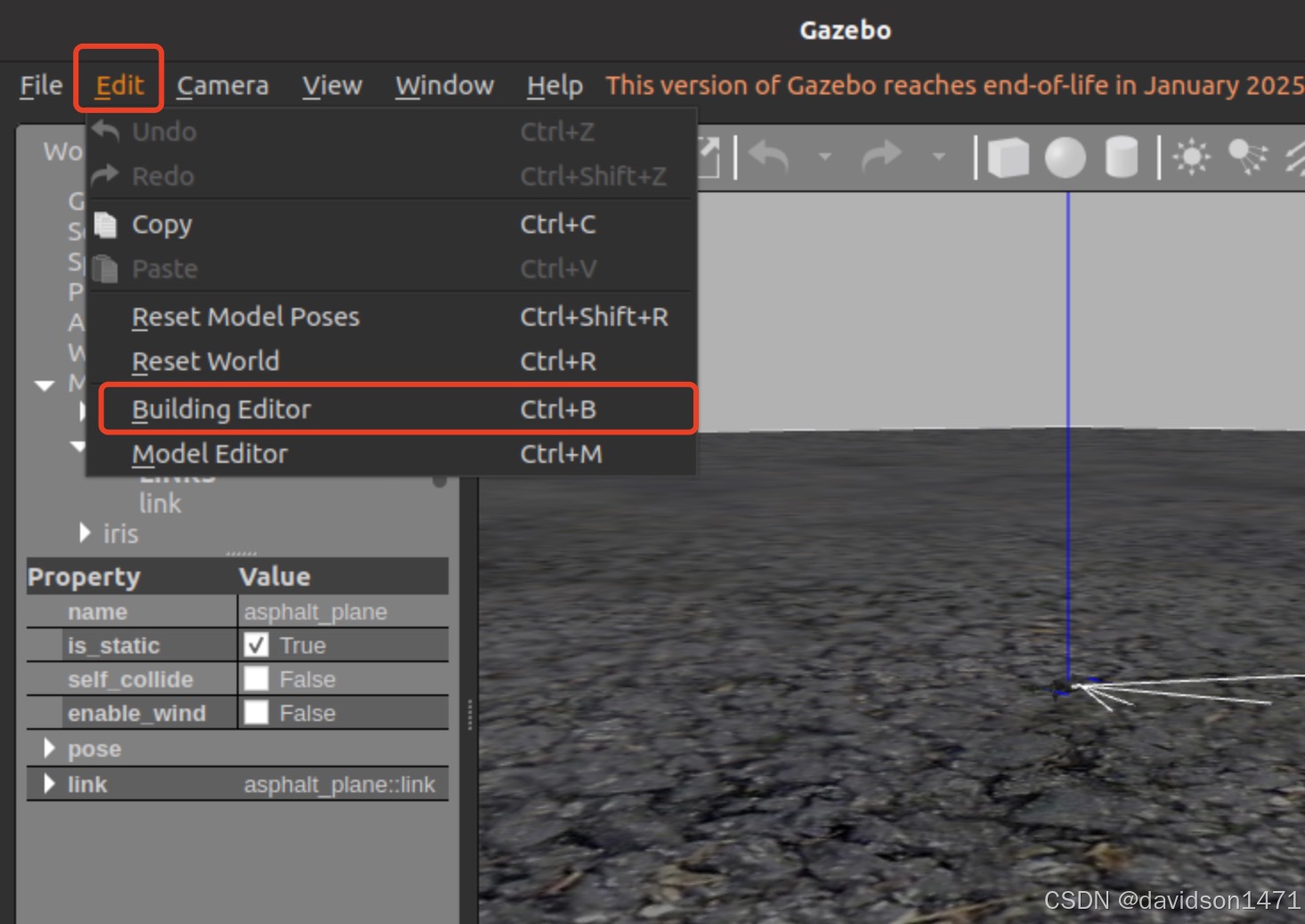

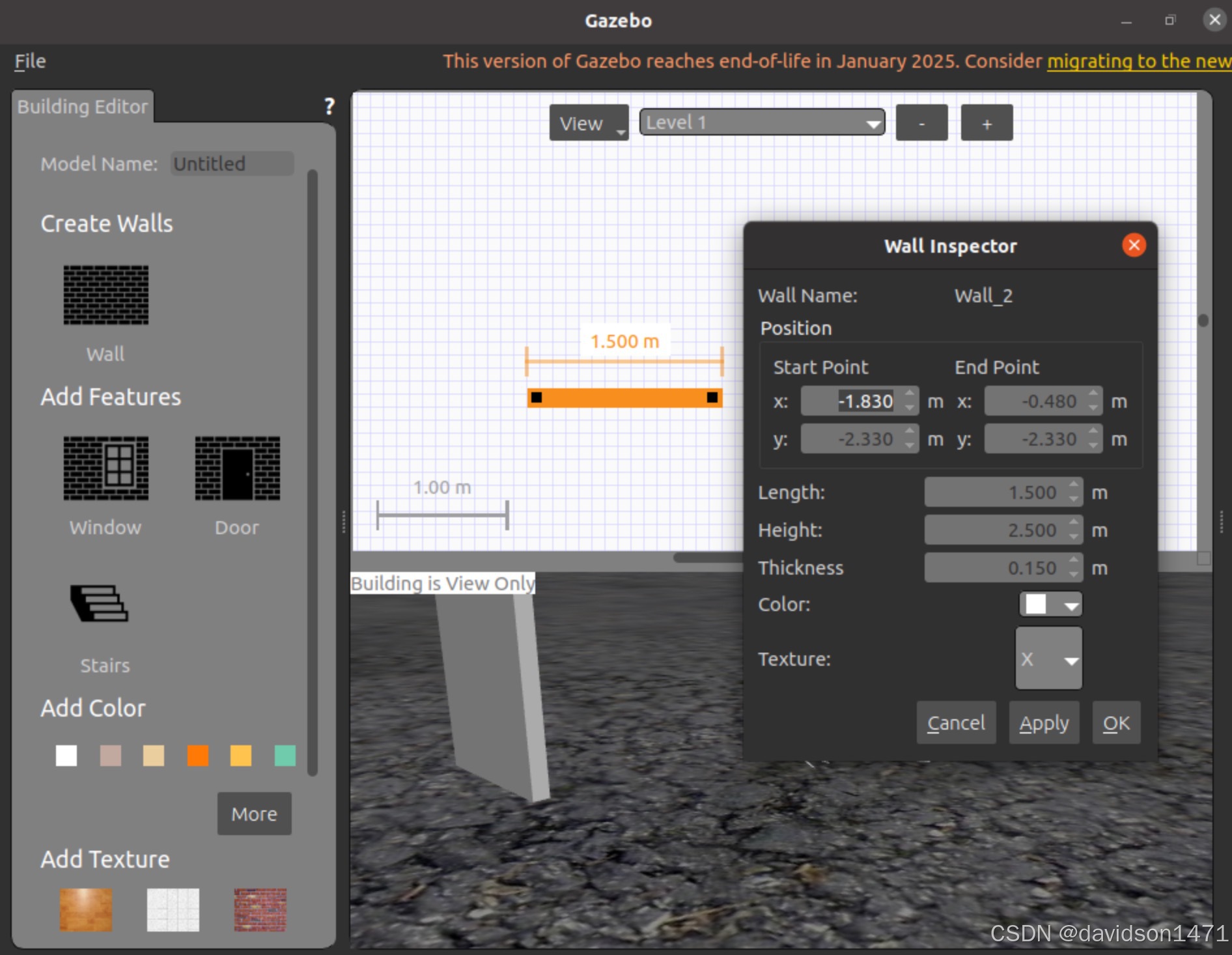

Building the map in Gazebo

PS: brick walls are recommended when building the map.

- Save path:

PX4-Autopilot/Tools/sitl_gazebo/models

Localization with stereo only (no IMU)

%YAML:1.0

#common parameters

#support: 1 imu 1 cam; 1 imu 2 cam: 2 cam;

# Disable the IMU

imu: 0

num_of_cam: 2

# Change the ROS input topics

imu_topic: "/mavros/imu/data_raw"

image0_topic: "/camera/infra1/image_raw"

image1_topic: "/camera/infra2/image_raw"

output_path: "/home/feng/Vision/output/"

cam0_calib: "left.yaml"

cam1_calib: "right.yaml"

image_width: 640

image_height: 480

# Extrinsic parameter between IMU and Camera.

estimate_extrinsic: 0 # 0 Have an accurate extrinsic parameters. We will trust the following imu^R_cam, imu^T_cam, don't change it.

# 1 Have an initial guess about extrinsic parameters. We will optimize around your initial guess.

# Keep the initial extrinsics below unchanged

body_T_cam0: !!opencv-matrix

   rows: 4

   cols: 4

   dt: d

   data: [ -5.7586305857286746e-03, -4.0463318787729019e-03,

       9.9997523237933461e-01, 2.0329267950355900e-02,

       -9.9998287214160420e-01, -1.0224590553211677e-03,

       -5.7628118925283633e-03, 7.9325209639615653e-03,

       1.0457519809151661e-03, -9.9999129084997906e-01,

       -4.0403746097850135e-03, 2.8559824645148020e-03, 0., 0., 0., 1. ]

body_T_cam1: !!opencv-matrix

   rows: 4

   cols: 4

   dt: d

   data: [ -1.0021770212322867e-03, 3.6313480322730518e-04,

       9.9999943188700535e-01, 1.5285779565991807e-02,

       -9.9999216342926500e-01, -3.8303422615924010e-03,

       -1.0007788055728661e-03, -5.2435791444330505e-02,

       3.8299766679101843e-03, -9.9999259827824449e-01,

       3.6697063849344680e-04, 8.6931302450199057e-03, 0., 0., 0., 1. ]

#Multiple thread support

multiple_thread: 1

#feature tracker parameters

# Feature point settings

max_cnt: 300 # max feature number in feature tracking

min_dist: 10 # min distance between two features

freq: 10 # frequency (Hz) of publishing the tracking result. At least 10 Hz for good estimation. If set to 0, the frequency will be the same as the raw image

F_threshold: 1.0 # ransac threshold (pixel)

# Show the stereo images

show_track: 1 # publish tracking image as topic

flow_back: 1 # perform forward and backward optical flow to improve feature tracking accuracy

#optimization parameters

max_solver_time: 0.04 # max solver iteration time (s), to guarantee real time

max_num_iterations: 8 # max solver iterations, to guarantee real time

keyframe_parallax: 10.0 # keyframe selection threshold (pixel)
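With the IMU disabled, a cheap sanity check on the two extrinsics is the stereo baseline they imply, that is, the distance between the two camera origins. The sketch below uses the translation columns of body_T_cam0 and body_T_cam1 from the config above (elements 3, 7 and 11 of each row-major data array) and assumes they are in metres. The function name stereo_baseline is made up for this example.

```python
import math

# Translation columns copied from body_T_cam0 and body_T_cam1 above.
T_CAM0 = (2.0329267950355900e-02, 7.9325209639615653e-03, 2.8559824645148020e-03)
T_CAM1 = (1.5285779565991807e-02, -5.2435791444330505e-02, 8.6931302450199057e-03)


def stereo_baseline(t_cam0, t_cam1):
    """Euclidean distance between the two camera origins."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(t_cam0, t_cam1)))


print(f"baseline: {stereo_baseline(T_CAM0, T_CAM1):.4f} m")
```

If the printed value is far from the baseline of the simulated camera model, the extrinsics were probably copied from a different sensor and should be regenerated from tf.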

Limiting the drone's flight speed in QGC

- MPC_XY_VEL_MAX

- MPC_Z_VEL_MAX_DN

- MPC_Z_VEL_MAX_UP

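References

Deploying VINS-Fusion on a simulated car in Gazebo

Running VINS-Fusion stereo visual-inertial SLAM in Gazebo simulation

Limiting the speed of a PX4 drone from the QGC ground station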