Official documentation:

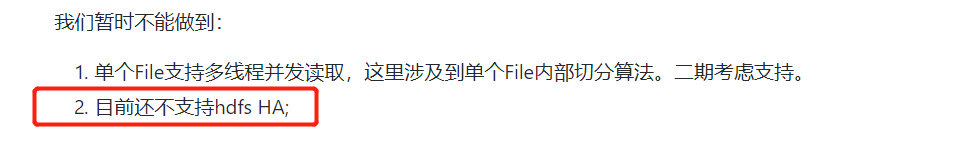

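The Reader plugin documentation states explicitly:

Yet the configuration does include HA-related settings.

With no other option, I decided to just try it! The Reader supports this parameter (hadoopConfig) just like the Writer does.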

datax_hive.json

```json
{
  "job": {
    "setting": {
      "speed": {
        "channel": 8
      },
      "errorLimit": {
        "record": 0,
        "percentage": 1.0
      }
    },
    "content": [
      {
        "reader": {
          "name": "hdfsreader",
          "parameter": {
            "path": "/user/hive/warehouse/ads.db/my_test_table/dt=${date}/*",
            "hadoopConfig": {
              "dfs.nameservices": "${nameServices}",
              "dfs.ha.namenodes.${nameServices}": "namenode1,namenode2",
              "dfs.namenode.rpc-address.${nameServices}.namenode1": "${FS}",
              "dfs.namenode.rpc-address.${nameServices}.namenode2": "${FSBac}",
              "dfs.client.failover.proxy.provider.${nameServices}": "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"
            },
            "defaultFS": "hdfs://${nameServices}",
            "column": [
              { "index": 0, "type": "String" },
              { "index": 1, "type": "Long" }
            ],
            "fileType": "orc",
            "encoding": "UTF-8",
            "fieldDelimiter": ","
          }
        },
        "writer": {
          "name": "txtfilewriter",
          "parameter": {
            "path": "/home/dev/data/result",
            "fileName": "test",
            "writeMode": "truncate",
            "dateFormat": "yyyy-MM-dd"
          }
        }
      }
    ]
  }
}
```

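```shell
# The three HA values (plus the partition date) are passed in dynamically from a shell script.
# nameServices is the nameservice defined when HA was enabled in CDH (here: nameservice1);
# myFS and myFSBac are the NameNodes' RPC services on port 8020, e.g. 192.168.2.123:8020
# Run from the DataX installation directory (bin/datax.py is DataX's launcher script).
python bin/datax.py -p "-DFS=${myFS} -DFSBac=${myFSBac} -DnameServices=${nameServices} -Ddate=${mydate}" datax_hive.json
```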
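The -p "-Dkey=value ..." option hands values to the ${key} placeholders in the job file. The sketch below approximates that substitution in Python so you can check the rendered config before launching. It is not DataX's actual code, which lives in its Java engine and may handle edge cases differently. Both `parse_dash_p` and `render_job` are hypothetical helpers.

```python
import json
from string import Template


def parse_dash_p(arg):
    """Turn '-DFS=a -DnameServices=b' into {'FS': 'a', 'nameServices': 'b'}."""
    params = {}
    for token in arg.split():
        if not token.startswith("-D") or "=" not in token:
            raise ValueError(f"unexpected token: {token!r}")
        key, value = token[2:].split("=", 1)
        params[key] = value
    return params


def render_job(template_text, params):
    """Expand ${name} placeholders, then parse the job text as JSON.

    Template.substitute raises KeyError for any placeholder without a value,
    so a forgotten -D argument fails here instead of deep inside Hadoop.
    """
    rendered = Template(template_text).substitute(params)
    return json.loads(rendered)
```

For example, `render_job(open("datax_hive.json").read(), parse_dash_p("-DFS=... -DFSBac=... -DnameServices=... -Ddate=..."))` returns the job as a dict. You can then inspect `hadoopConfig` with the real nameservice name filled into every key.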

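After adding these parameters, the job kept failing. DataX's built-in error analysis reported the most likely cause as HdfsWriter-06 (an IO exception while connecting to HDFS):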

```text
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - com.alibaba.datax.common.exception.DataXException: Code:[HdfsWriter-06], Description:[与HDFS建立连接时出现IO异常.]. - java.io.IOException: Couldn't create proxy provider class org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at org.apache.hadoop.hdfs.NameNodeProxies.createFailoverProxyProvider(NameNodeProxies.java:515)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at org.apache.hadoop.hdfs.NameNodeProxies.createProxy(NameNodeProxies.java:171)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:678)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:619)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at org.apache.hadoop.hdfs.DistributedFileSystem.initialize(DistributedFileSystem.java:149)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2653)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:92)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2687)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2669)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:371)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:170)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at com.alibaba.datax.plugin.writer.hdfswriter.HdfsHelper.getFileSystem(HdfsHelper.java:67)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at com.alibaba.datax.plugin.writer.hdfswriter.HdfsWriter$Job.init(HdfsWriter.java:47)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at com.alibaba.datax.core.job.JobContainer.initJobWriter(JobContainer.java:704)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at com.alibaba.datax.core.job.JobContainer.init(JobContainer.java:304)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at com.alibaba.datax.core.job.JobContainer.start(JobContainer.java:113)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at com.alibaba.datax.core.Engine.start(Engine.java:92)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at com.alibaba.datax.core.Engine.entry(Engine.java:171)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at com.alibaba.datax.core.Engine.main(Engine.java:204)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - Caused by: java.lang.reflect.InvocationTargetException
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at org.apache.hadoop.hdfs.NameNodeProxies.createFailoverProxyProvider(NameNodeProxies.java:498)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - ... 18 more
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - Caused by: java.lang.RuntimeException: Could not find any configured addresses for URI hdfs://nameservice1
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - at org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider.<init>(ConfiguredFailoverProxyProvider.java:93)
23-09-2019 12:44:47 CST test_hdfs_to_file INFO - ... 23 more
```


This is where the fun stopped: I was stuck here for several hours.
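The root cause at the bottom of the trace is "Could not find any configured addresses for URI hdfs://nameservice1". Roughly, `ConfiguredFailoverProxyProvider` throws this when the URI's host part (the nameservice) has no NameNode RPC addresses in the configuration the client received. That happens when the `dfs.nameservices`, `dfs.ha.namenodes.*` and `dfs.namenode.rpc-address.*` keys never reach the client, or when they don't line up. The function `find_ha_problems` is a hypothetical pre-flight check that compares those keys against `defaultFS`. It only approximates Hadoop's lookup, so an empty result does not guarantee Hadoop will accept the config.

```python
from urllib.parse import urlparse


def _split_csv(value):
    return [item.strip() for item in value.split(",") if item.strip()]


def find_ha_problems(default_fs, hadoop_config):
    """Return a list of problems; an empty list means the HA keys line up."""
    problems = []
    parsed = urlparse(default_fs)
    # netloc keeps the original case; .hostname would lowercase "USDP-big05".
    ns = parsed.netloc
    if parsed.scheme != "hdfs" or not ns:
        problems.append(f"defaultFS should look like hdfs://<nameservice>: {default_fs!r}")
    # A literal ${...} means a -D parameter was never substituted.
    for key, value in hadoop_config.items():
        if "${" in key or "${" in str(value):
            problems.append(f"unresolved placeholder: {key!r} = {value!r}")
    if ns not in _split_csv(hadoop_config.get("dfs.nameservices", "")):
        problems.append(f"{ns!r} is not listed in dfs.nameservices")
    namenodes = _split_csv(hadoop_config.get(f"dfs.ha.namenodes.{ns}", ""))
    if not namenodes:
        problems.append(f"dfs.ha.namenodes.{ns} is missing or empty")
    for nn in namenodes:
        if not hadoop_config.get(f"dfs.namenode.rpc-address.{ns}.{nn}"):
            problems.append(f"dfs.namenode.rpc-address.{ns}.{nn} is missing")
    if not hadoop_config.get(f"dfs.client.failover.proxy.provider.{ns}"):
        problems.append(f"dfs.client.failover.proxy.provider.{ns} is missing")
    return problems
```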

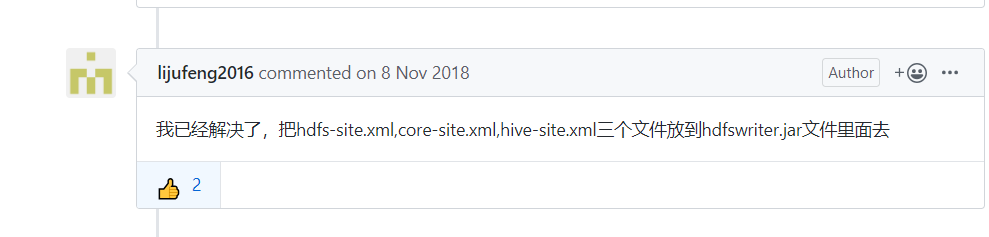

Searching DataX's GitHub Issues for the keyword "HA" finally turned up an answer:

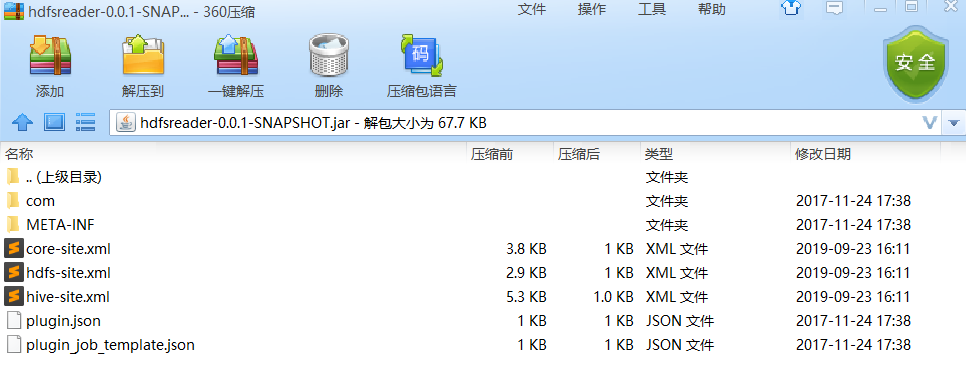

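Location of the hdfsreader jar on Linux (under the DataX installation path): /datax/plugin/reader/hdfsreader

On my machine: /opt/module/datax/plugin/reader/hdfsreader

As for where these three files come from, I posted my own answer under that issue as well.

Steps: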

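- Download the three corresponding files.
- Back up datax/plugin/reader/hdfsreader/hdfsreader-0.0.1-SNAPSHOT.jar under the DataX installation path.
- Open hdfsreader-0.0.1-SNAPSHOT.jar with an archive tool without extracting it (e.g. right-click it and open it with 360Zip), then drag the three files straight into it. If you did this on a copy of the jar somewhere else, use the modified jar to replace the original hdfsreader-0.0.1-SNAPSHOT.jar in DataX's hdfsreader directory.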
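The backup-and-patch steps above can also be scripted. Below is a minimal sketch, assuming Python 3 and that the files belong at the root of the jar, which is where a classpath lookup for a bare name like hdfs-site.xml finds them. `patch_jar` is a hypothetical helper. The JDK's `jar uf <jar> <files>` command performs the same kind of in-place update from the command line.

```python
import shutil
import zipfile
from pathlib import Path


def patch_jar(jar_path, files, backup_dir):
    """Back up jar_path into backup_dir, then add each file at the jar root.

    Returns the path of the backup copy.
    """
    jar = Path(jar_path)
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    # Keep the backup outside the plugin directory so it cannot end up on the plugin's classpath.
    backup = backup_dir / jar.name
    shutil.copy2(jar, backup)
    with zipfile.ZipFile(jar, "a", compression=zipfile.ZIP_DEFLATED) as zf:
        existing = set(zf.namelist())
        for f in map(Path, files):
            if f.name in existing:
                # zipfile would silently add a second entry with the same name; refuse instead.
                raise FileExistsError(f"{f.name} is already in {jar.name}; restore {backup} first")
            # arcname=f.name places the file at the jar root, like dragging it into the archive.
            zf.write(f, arcname=f.name)
    return backup
```

Pass the jar under plugin/reader/hdfsreader, the three downloaded files, and a backup directory outside the DataX tree.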

From then on it just works, because hdfs-site.xml already specifies dfs.nameservices=nameservice1 along with the rest of the HA configuration.
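To see which HA settings the client will pick up from hdfs-site.xml, you can pull them out of the file. The same output also works as a `hadoopConfig` block if you prefer that to patching the jar. This sketch uses only the standard library. The key prefixes are the ones used in datax_hive.json, and `ha_config_from_hdfs_site` is a hypothetical name.

```python
import xml.etree.ElementTree as ET

# Prefixes of the HA keys used in the DataX job's hadoopConfig.
HA_KEY_PREFIXES = (
    "dfs.nameservices",
    "dfs.ha.namenodes.",
    "dfs.namenode.rpc-address.",
    "dfs.client.failover.proxy.provider.",
)


def ha_config_from_hdfs_site(xml_text):
    """Collect HA-related <property> entries from hdfs-site.xml text into a dict."""
    root = ET.fromstring(xml_text)
    config = {}
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        value = (prop.findtext("value") or "").strip()
        # str.startswith accepts a tuple of prefixes.
        if name.startswith(HA_KEY_PREFIXES):
            config[name] = value
    return config
```

Dumping the result with `json.dumps(..., indent=2)` gives key/value pairs in the same shape as the `hadoopConfig` object in the job JSON.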

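I also found that with this approach, the DataX job JSON doesn't even need the hadoopConfig parameter. It couldn't be better.

That said, in the end I configured it both ways.

First I added this parameter to the original JSON file: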

```json
"parameter": {
  "hadoopConfig": {
    "dfs.nameservices": "USDP-big05",
    "dfs.ha.namenodes.USDP-big05": "nn1,nn2",
    "dfs.namenode.rpc-address.USDP-big05.nn1": "bgdatsv1.pxsemic.com:8020",
    "dfs.namenode.rpc-address.USDP-big05.nn2": "bgdatsv2.pxsemic.com:8020",
    "dfs.client.failover.proxy.provider.USDP-big05": "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"
  },
```

Then I also added "defaultFS": "hdfs://USDP-big05", so it became:

```json
"parameter": {
  "hadoopConfig": {
    "dfs.nameservices": "USDP-big05",
    "dfs.ha.namenodes.USDP-big05": "nn1,nn2",
    "dfs.namenode.rpc-address.USDP-big05.nn1": "bgdatsv1.pxsemic.com:8020",
    "dfs.namenode.rpc-address.USDP-big05.nn2": "bgdatsv2.pxsemic.com:8020",
    "dfs.client.failover.proxy.provider.USDP-big05": "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"
  },
  "defaultFS": "hdfs://USDP-big05",
  "fileType": "text",
  "path": "/tmp",
  ...
```

Then, with the jar step above done as well, the job ran successfully.
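If the same block has to be written for several clusters, it can be generated. The sketch below builds the `hadoopConfig` and `defaultFS` entries shown above from a nameservice name and its NameNode addresses. The helper name `ha_reader_params` is hypothetical.

```python
FAILOVER_PROVIDER = "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"


def ha_reader_params(nameservice, namenodes):
    """Build hadoopConfig/defaultFS entries for an HA nameservice.

    namenodes maps NameNode IDs (e.g. "nn1") to RPC addresses ("host:8020").
    """
    if not namenodes:
        raise ValueError("at least one NameNode is required")
    hadoop_config = {
        "dfs.nameservices": nameservice,
        # Joining a dict joins its keys; insertion order keeps the caller's ID order.
        f"dfs.ha.namenodes.{nameservice}": ",".join(namenodes),
    }
    for nn_id, address in namenodes.items():
        hadoop_config[f"dfs.namenode.rpc-address.{nameservice}.{nn_id}"] = address
    hadoop_config[f"dfs.client.failover.proxy.provider.{nameservice}"] = FAILOVER_PROVIDER
    return {"hadoopConfig": hadoop_config, "defaultFS": f"hdfs://{nameservice}"}
```

`json.dumps(ha_reader_params("USDP-big05", {"nn1": "bgdatsv1.pxsemic.com:8020", "nn2": "bgdatsv2.pxsemic.com:8020"}), indent=2)` reproduces the two entries above. You can paste the result into the reader's "parameter" object.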

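A successful run looks like this: an error is reported midway, and about ten seconds later the client connects to the other NameNode and carries on. That is what you see when namenode1 goes down while the job is running. If it goes down at any other time, you won't notice anything at all.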
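That pause is the HDFS client failing over. `ConfiguredFailoverProxyProvider` keeps the configured NameNodes in a list and, on failover, moves to the next one in round-robin order, while a retry policy decides how long to wait between attempts. The sketch below models only the cycling, with a fixed delay. This is a simplification: Hadoop's real policy uses backoff and its own exception types. `call_with_failover` is a hypothetical helper.

```python
import time


def call_with_failover(namenodes, op, max_attempts=4, delay_s=0.0):
    """Call op(namenode), moving to the next NameNode after each ConnectionError.

    Simplified model: round-robin over the configured NameNodes with a fixed
    delay between attempts.
    """
    if not namenodes:
        raise ValueError("no NameNodes configured")
    last_error = None
    for attempt in range(max_attempts):
        namenode = namenodes[attempt % len(namenodes)]  # nn1, nn2, nn1, ...
        try:
            return op(namenode)
        except ConnectionError as err:
            last_error = err
            time.sleep(delay_s)  # stands in for the wait before the next attempt
    raise ConnectionError(f"all {max_attempts} attempts failed") from last_error
```

With namenode1 down, the first call fails and the second goes to namenode2. This matches the one-error-then-recovery pattern described above.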